In my last post I promised to give more details about some rabbit holes that I went down during the investigation, including page tables, locks, WMI, and a vmmap bug. Those details are here, along with updated code samples. But first, a really quick summary of the original issue:

In the last post I talked about how every time a CFG-enabled process allocates executable memory some Control Flow Guard (CFG) memory is allocated as well. Windows never frees the CFG memory so if you keep allocating and freeing executable memory at different addresses then your process can accumulate an arbitrary amount of CFG memory. Chrome was doing this and that was leading to an essentially unbounded waste of memory, and hangs on some machines.

And, I have to say, hangs are hard to avoid if VirtualAlloc starts running more than a million times slower than normal.

In addition to the wasted CFG memory there was some other wasted memory, although it wasn’t as bad as vmmap suggested.

CFG and pages

Both code memory and CFG memory are ultimately allocated in 4-KB pages (more on that later). Since 4 KiB of CFG memory can describe 256 KiB of code memory (details on that later) that means that if you allocate a 256 KiB block of code memory that is 256 KiB aligned then you will get one 4 KiB CFG page. And, if you allocate a 4 KiB block of code memory then you will still get a 4 KiB CFG page, but with most of it unused.

Things get tricky when executable memory is freed. If you VirtualFree a block of executable memory that is not a multiple of 256 KiB or is not 256 KiB aligned then the OS would have to do some bookkeeping to see whether any other executable memory is still using the CFG pages. The CFG authors decided not to bother with this complexity and they just always leave the CFG memory allocated – always. That’s unfortunate. That means that when my test program allocates and then frees 1 GiB of aligned executable memory, this leaves 16 MiB of CFG memory allocated.

Things get tricky when executable memory is freed. If you VirtualFree a block of executable memory that is not a multiple of 256 KiB or is not 256 KiB aligned then the OS would have to do some bookkeeping to see whether any other executable memory is still using the CFG pages. The CFG authors decided not to bother with this complexity and they just always leave the CFG memory allocated – always. That’s unfortunate. That means that when my test program allocates and then frees 1 GiB of aligned executable memory, this leaves 16 MiB of CFG memory allocated.

And, more practically, this means that when Chrome’s JavaScript engine allocates and then frees 128 MiB of aligned executable memory (not all of it was committed, but the whole address range was allocated and freed at once) then up to 2 MiB of CFG memory will be left allocated, even though it’s trivially provable that it could be freed. Since Chrome was repeatedly allocating/freeing code memory at randomized addresses this was causing the problem that I discovered.

Additional wasted memory

On any modern operating system each process gets their own virtual memory address space so that the operating system can isolate processes and protect memory. It does that using a memory-management unit (MMU) and page tables. Memory is broken up into 4-KiB pages – that’s the smallest amount of memory that the OS can give you. Each page needs to be pointed to by an eight-byte page-table entry, and those are themselves stored in 4-KiB pages. Each of those pages can point to only 512 different memory pages, so we need a hierarchy of page tables. For the 48-bit address space supported on an x64 operating system:

- The level-one page table can cover 256 TiB (48 bits) by pointing to 512 different level-two page tables

- Each level-two page table can cover 512 GiB by pointing to 512 different level-three page tables

- Each level-three page table can cover 1 GiB by pointing to 512 different level-four page tables

- Each level-four page table can cover 2 MiB by pointing to 512 different physical pages

The MMU indexes into the level-one page table with the first (of 48) 9 address bits, then into the pointed-to level-two page table with the next 9 address bits, then into the pointed-to level-three page table with the next 9 address bits, then into the level-four page table with the next 9 address bits. At that point the MMU has used 36 bits, has 12 remaining, and those are use to index into the 4 KiB page whose address was found in the level-four page table. Phew.

If all of these page-table levels were fully populated you’d need more than 512 GiB of RAM just for them, so they are sparsely populated and filled in as needed. This means that when you allocate a page of memory the OS may need to allocate some page tables – anywhere from zero to three of them, depending on whether your allocation is in a previously unused 2 MiB region, previously unused 1 GiB region, or previously unused 512 GiB region (the level-one page table is always allocated).

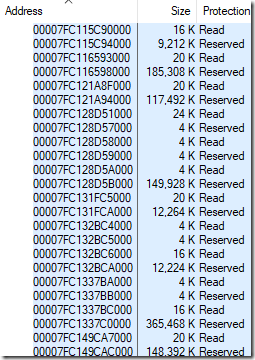

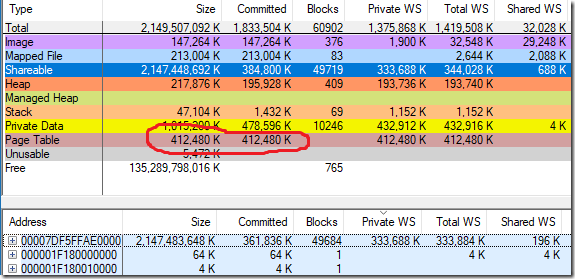

In short, sparse allocations can be significantly more expensive than nearby allocations, because page tables can’t be shared as much. The leaking CFG allocations were spread out quite sparsely so when vmmap told me that Chrome was using 412,480 KiB of page tables (edited out of the previous blog post) I assumed that the numbers were correct. Here’s the same screenshot of vmmap showing the chrome.exe memory layout that I had in the last blog post, but with the Page Table row shown:

But something didn’t seem right. I ended up adding a page-table simulator to my VirtualScan tool that would count how many page table pages were needed for all of the committed memory in the process being scanned. This is simply a matter of walking through committed memory, incrementing a counter every time a new multiple of 2 MiB, 1 GiB, or 512 GiB is encountered.

I quickly found that my simulator results matched vmmap on normal processes, but on processes with a large amount of CFG memory the results were off, by roughly the amount of CFG working set. For the process above where vmmap said there were 402.8 MiB (412,480 KiB) of page tables my tool said there were 67.7 MiB.

Scan time, Committed, page tables, committed blocks

Total: 41.763s, 1457.7 MiB, 67.7 MiB, 32112, 98 code blocks

CFG: 41.759s, 353.3 MiB, 59.2 MiB, 24866

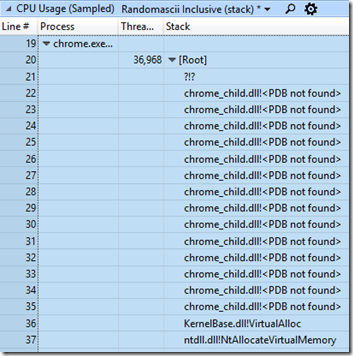

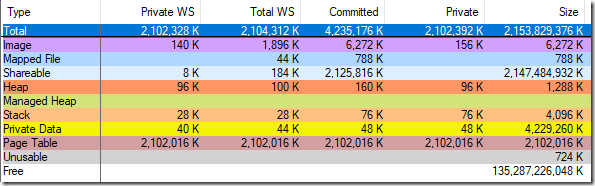

I was able to independently verify that vmmap was wrong by running VAllocStress which in its default settings causes Windows to commit 2 GiB of CFG memory. vmmap claimed that it had also allocated 2 GiB of page tables:

And yet, when I killed the process Task Manager showed the commit amount going down by just 2 GiB. So, vmmap is wrong, my quick-hack page-table calculations are correct, and after discussion with a helpful twitterati the vmmap bug report has been passed along and should get fixed. The CFG memory still consumes a lot of page table entries (59.2 MiB in the example above) but not as many as vmmap said, and when the fix ships it will consume hardly any.

What is CFG and CFG memory?

I want to step back and give a bit more information on what CFG is.

CFG is short for Control Flow Guard. It is an anti-exploit technique used to halt attacks that overwrite function pointers. When CFG is enabled the compiler and OS can work together to make sure a branch target is valid. First the relevant CFG control byte is loaded from the 2-TiB CFG reservation. A 64-bit process on Windows gets a 128-TiB address space so dividing the branch target address by 64 lets us find the relevant CFG byte for that target.

uint8_t cfg_byte = cfg_base[size_t(target_addr) / 64];

Now we have one byte that is supposed to describe which addresses in a 64-byte range are valid branch targets. To make that work CFG treats the byte as four two-bit values, each one corresponding to a 16-byte range. That two-bit number (whose value goes from zero to three) is interpreted like this:

- 0 – all targets in this sixteen-byte block are invalid indirect branch targets

- 1 – the start address in this sixteen-byte block is a valid indirect branch target

- 2 – related to “suppressed” CFG calls – the address is potentially invalid

- 3 – Non-aligned addresses in this sixteen-byte block are valid indirect branch targets, however the 16-byte aligned address is potentially invalid

If an indirect branch target is found to be invalid then the process is terminated and an exploit is avoided. Hurray!

From this we can tell that indirect branch targets should be 16-byte aligned for maximum security, and we can see why the amount of CFG memory used by a process will be roughly 1/64th the amount of code memory.

The actual CFG code loads 32-bits at a time, but that is just an implementation detail. Many sources describe the CFG memory as one-bit per 8 bytes rather than two-bits per 16 bytes. My explanation is better.

And that’s why we can’t have nice things

The hang which helped me find this memory problem happened for two reasons. One reason is that the scanning of CFG memory on Windows 10 16299 or earlier is painfully slow. I’ve seen the scan of the address space of a process take 40 or more seconds and literally 99.99% of that time was scanning the CFG memory reservation, even though the CFG reservation was only about three quarters of the committed memory blocks. I don’t know why the scanning was so slow and since it is fixed in Windows 10 17134 I don’t care enough to investigate.

This slow scanning caused a hang because gmail wanted to get the CFG reservation and WMI was holding a lock while scanning. But the lock on that memory reservation wasn’t being held the entire length of the scan. In my sample above there were ~49,000 blocks in the CFG region and the NtQueryVirtualMemory function, which acquires and releases the lock, was being called once for each of them. Therefore the lock was being acquired and released ~49,000 times and was held for, on average, less than one ms at a time.

But for some reason, even though the lock was released 49,000 times the Chrome process was never able to acquire it. That’s not fair!

And that is exactly the problem. As I said last time:

This is because Windows locks are, by design, not fair and if a thread releases a lock and then tries to reacquire it immediately then it can, in cases like this, reacquire it every single time.

A fair lock means that two threads fighting over a lock will alternate, with each making progress. However there will be lots of context switches, which are expensive, and the lock might spend a lot of time with no thread inside its critical region.

Unfair locks are cheaper, and they don’t force threads to wait in a line. They can just grab the lock, as Joe Duffy’s article mentions. Joe Duffy also says:

The change to unfair locks clearly has the risk of leading to starvation. But, statistically speaking, timing in concurrent systems tends to be so volatile that each thread will eventually get its turn to run, probabilistically speaking.

So how can we reconcile Joe’s 2006 statement about the rarity of starvation with my experience of a 100% repeatable and long-running starvation problem? I think the main reason is something else that happened in 2006. Intel launched the Core Duo, and multi-core computers began to become ubiquitous.

Because it turns out that my starvation problem will only happen on a multi-core system! On a multi-core system the WMI thread will release the lock, signal the Chrome thread to wake up, and then continue working. Because the WMI thread is already running it has a “head start” on the Chrome thread and it can easily re-enter NtQueryVirtualMemory and reacquire the lock before Chrome has a chance to get there.

On a single-core system you can, obviously, only have a single thread running at a time. Windows usually boosts the priority of a thread that has just been readied and that priority boost means that when the lock is released Chrome’s thread will be readied and will immediately preempt the WMI thread. This gives the Chrome thread lots of time to wake up and acquire the lock and the starvation never happens.

You see? On the multi-core system the priority boost will, in most cases, have no effect on the WMI thread, because it will be on a different core!

This means that a system with extra cores may end up being less responsive than one with the same workload and fewer cores. Curiously enough this means that if my computer had been busier – if it had had threads of the appropriate priority running on all of the CPU cores – then the hang might have been avoided (don’t try this at home).

So, unfair locks have higher throughput but can lead to starvation. I suspect that the solution is what I call “occasionally-fair” locks which would be unfair, say, 99% of the time, but would be fair 1% of the times when a contended lock was released. This would give most of the performance advantages, while avoiding most starvation. Windows locks used to be fair, they probably could be fair again, and maybe being occasionally fair would be the perfect balance. Disclaimer: I am not a locking expert or an OS engineer, but I’d be interested to hear thoughts on this, and at least I’m not the first to suggest something like this.

Linus Torvalds recently weighed in on the importance of fairness in locks – here and here. Maybe it’s time for the pendulum to swing the other way on Windows.

In summary: Holding a lock for seconds at a time is bad form, and restricts parallelism.

However, on multi-core systems with unfair locks, releasing and then immediately reacquiring the lock behaves almost identically – other threads will have no chance to sneak in.

Close call with ETW

I rely on ETW tracing for all of these investigations so I was a bit horrified when I started investigation this issue and found that Windows Performance Analyzer (WPA) couldn’t load Chrome’s symbols. It used to work, just last week, I’m certain. What happened…

I rely on ETW tracing for all of these investigations so I was a bit horrified when I started investigation this issue and found that Windows Performance Analyzer (WPA) couldn’t load Chrome’s symbols. It used to work, just last week, I’m certain. What happened…

What happened is that Chrome M68 shipped, and it is linked with lld-link instead of with VC++’s linker, and if you run dumpbin and look at the debug information you see this:

C:\b\c\b\win64_clang\src\out\Release_x64\./initialexe/chrome.exe.pdb

Okay, I guess WPA doesn’t like those slashes, but that doesn’t make sense because I signed off on the switch to lld-link and I know that I tested WPA before doing that so what happened…

What happened is that the 17134 version of WPA had shipped. I had tested the lld-link’s output with WPA 16299 and that had worked. What perfect timing! The new linker and new WPA were incompatible.

I reverted my copy of WPA so I could continue the investigation (xcopy from a machine with the older version works fine) and filed an lld-link bug which the team promptly fixed. I’ll be able to upgrade back to WPA 17134 when M69 ships, built with the fixed linker.

WMI

WMI, the trigger for the hangs, is Windows Management Instrumentation and it is something that I know very little about. I found that somebody had hit problem with high CPU use in WmiPrvSE.exe inside of perfproc!GetProcessVaData in 2014 or earlier, but they didn’t leave enough information to be useful. At this point I made the mistake of trying to figure out what crazy WMI query could be so expensive that it would cause gmail to hang for seconds at a time. I roped in some experts and wasted a bunch of time trying to find the magical query. I recorded Microsoft-Windows-WMI-Activity in my ETW traces, I experimented with powershell to find all of the Win32_Perf queries and went down a few other rabbit holes that are too tedious to share. I eventually found that the Win32_PerfRawData_PerfProc_ProcessAddressSpace_Costly counter, invokable through a single line of powershell, would trigger the hang in gmail:

measure-command {Get-WmiObject -Query “SELECT * FROM Win32_PerfFormattedData_PerfProc_ProcessAddressSpace_Costly”}

I then went down even more rabbit holes because of the counter name (“Costly”? really?) and because this counter appears and disappears based on factors that I don’t understand.

But the details of WMI didn’t really matter. WMI wasn’t doing anything wrong – not really – it was just scanning memory. Writing my own scanning code was far more useful.

Chores for Microsoft

Chrome has landed a mitigation, and all the remaining work items are on Microsoft

- Speed up the scanning of CFG regions – okay, this one is done

- Free up CFG memory when executable memory is released – at least for the 256 KiB aligned case, that’s easy

- Consider a flag to allow allocating executable memory without CFG memory, or use PAGE_TARGETS_INVALID for this purpose. Note that Windows Internals Part 1 7th Edition says “only [CFG] pages with at least one bit set {1,X} need to be committed” – if Windows 10 implemented this then PAGE_TARGETS_INVALID (now used by v8) would avoid committing memory

- Fix vmmap’s calculation of page tables for processes with lots of CFG commit

Code updates

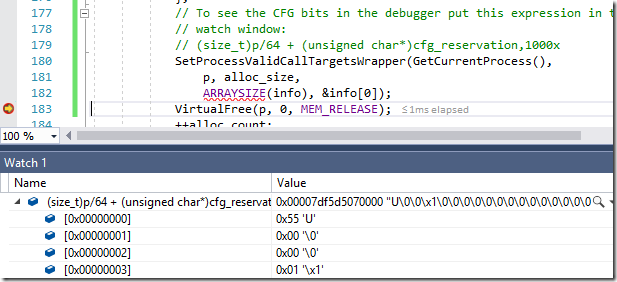

I updated my code samples – especially VAllocStress. VAllocStress now includes twenty lines of code that demonstrates how to find the CFG reservation in your process. I also added test code that uses SetProcessValidCallTargets to validate the meaning of the CFG bits, and to demonstrate the tricks needed to call it successfully (hint: calling it through GetProcAddress will probably hit a CFG violation!).

Hacker News discussion is here.

Reddit discussion is here.

Wouldn’t this whole abstruse, irrelevant, boring issue be solved if you just used Linux? Blah.

I use Linux (Ubuntu 18.04) on core 2 duo laptop with 4GB RAM.

Bluetooth daemon goes rogue at times and consumes 100% CPU.

Entire system becomes unresponsive, event the mouse pointer does not move.

Switching to virtual terminal takes minutes. Once on virtual terminal (which is text only interface) typing on keyboard results in many seconds of delay before characters are shown on the screen. It takes 15 minutes to finally find and kill the rogue daemon.

This is NOT the better experience than Windows.

Windows usually brings up the task manager within seconds and allows me to kill misbehaving process within first minute of it going rogue.

Linux might have great performance as a server when there us no local user input required but as a desktop workstation it is garbage.

>(Ubuntu 18.04)

No, you are using pretend Linux. Ubuntu is just as much garbage as Windows.

So now not every Linux is Linux. How about a suggestion on which Linux is Linux? Tried so far: Debian and derivatives, centos, Ubuntu and friends, arch and derivatives, Slackware, void, puppy, fedora.

They all behave the same when under heavy load – UI is unresponsive for minutes a time and even virtual terminal doesn’t respond to keystrokes for random period of time.

> Bluetooth daemon goes rogue at times and consumes 100% CPU.

You must have a DELL laptop as there was an unfixed bug with bluetooth with systemd and stuff (please keep systemd rants off this thread).

Yes, that particular problem is on Dell. Still, if Linux was so much better then one process should not be able to make the system unusable for minutes. I don’t care about systemd vs sysv vs BSD init, none of that should have any impact on ability of the system to handle basic multitasking. Remember, I am responding to the claim that Linux does not have unresponsive UI.

According to his “About” page, his job is to work “on Chrome for Windows”… so… that’s why he uses Windows.

No, it would be solved if *everyone* used Linux. Good luck with that!

Undoubtedly this particular problem would be avoided on Linux, but other problems would be found instead. Linux is great, and perf in particular is an amazing tool. ETW and WPA are also amazing tools and I enjoy using them to improve the performance of Chrome for our many millions of customers on Windows.

Awesome articles! Fun to read.

This might explain Windows getting mostly blown out by Linux in Phoronix’ Threadripper benchmarks.

I think benchmark results can be very misleading. Most people don’t know what they are benchmarking or what their tests are actually doing and what tes outcomes actually show.

I have recently compared the same 3 benchmark tests with fastest possible workload, CPU bound workload and memory bound workload and results were very different each time.

None of those tests took into account useability of the system during the tests.

Is it better to finish the operation in 20 seconds and lock up the system during that 20 seconds or finish in 45 seconds but allow system to do other things during that time?

It seems most people these days go for 20 seconds option but in case of desktop system 45 second option might be better most of the time.

What if difference is smaller? Lock up the system for 20 secs instead of running for 22 secs with responsive system.

I know Windows used to be more responsive under heavy load in 2000/XP days but possibly slower in benchmarks. Linux seems to always win in benchmarks but I always have poor user experience with Linux UI.

What is the point of all these CPU cores that I pay extra money for if end result is the system that prioritieses most busy process over user input? Isn’t that just giving more bullets to whoever shoots the fastest? Malware keeps the system busy so it gets most of the system resources?

Lies, damn lies, statistics and benchmarks.

But seriously, when benchmarking desktop system there should be user experience factor included in every test.

When benchmarking servers, go ahead and ignore local UI, there shoul be none, but if you have local user and you don’t respond to input for more than 1 sec then test should be considered as failed.

Great read, tx!

Can’t even remember few article like this.

This locking unfairness is exactly what happens with the Python GIL, or at least it used to happen in 2.7.x and earlier. There have been some changes which I have not evaluated a few years back.

It’s an oversimplification to suggest that unfair locks are always bad. (Maybe the name carries misleading connotations.) Rather, you have to make a choice between overhead and fairness. Lower overhead is *always* valuable, but fairness is only valuable if the lock is contended. If your locks are heavily contended, you likely have bigger problems than fairness, and might need a deeper change than just switching to a fair lock. (I don’t fundamentally disagree with the points that Linus makes in the linked messages; I’m just giving different weights to performance and predictability under stress.)

It’s also an oversimplification to conclude that “Windows’ locks are unfair”. The Windows kernel has both fair and unfair locks, and developers (ideally) select the right tool for the job. For example, KeAcquireSpinLock is unfair, while KeAcquireInStackQueuedSpinLock is fair. The former is the default and is more commonly used; developers will typically only reach for the latter if its additional overhead proves necessary when benchmarking a particular workload.

Source & disclaimer: I’m a developer on the Windows team.

Well, I never said unfair locks are always bad. I linked to an explanation of their advantages (Joe Duffy’s article).

That said… most of the higher overhead of fair locks only happens when they are contended, correct? So fairness only costs when it is valuable.

Thanks for the clarification on Windows locks types. Critical sections and push locks seem to be the main ones that I see. I hope Windows has fair locks other than spin locks – I have an entire article about just some of the excessive spins I’ve seen:

What’s your take on VirtualQuery’s use of locks? An unfair lock was selected, and that worked badly. The scanning is faster now but still takes ~200 ms on many processes – too long to hog a lock. A fair spin lock would have been better, but would have left Chrome spinning for multiple ms. Do you think it’s lock choice will be changed?

Again, thanks for the insights.