The behavior of the Windows scheduler changed significantly in Windows 10 2004 (the April 2020 release of Windows), in a way that will break a few applications. There appears to have been no announcement, and the documentation has not been updated. This isn’t the first time this has happened, but this change seems bigger than last time. So far I have found three programs that hit problems because of this silent change.

The short version is that calls to timeBeginPeriod from one process now affect other processes less than they used to. There is still an effect, and thread delays from Sleep and other functions may be less consistent than they used to be (see the [Updated] section below), but in general processes are no longer affected by other processes calling timeBeginPeriod.

I think the new behavior is an improvement, but it’s weird, and it deserves to be documented. Fair warning – all I have are the results of experiments I have run, so I can only speculate about the quirks and goals of this change.

Update, 2021: this change is acknowledged now in the timeBeginPeriod documentation.

If any of my conclusions are wrong then please let me know and I will update this.

Timer interrupts and their raison d’être

First, a bit of operating-system design context. It is desirable for a program to be able to go to sleep and then wake up a little while later. This actually shouldn’t be done very often – threads should normally be waiting on events rather than timers – but it is sometimes necessary. And so we have the Windows Sleep function – pass it the desired length of your nap in milliseconds and it wakes you up later, like this:

Sleep(1);

It’s worth pausing for a moment to think about how this is implemented. Ideally the CPU goes to sleep when Sleep(1) is called, in order to save power, so how does the operating system (OS) wake your thread if the CPU is sleeping? The answer is hardware interrupts. The OS programs a timer chip to trigger an interrupt, which wakes up the CPU so that the OS can schedule your thread.

The WaitForSingleObject and WaitForMultipleObjects functions also have timeout values and those timeouts are implemented using the same mechanism.

If there are many threads all waiting on timers then the OS could program the timer chip with individual wakeup times for each thread, but this tends to result in threads waking up at random times and the CPU never getting to have a long nap. CPU power efficiency is strongly tied to how long the CPU can stay asleep (8+ ms is apparently a good number), and random wakeups work against that. If multiple threads can synchronize or coalesce their timer waits then the system becomes more power efficient.

There are lots of ways to coalesce wakeups but the main mechanism used by Windows is to have a global timer interrupt that ticks at a steady rate. When a thread calls Sleep(n) then the OS will schedule the thread to run when the first timer interrupt fires after the time has elapsed. This means that the thread may end up waking up a bit late, but Windows is not a real-time OS and it actually cannot guarantee a specific wakeup time (there may not be a CPU core available at that time anyway) so waking up a bit late should be fine.

The interval between timer interrupts depends on the Windows version and on your hardware but on every machine I have used recently the default interval has been 15.625 ms (1,000 ms divided by 64). That means that if you call Sleep(1) at some random time then you will probably be woken sometime between 1.0 ms and 16.625 ms in the future, whenever the next interrupt fires (or the one after that if the next interrupt is too soon).
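The tick-based rule in the last two paragraphs can be modelled in a few lines. This is a simplified sketch, not the Windows implementation. It assumes interrupts fire at exact multiples of a fixed interval starting at time zero, and the helper names (`wake_time_ms`, `count_wakeups`) are hypothetical. It shows both the roughly 1.0 to 16.625 ms range for Sleep(1) and how rounding deadlines up to the next tick coalesces wakeups.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <set>
#include <vector>

// Default interrupt interval discussed above: 1,000 ms / 64.
constexpr double kDefaultIntervalMs = 1000.0 / 64.0;  // 15.625

// Time at which a thread that calls Sleep(delay_ms) at call_ms is woken,
// assuming it runs at the first interrupt at or after call_ms + delay_ms.
double wake_time_ms(double call_ms, double delay_ms, double interval_ms) {
  assert(interval_ms > 0.0);
  const double deadline = call_ms + delay_ms;
  return std::ceil(deadline / interval_ms) * interval_ms;
}

// Number of distinct CPU wakeups needed to serve a set of absolute deadlines
// when every deadline is rounded up to the next interrupt.
std::size_t count_wakeups(const std::vector<double>& deadlines_ms, double interval_ms) {
  std::set<double> ticks;  // each distinct tick is one wakeup
  for (double d : deadlines_ms) {
    ticks.insert(wake_time_ms(d, 0.0, interval_ms));
  }
  return ticks.size();
}
```

With the default interval, Sleep(1) issued at 14.625 ms returns at the 15.625 ms tick (a 1 ms wait), while the same call issued at 15.0 ms waits until 31.25 ms. The deadlines {1, 2, 3, 17, 18} ms collapse into two wakeups at 15.625 ms and 31.25 ms. With a 0.5 ms interval they would need five.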

In short, it is the nature of timer delays that (unless a busy wait is used, and please don’t busy wait) the OS can only wake up threads at a specific time by using timer interrupts, and a regular timer interrupt is what Windows uses.

Some programs (WPF, SQL Server, Quartz, PowerDirector, Chrome, the Go runtime, many games, etc.) find this much variance in wait delays hard to deal with, but luckily there is a function that lets them control this. timeBeginPeriod lets a program request a smaller timer interrupt interval by passing in the desired interval in milliseconds. There is also NtSetTimerResolution, which allows setting the interval with sub-millisecond precision, but that is rarely used and never needed so I won’t mention it again.

Decades of madness

Here’s the crazy thing: timeBeginPeriod can be called by any program and it changes the timer interrupt interval, and the timer interrupt is a global resource.

Let’s imagine that Process A is sitting in a loop calling Sleep(1). It shouldn’t be doing this, but it is, and by default it is waking up every 15.625 ms, or 64 times a second. Then Process B comes along and calls timeBeginPeriod(2). This makes the timer interrupt fire more frequently and suddenly Process A is waking up 500 times a second instead of 64 times a second. That’s crazy! But that’s how Windows has always worked.

At this point if Process C came along and called timeBeginPeriod(4) this wouldn’t change anything – Process A would continue to wake up 500 times a second. It’s not last-call-sets-the-rules, it’s lowest-request-sets-the-rules.

To be more specific, whichever still-running program has requested the smallest timer interrupt interval in an outstanding call to timeBeginPeriod gets to set the global timer interrupt interval. If that program exits or calls timeEndPeriod then the new minimum takes over. If a single program called timeBeginPeriod(1) then that is the timer interrupt interval for the entire system. If one program called timeBeginPeriod(1) and another program then called timeBeginPeriod(4) then the 1 ms timer interrupt interval would be the law of the land.
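The lowest-request-wins rule can be captured in a toy model. This is a sketch of the observable behavior described here, not of how Windows actually tracks requests. The class and method names are hypothetical, and real-world quirks are ignored.

```cpp
#include <cassert>
#include <set>

// Interval used when no process has an outstanding request.
constexpr double kNoRequestIntervalMs = 15.625;

// Toy model of the global interrupt interval: the smallest outstanding
// request wins, and the default applies when there are none.
class GlobalTimerModel {
 public:
  // Analogous to timeBeginPeriod(period_ms) from any process.
  void begin_period(unsigned period_ms) { requests_.insert(period_ms); }

  // Analogous to timeEndPeriod(period_ms), or to the requesting process exiting.
  // Returns false if there was no matching outstanding request.
  bool end_period(unsigned period_ms) {
    auto it = requests_.find(period_ms);
    if (it == requests_.end()) return false;
    requests_.erase(it);  // remove one matching request, not every copy
    return true;
  }

  // Current global interrupt interval in milliseconds.
  double interval_ms() const {
    return requests_.empty() ? kNoRequestIntervalMs
                             : static_cast<double>(*requests_.begin());
  }

 private:
  std::multiset<unsigned> requests_;  // outstanding requests, smallest first
};
```

The Process A/B/C scenario above plays out as follows. B's request for 2 ms sets the interval, C's request for 4 ms changes nothing, and once B ends its request C's 4 ms takes over. According to the experiments later in the post, the global interval still follows this rule on Windows 10 2004. What changed is how sleeps in other processes are scheduled.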

This matters because a high timer interrupt frequency – and the associated high-frequency of thread scheduling – can waste significant power, as discussed here.

One case where timer-based scheduling is needed is when implementing a web browser. The JavaScript standard has a function called setTimeout which asks the browser to call a JavaScript function some number of milliseconds later. Chromium uses timers (mostly WaitForSingleObject with timeouts rather than Sleep) to implement this and other functionality. This often requires raising the timer interrupt frequency. In order to reduce the battery-life implications of this Chromium has been modified recently so that it doesn’t raise the timer interrupt frequency above 125 Hz (8 ms interval) when running on battery.

timeGetTime

timeGetTime (not to be confused with GetTickCount) is a function that returns the current time, as updated by the timer interrupt. CPUs have historically not been good at keeping accurate time (their clocks intentionally fluctuate to avoid being FM transmitters, and for other reasons) so they often rely on separate clock chips to keep accurate time. Reading from these clock chips is expensive so Windows maintains a 64-bit counter of the time, in milliseconds, as updated by the timer interrupt. This counter is stored in shared memory so any process can cheaply read the current time from there, without having to talk to the timer chip. timeGetTime calls ReadInterruptTick which at its core just reads this 64-bit counter. Simple!

Since this counter is updated by the timer interrupt we can monitor it and find the timer interrupt frequency.

The new undocumented reality

With Windows 10 2004 (the April 2020 release) some of this quietly changed, but in a very confusing way. I first heard about this through reports that timeBeginPeriod didn’t work anymore. The reality was more complicated than that.

A bit of experimentation gave confusing results. When I ran a program that called timeBeginPeriod(2) then clockres showed that the timer interval was 2.0 ms, but a separate test program with a Sleep(1) loop was only waking up about 64 times a second instead of the 500 times a second that it would have woken up under previous versions of Windows.

It’s time to do science

I then wrote a pair of programs which revealed what was going on. One program (change_interval.cpp) just sits in a loop calling timeBeginPeriod with intervals ranging from 1 to 15 ms. It holds each timer interval request for four seconds, and then goes to the next one, wrapping around when it is done. It’s fifteen lines of code. Easy.
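The loop described here can be sketched as follows. This is a hypothetical reconstruction, not the author's change_interval.cpp. The callbacks stand in for timeBeginPeriod, Sleep and timeEndPeriod so the logic runs anywhere, and the `passes` parameter replaces the real program's endless wrap-around so the sketch terminates.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// The intervals cycled through by the test: 1 ms to 15 ms.
std::vector<unsigned> interval_schedule() {
  std::vector<unsigned> intervals;
  for (unsigned ms = 1; ms <= 15; ++ms) intervals.push_back(ms);
  return intervals;
}

// Hypothetical sketch of the change_interval loop. Each callback stands in
// for one Windows function: begin_period for timeBeginPeriod, sleep_ms for
// Sleep, end_period for timeEndPeriod.
void run_change_interval(const std::function<void(unsigned)>& begin_period,
                         const std::function<void(unsigned)>& sleep_ms,
                         const std::function<void(unsigned)>& end_period,
                         int passes) {
  assert(passes >= 0);
  for (int pass = 0; pass < passes; ++pass) {
    for (unsigned interval : interval_schedule()) {
      begin_period(interval);  // request this interrupt interval
      sleep_ms(4000);          // hold the request for four seconds
      end_period(interval);    // release it before moving to the next one
    }
  }
}
```

On Windows, the three callbacks would wrap timeBeginPeriod, Sleep and timeEndPeriod. timeBeginPeriod and timeEndPeriod are declared via <windows.h> (timeapi.h) and live in winmm.lib.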

The other program (measure_interval.cpp) runs some tests to see how much its behavior is altered by the behavior of change_interval.cpp. It does this by gathering three pieces of information.

- It asks the OS what the current global timer resolution is, using NtQueryTimerResolution.

- It measures the precision of timeGetTime by calling it in a loop until its return value changes. When it changes then the amount it changed by is its precision.

- It measures the delay of Sleep(1) by calling it in a loop for a second and counting how many calls it can make. The average delay is then one second divided by the number of iterations.
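The two timing measurements in this list can be sketched portably. This approximates the technique, not the source of measure_interval.cpp. `std::this_thread::sleep_for` stands in for Sleep(1), and `counter_step` accepts any counter-reading callable in place of timeGetTime. The NtQueryTimerResolution query is left out because it is Windows-only.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>
#include <thread>

// Precision of a counter: spin until its value changes and report the jump.
// Assumes the counter does eventually change; otherwise this never returns.
template <typename ReadCounter>
std::uint64_t counter_step(ReadCounter read) {
  const std::uint64_t first = read();
  std::uint64_t next = read();
  while (next == first) next = read();
  return next - first;
}

// Average delay of a 1 ms sleep: count calls made within a time window,
// then divide the actual elapsed time by the number of calls.
double average_sleep_delay_ms(std::chrono::milliseconds window) {
  assert(window.count() > 0);
  using Clock = std::chrono::steady_clock;
  const auto start = Clock::now();
  std::uint64_t calls = 0;
  while (Clock::now() - start < window) {
    std::this_thread::sleep_for(std::chrono::milliseconds(1));
    ++calls;
  }
  const std::chrono::duration<double, std::milli> elapsed = Clock::now() - start;
  return elapsed.count() / static_cast<double>(calls);
}
```

Dividing by the measured elapsed time, rather than by exactly one second, avoids overstating the rate when the last sleep overruns the window.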

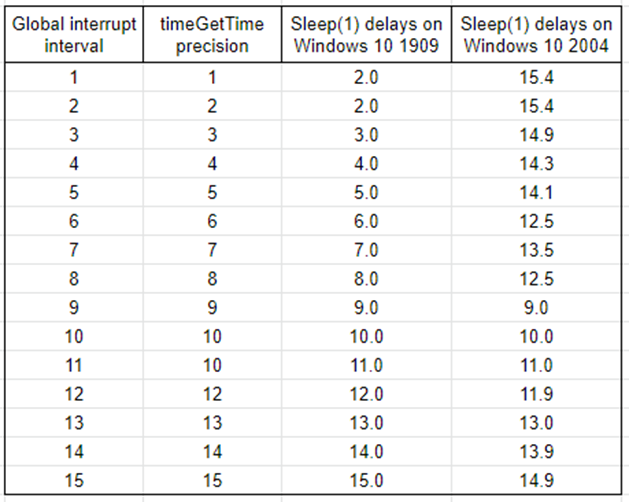

@FelixPetriconi ran the tests for me on Windows 10 1909 and I ran the tests on Windows 10 2004. The results (cleaned up to remove randomness) are shown here:

What this means is that timeBeginPeriod still sets the global timer interrupt interval on all versions of Windows. We can tell from the results of timeGetTime() that the interrupt fires on at least one CPU core at that rate, and the time is updated. Note also that the 2.0 on row one for 1909 was 2.0 on Windows XP, then 1.0 on Windows 7/8, and is apparently back to 2.0? I guess?

However the scheduler behavior changes dramatically in Windows 10 2004. Previously the delay for Sleep(1) in any process was simply the same as the timer interrupt interval (with an exception for timeBeginPeriod(1)), giving a graph like this:

In Windows 10 2004 the mapping between timeBeginPeriod and the sleep delay in another process (one that didn’t call timeBeginPeriod) is peculiar:

Why?

Implications

[Updated] The section below was added after publishing and then updated several times.

As was pointed out in the reddit discussion, the left half of the graph seems to be an attempt to simulate the “normal” 15.625 ms delay as closely as possible given the available precision of the global timer interrupt. That is, with a 6 millisecond interrupt interval they delay for ~12 ms (two cycles) and with a 7 millisecond interrupt interval they delay for ~14 ms (two cycles) – that matches the data fairly well. However what about with an 8 millisecond interrupt interval? They could sleep for two cycles but that would give an average delay of 16 ms, and the measured value is more like 14.5 ms.

Closer analysis shows that when another process has called timeBeginPeriod(8), Sleep(1) returns after one interval about 20% of the time and after two intervals the rest of the time. That mix gives an average delay of about 0.2 × 8 + 0.8 × 16 ≈ 14.4 ms, close to the measured 14.5 ms. This variation in the handling of Sleep(1) happens sometimes at other timer interrupt intervals but is most consistent when the interval is set to 8 ms.
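The arithmetic in the last two paragraphs can be checked with a small model. `cycles_below_default` is one hypothetical reading of the reddit suggestion: use the largest whole number of intervals that does not exceed 15.625 ms. It reproduces the 6 ms and 7 ms figures but predicts a single 8 ms interval at 8 ms, which is why the observed one-or-two-interval mix is modelled separately.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical reading of the "emulate 15.625 ms" idea: the largest whole
// number of global intervals that does not exceed the default interval.
int cycles_below_default(double interval_ms) {
  assert(interval_ms > 0.0);
  const int cycles = static_cast<int>(std::floor(15.625 / interval_ms));
  return cycles < 1 ? 1 : cycles;  // always wait at least one interval
}

// Expected Sleep(1) delay when a fraction p_one of calls returns after one
// interval and the remainder after two intervals.
double mixed_expected_delay_ms(double interval_ms, double p_one) {
  assert(p_one >= 0.0 && p_one <= 1.0);
  return p_one * interval_ms + (1.0 - p_one) * 2.0 * interval_ms;
}
```

For example, `cycles_below_default(6.0) * 6.0` gives 12 ms and `mixed_expected_delay_ms(8.0, 0.2)` gives about 14.4 ms.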

This is all very weird, and I don’t understand the rationale, or the implementation. The intentional inconsistency in the Sleep(1) delays is particularly worrisome. Maybe it is a bug, but I doubt it. I think that there is complex backwards compatibility logic behind this. But, the most powerful way to avoid compatibility problems is to document your changes, preferably in advance, and this seems to have been slipped in without anyone being notified.

This behavior also seems to apply to CreateWaitableTimerEx and its CREATE_WAITABLE_TIMER_HIGH_RESOLUTION flag (undocumented when this was first written, but documented now), based on the quick-and-dirty waitable timer tests that you can find here (requires Windows 10 1803 or higher).

Most programs will be unaffected. If a process wants a faster timer interrupt then it should be calling timeBeginPeriod itself. That said, here are the problems that this could cause:

- A program might accidentally assume that Sleep(1) and timeGetTime have similar resolutions, and that assumption is broken now. But, such an assumption seems unlikely.

- A program might depend on a fast timer resolution and fail to request it. There have been multiple claims that some games have this problem and there is a tool called Windows System Timer Tool and another called TimerResolution 1.2 that “fix” these games by raising the timer interrupt frequency. Those fixes presumably won’t work anymore, or at least not as well. Maybe this will force those games to do a proper fix, but until then this change is a backwards compatibility problem.

- A multi-process program might have its master control program raise the timer interrupt frequency and then expect that this would affect the scheduling of its child processes. This used to be a reasonable design choice, and now it doesn’t work. This is how I was alerted to this problem. The product in question now calls timeBeginPeriod in all of their processes so they are fine, thanks for asking, but their software was misbehaving for several months with no explanation. Since I wrote this article I have received reports of three other multi-process programs (two within Google, two outside) with the same problem (one mentioned here). It’s an easy fix, but a painful investigation to understand what has gone wrong.
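The fix described in the last item is for every process to make its own request. A hypothetical scope guard like the one below keeps each timeBeginPeriod paired with a matching timeEndPeriod. The callbacks stand in for the real functions so the sketch runs anywhere, and for brevity it ignores the MMRESULT that timeBeginPeriod returns.

```cpp
#include <cassert>
#include <functional>
#include <utility>

// Hypothetical RAII guard: requests a timer period on construction and
// releases the same period on destruction. On Windows, begin and end would
// wrap timeBeginPeriod and timeEndPeriod.
class ScopedTimerPeriod {
 public:
  using PeriodFn = std::function<void(unsigned)>;

  ScopedTimerPeriod(unsigned period_ms, const PeriodFn& begin, PeriodFn end)
      : period_ms_(period_ms), end_(std::move(end)) {
    assert(begin && end_);
    begin(period_ms_);  // e.g. timeBeginPeriod(period_ms)
  }

  ~ScopedTimerPeriod() { end_(period_ms_); }  // e.g. timeEndPeriod(period_ms)

  ScopedTimerPeriod(const ScopedTimerPeriod&) = delete;
  ScopedTimerPeriod& operator=(const ScopedTimerPeriod&) = delete;

 private:
  unsigned period_ms_;
  PeriodFn end_;
};
```

Each child process would create one of these for as long as it needs the faster interrupt, rather than relying on the parent's request.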

Sacrifice

The change_interval.cpp test program only works if nothing has requested a higher timer interrupt frequency. Since both Chrome and Visual Studio have a habit of doing this I had to do most of my experimentation with no access to the web while writing code in notepad. Somebody suggested Emacs but wading into that debate is more than I’m willing to do.

I’d love to hear more about this from Microsoft, including any corrections to my analysis. Discussions:

- Twitter announcement is here

- Hacker News and Hacker News redux.

I think some graphics are missing. Above “Implications”.

Yep. Sorry about that. They are fixed now.

These appear to be exact same graph though..?

Apparently the unit tests for this blog post failed. I’ve fixed the graphs now, for realz this time.

I guess it’s simply skipping on waking up your thread until its (local) timeBeginPeriod setting is going to be greater than the next multiple of the global timeBeginPeriod setting. So if one process has set 150Hz, and another has the default 64Hz it will only wake up every second time (since you can fit two periods of the global wakeup in the 64Hz). This is probably a good way to save power, I doubt it was done to reduce the effect one process has on another.

Missing images between “a graph like this:” and “In Windows 10 2004”,

and “is bizarre:” and “The exact shape”

Damn. Thanks. I’ll fix that right away.

Looks like you accidentally repeated the same graph.

Fixed. Thanks.

You might want to disable WordPress “pingback” feature, as it seems abused. WTF is that, it seems bots are copying content, swapping random words and reposting on some generic looking sites..? What’s even the purpose of this?

That sounds like good advice. I globally unchecked these two settings in Settings->Discussions:

– Attempt to notify any blogs linked to from the article

– Allow link notifications from other blogs (pingbacks and trackbacks)

I don’t know if the second one is needed, but it seemed like a good idea.

Yea seems like 99% of all the Pingbacks on your blog are spam. I suggest turning off 100% and removing all old ones throughout your blog.

Page @ https://www.ampercent.com/delete-spam-trackbacks-pingbacks-wordpress-database/11950/ and https://www.shoutmeloud.com/delete-all-trackbacks-on-wordpress-blog-disable-self-pings.html may help?

Okay, pingbacks deleted. I think about 15% of the comments were spammy pingbacks, or pingbacks anyway (checking which ones were spam was too much work).

On a modern machine, could the OS apply different interrupt frequencies on different cores? If one process requests a faster timer, could the OS set one core to that interval and tweak the process’s affinity to prefer that core? That could affect other processes if they happen to get scheduled on that core while the greedy process is sleeping. Thus the apparent effect on another process could vary based on the myriad factors considered by the scheduler, but in general you’d expect them to observe something closer to the default interval.

Actually that sounds like this is an adaptation for ARM processors where there are low-performance cores in addition to high-performance cores

Whether that helps depends a lot on the CPU design (how isolated the power domains of different processors are) and other factors too complex for me to want to analyze. I guess the short answer is “yes”, but with lots of disclaimers and provisos – thread scheduling is hard.

Clearly you’ve shown some new behavior. Since the KPROCESS and similar structures for the 2004 edition have additions specifically for time management, I have no trouble believing that what you’ve found is specifically new for 2004. I must add this to my ever-growing list of things to look into, but where is the Great Rule Change?

The rule has always been as you say: “If a process wants a faster timer interrupt then it should be calling timeBeginPeriod itself”, though I would add that busy programmers do in practice need to be reminded to call timeEndPeriod when they no longer need the finer resolution. When I say “always”, I mean all the way back to version 3.10, though the rule was then only in principle since the interrupt period is fixed at startup. But the rule applied for real as early as version 3.50.

The rule had to be established early (even if the implementation was for many years rudimentary) because as much as an operating system for programs in general wants to give each program the illusion of owning the computer, delivering this ideal for access to an interval timer is all but impossible. Even if each processor has its own timer, you can’t expect that Windows will reprogram the timer each time it switches the processor to a thread from a different process. A timer’s interrupts are anyway how the system itself learns the passing of time without having to keep asking. These interrupts are almost necessarily a shared resource. They need to be frequent enough to meet the most demanding of realistic expectations from programs, but there’s a balance since interrupts that are too frequent have their own deleterious effects on performance all round.

Perhaps I’ve been looking at this for too long, but from this perspective it hardly seems like “decades of madness” that programs are each given the means to tell Windows how fine a resolution they desire and Windows sets the interrupt period to meet the finest requirement. If you need that your wait for 10ms be 10, not 11, then you tell Windows you want 1ms resolution. If you can tolerate that your 10ms may be 15, then you tell Windows you’re OK with 5ms resolution. You may get finer resolution but you’ve indicated that you don’t require finer resolution.

What is mad is to depend on the precise implementation. Yes, Microsoft has written that the one process’s request for the finest resolution sets it globally, but that’s just Microsoft presenting an implementation detail as helpful background. They make a rod for their own backs by skimping on the documentation, making it inevitable that programmers end up grasping at every implementation detail they can find. Against this is that Microsoft’s technical writers, such as they are, perhaps assume that programmers read the documentation judiciously and won’t think a design is reasonable if it depends on a detail that the programmer otherwise describes as crazy.

As you note, the new behaviour you see looks to be an attempt at improvement. A presumption in the implementation so far is that programs won’t be troubled if the timer resolution is finer than they’ve asked for. Put aside whether they can be if well written. There is plausibly some waste in making a thread ready earlier than its process has indicated is tolerable. A push to give each thread an average delay that more closely aligns with its process’s expressed expectation should therefore not surprise. I expect, though, that compatibility considerations apply. A programmer that plays the game of calling timeBeginPeriod must know that other programs may play too. Such programs may have coordinated. They may better be left alone. So I should not be surprised if waste elimination is sought only (or first) for processes that do not play the game. Well, we’ll know once someone does the research.

This brings me to your graphs. Your graph of old behaviour can be explained very well – indeed, by the simple model you present in which the caller of Sleep(1) becomes ready for return at the first timer interrupt that occurs 1ms or more after making the call. Assume the interrupt period is constant, having been set by the other program and not changed by any other. The first Sleep(1) is random with respect to these interrupts. All the remainder are called soon after an interrupt. Over a long enough run, you measure the average time in calls to Sleep(1) that are synchronised with interrupts. Mostly then, you just measure the interrupt period. When the interrupt period is 1ms, the time you spend just to call Sleep(1) means you’ll still be asleep for the first interrupt after the one that woke you but you’ll catch the second, and your experiment therefore measures 2ms.
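The model in this comment can be simulated directly. This is a minimal sketch with several simplifying assumptions: a perfectly regular interrupt, a fixed per-iteration overhead, a first call that is also synchronised with an interrupt, and the pre-2004 rule that Sleep(1) returns at the first interrupt at least 1 ms after the call. The function name and the 0.01 ms overhead used in the example are illustrative.

```cpp
#include <cassert>
#include <cmath>

// Pre-2004 model: Sleep(1) returns at the first interrupt that is at least
// 1 ms after the call. The loop starts on an interrupt and spends overhead_ms
// before each call, so each call is issued just after an interrupt.
double simulated_sleep1_loop_ms(double interval_ms, double overhead_ms, int calls) {
  assert(interval_ms > 0.0 && overhead_ms >= 0.0 && calls > 0);
  double now = 0.0;  // an interrupt fires at t = 0
  for (int i = 0; i < calls; ++i) {
    const double earliest_return = now + overhead_ms + 1.0;
    now = std::ceil(earliest_return / interval_ms) * interval_ms;  // next tick
  }
  return now / calls;  // average time per loop iteration
}
```

With a 1 ms interval and 0.01 ms of overhead the loop averages 2 ms. With 4 ms or 15.625 ms intervals it averages the interval itself. With zero overhead the 1 ms case would average 1 ms, which is why the comment attributes the 2 ms result to the time spent calling Sleep(1).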

Explaining your graph of new behaviour will have to wait for research, but there can’t be any surprise that you don’t get a neat result. Microsoft may be aiming that the process that has not asked for a finer resolution should find that its average time spent in a random Sleep(1) is unaffected by the system’s use of a finer resolution than the default. But your calls to Sleep(1) are not random!

That is a _very_ long comment.

> where is the Great Rule Change?

The great rule change is that the effect of timeBeginPeriod used to work one way, and now it works another, and this difference has not been documented. This broke at least one program, and almost certainly more.

I am hopeful that Microsoft will eventually document this, but I don’t think there is much more for them to say. It would just be good for the official documentation to say what I discovered through experimentation here.

It was a long comment because I wanted to present my case carefully that there is no rule change. What you present as the new rule is what the rule really has been all along. All that you’ve yet shown has changed is an implementation detail that Microsoft will have documented so that programmers should understand that setting the timer resolution has consequences for others and is better done considerately. They won’t have documented it so that programmers start depending on the particulars of those consequences.

True, their documentation is terrible, and so misunderstanding is inevitable. But do you not think programmers bear some responsibility too to think through the consequences of what they depend on? Here, they were being told that in this, of all things, you don’t own the processor. Please be a good citizen. Use no more of this feature than you really do need. How that turns into an invitation to depend on details of the feature’s implementation, I don’t know, and they themselves ought to have been able to see how easily they might tie themselves in knots – as when you yourself describe the old implementation as decades of madness, yet you say that depending on it in some particular way for coordinating processes is a reasonable design.

I’ll add it to my list of things I’ve seen Googlers call reasonable or even elegant but which give me the shivers. Less flippantly, I do agree with you that since Microsoft did document the implementation detail without spelling out that it was not to be depended on and since programmers inevitably will have depended on it, Microsoft ought to bear some cost and write some better documentation. But that’s enough dreaming!

We must be arguing semantics because it is quite clear that the behavior has changed. It hasn’t changed for processes that call timeBeginPeriod(1), but it has changed for any process that doesn’t call timeBeginPeriod(1) running on a system where some other process has.

That’s all I claimed, and that is definitely true. Since the reality of this change is indisputable you must be arguing about whether that counts as a rule change, which seems like an uninteresting argument.

You seem to be arguing that since the old behavior was not documented there was no official “rule” and therefore the change cannot be a “rule” change. I disagree with that analysis, and I also think you could present that argument much more clearly if you condensed it down to a single sentence, instead of a mini blog post. See the first sentence of this paragraph for an example.

I know of one program that was broken and had to be modified because of this change. By definition this means that it was a breaking change, and I’ll leave it up to the Talmudic scholars to decide whether “the great rule change” is an accurate description or not.

I completely agree with Geoff. The behavior changed. But both behaviors, the old one as well as the new one, are consistent with the docs, and the new one is (additionally) even consistent with what an unsuspecting programmer would expect. And I didn’t verify Geoff’s claim, but I believe him when he says:

> The rule has always been as you say: “If a process wants a faster timer interrupt then it should be calling timeBeginPeriod itself”

I think we’re just debating semantics here. The behavior clearly changed. It changed enough to break several programs. That is unusual because Microsoft tries very hard to maintain backwards compatibility. I think the change is justifiable because of the (presumed) power benefits. As I said earlier:

I’ll leave it up to the Talmudic scholars to decide whether “the great rule change” is an accurate description or not.

Very interesting read!

I think I’ve found one quite popular old program that uses these features. I’ve been using GlovePie for many, many years and it’s been working just fine from Windows 7 to Windows 10 1909. But after version 2004 something changed. GlovePie lets you set how fast the script is run (from 0 Hz to 1000 Hz) and now it can only run scripts at 64 Hz. So when running scripts that require smooth operation (like mapping head movements to mouse movements) everything is skippy and stuttery. Bummer.

It sounds like GlovePie doesn’t call timeBeginPeriod but was instead relying on other processes to do that for it. I filed a bug in a GlovePIE repo I found:

https://github.com/Ravbug/GlovePIE/issues/1

Did you perhaps test whether program compatibility value selected affects the behavior?

I did not test whether program compatibility settings affect the behavior.

Is there any other idiot who says “Let’s stop using Windows 7”?? :))

I don’t know what your point is, or who you are suggesting is an idiot. Maybe be more specific, and less ad-hominem.

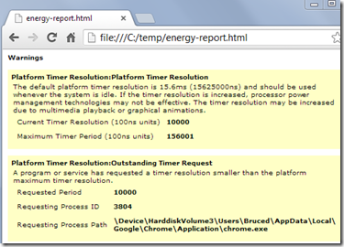

There is another place where you can see that something has changed, which is the powercfg energy report. At first I thought that was just a bug in 2004, but now I think it totally fits your observations. If you have any processes running which request higher-than-default resolution, and you run “powercfg /energy”, the energy report will show warnings (yellow boxes) about each particular process requesting a higher timer resolution and its value. If you scroll down a bit, there is also information about the current global timer resolution of the system.

Prior to 2004, the latter was always following the lowest interval requested by any of the processes above (so in case of increased time resolution, it was also within the ‘warnings’ section). But NOW, the current timer resolution of the system is always reported as 156250 (and is reported as being OK in the ‘information’ section).

Actually, I like this change. The past has shown that many developers unfortunately do not care too much about the implications of globally increasing the timer resolution of the whole system, leading to an increased power consumption of other processes which wouldn’t need the higher resolution at all. It’s good that Windows is applying a brake now to lessen the ‘collateral damage’ caused by this practice.

That’s a good observation about powercfg. Personally I have stopped using it because it just reports the timer frequency at a point in time, which is surprisingly unhelpful.

I agree that this change is for the better. I wish it was documented by Microsoft, but if people find this blog post then maybe even that doesn’t matter.

Thanks for writing this up. The Oculus runtime had been setting timer resolution in our service and depending on the fact that it’s system-wide in VR clients. We’ve been getting reports of all kinds of problems that happened to coincide with major code restructuring at our end (and compounding the issue our corporate windows version was pre-2004), we only just now noticed the timer resolution was to blame.

One note on the article: on first skimming it I saw “Windows 10 2004” and assumed this was a change from before Oculus existed, moving the “(April 2020 release)” to the top of the article would have gotten me to pay attention sooner.

Interesting. Thanks for sharing. I also came across another Google system that had the same sort of dependency. Luckily I was able to recognize what was going on from the ETW trace that they shared and solve their mystery quickly.

Good point on the confusion caused by Windows 10 2004. The naming convention should have been 20-04 or 20.04, but oh well. I updated it to avoid future confusion.

It seems a good solution would be to extend the API to allow processes to specify a resolution along with the interval each time when calling Sleep/setting up timers? i.e. instead of a global change, it just affects each individual call, allowing the OS to use whichever interrupt accuracy fulfills the user requests as well as possible for a non-RT OS? I wonder why it wasn’t done like that?

Each time a process requested a different sleep resolution the OS would potentially have to reprogram the timer interrupt, which is probably not cheap. I think the goal was that those few programs that needed a raised timer interrupt frequency would request it for a while. It was a reasonable idea, but:

1) Those “few” programs turned into a lot, many of them Microsoft products that failed to set a good example

2) Many developers didn’t even know that timeBeginPeriod was a thing so they would suffer with poor timer precision

3) The bleedover between processes was confusing

4) Many programs (the Go runtime, for one) now change the timer interrupt interval quite frequently

Anyway, specifying a precision now would require creating SleepExEx for the extra parameter and I can’t see that happening.

Have you tested this with Windows 10 Game Mode disabled? I wonder if that is the source of this new behavior.

I have no reason to believe that this is anything to do with Game Mode.

I’ve done some tests.

It seems that the call affects a single process (not system, not only threads).

I launched one process per physical core (6 cores, 6 threads), using these properties:

– Process.ProcessorAffinity
– ProcessThread.ProcessorAffinity
– ProcessThread.IdealProcessor

Each process had 2 threads, which measured the actual duration of Thread.Sleep(1).

Calling timeBeginPeriod from one thread changed the result for both threads in that process, but did not affect the other processes.

I tried different combinations (STA/MTA threads, etc.) and the result was always the same.
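For anyone wanting to repeat this kind of measurement, here is a rough sketch of the method in C. It is a POSIX stand-in (clock_nanosleep and clock_gettime in place of Thread.Sleep and a stopwatch), so it illustrates the measurement loop, not Windows timer behavior; on Windows the equivalent would time Sleep(1) with QueryPerformanceCounter. The function names are made up for this example.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <stdint.h>
#include <time.h>

/* Current CLOCK_MONOTONIC time in nanoseconds. */
static int64_t now_ns(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (int64_t)ts.tv_sec * 1000000000LL + ts.tv_nsec;
}

/* Sleep until at least 1 ms from now. Using an absolute deadline means a
   wait interrupted by a signal (EINTR) can simply be restarted. */
static void sleep_at_least_1ms(void) {
    struct timespec deadline;
    clock_gettime(CLOCK_MONOTONIC, &deadline);
    deadline.tv_nsec += 1000000L;
    if (deadline.tv_nsec >= 1000000000L) {
        deadline.tv_sec += 1;
        deadline.tv_nsec -= 1000000000L;
    }
    int rc;
    do {
        rc = clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &deadline, NULL);
    } while (rc == EINTR);
}

/* Time `iterations` requested 1 ms sleeps; report mean and worst case in ms. */
static void measure_sleep(int iterations, double *mean_ms, double *worst_ms) {
    int64_t total_ns = 0, worst_ns = 0;
    for (int i = 0; i < iterations; i++) {
        int64_t start = now_ns();
        sleep_at_least_1ms();
        int64_t elapsed = now_ns() - start;
        total_ns += elapsed;
        if (elapsed > worst_ns) worst_ns = elapsed;
    }
    *mean_ms = (double)total_ns / iterations / 1e6;
    *worst_ms = (double)worst_ns / 1e6;
}
```

Reporting the worst case as well as the mean is useful, because it is the occasional long sleep that shows up as audio glitches or uneven frame pacing.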

What do you think?

That is consistent with my results. And, it makes sense as a policy that Microsoft might implement intentionally. If a process calls timeBeginPeriod then clearly that process should get what it asked for. And, conversely, if a process _doesn’t_ call timeBeginPeriod then it is reasonable for it to be as unaffected as possible.

So, all very sensible. It’s just a pity that it is undocumented.

Of course. All clear, thank you for your effort and contribution.

Pingback: How to change setWaitableTimer accuracy on Windows 10 20H1 and later – Windows Questions

It looks like Microsoft has updated the timeBeginPeriod documentation; it now describes the new implementation introduced in Windows 10 2004.

Oh wow, they did indeed. Thanks for pointing that out. It’s not ideal – it glosses over some of the realities – but it is progress.

https://docs.microsoft.com/en-us/windows/win32/api/timeapi/nf-timeapi-timebeginperiod

Pingback: winapi - Do I have to call timeBeginPeriod before timeSetEvent when using Windows multimedia timers? | ITTone

It is not true that nothing has changed if you have been calling timeBeginPeriod in your program all along.

I am writing a streaming application that used one-shot CreateTimerQueueTimer timers to trigger the sending of UDP packets every few milliseconds. Since Windows 10 2004 this is broken: my timers only fire after 15 ms despite calling timeBeginPeriod(1). I changed it to use timeSetEvent, and now it works again, including on Windows 10 2004 and 20H2.

So since the 2004 update CreateTimerQueueTimer() ignores the resolution defined by timeBeginPeriod.

Unfortunately, timeSetEvent is declared obsolete, with a recommendation to use the (now broken) CreateTimerQueueTimer. On the other hand, timeSetEvent has been declared obsolete since at least 2008 (the earliest mention of its obsolescence I found in a quick Google search) and it is still around. Hopefully it will stay, or Microsoft will fix CreateTimerQueueTimer.

That sounds like a serious problem. Consider creating a repro and filing an issue on Feedback Hub or else at https://github.com/microsoft/Windows-Dev-Performance/issues

Pingback: Windows Timer Resolution change (2004) and Motion Simulators - RowanHick.com

Hi Bruce!

Thank you. Now I understand the frustration of the Telos Alliance’s developers in more depth. This company (and especially its Axia division) specializes in digital audio manipulation (mixing) and transfer. Their audio-over-IP concept is called Livewire. There are other audio-over-IP solutions (e.g. Dante), but they are designed to be more forgiving (to survive more jitter in packet generation and arrival).

Livewire, in its most real-time mode, has an audio packet rate of 200 UDP packets per second, i.e. one packet every 5 milliseconds. The Axia IP Driver makes it possible to have a virtual sound card on a PC that provides audio channels. These channels are accessible through ASIO (Audio Stream Input/Output) or DirectX. Native Livewire streams should never be routed.

Getting the most out of a non-real-time OS like Windows was not too difficult until Windows 10 version 2004 (20H1), but it has proven problematic since. If I interpret material from Axia correctly, the only reliable way of achieving a constant UDP packet transfer rate has been to use the sleep() function. After updating to 20H1, the Axia IP driver just got narcolepsy 🙂
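To see why sleep-based pacing suffers once nobody raises the timer interrupt frequency, here is an idealized model in C. It assumes (my assumption, not Axia's documented design) that the sender can only wake on 15.625 ms timer ticks and that packet n is due at n × 5 ms; the helper names are hypothetical.

```c
#include <stdint.h>

/* Idealized model: the sender wakes only on timer ticks (every tick_us),
   and packet n (n >= 1) is due at n * interval_us. All times in microseconds. */

/* Number of packets whose due time is at or before t_us. */
static int64_t packets_due(int64_t t_us, int64_t interval_us) {
    return t_us / interval_us;
}

/* Packets that must be sent together when waking on tick number k (k >= 1). */
static int64_t burst_at_tick(int64_t k, int64_t tick_us, int64_t interval_us) {
    return packets_due(k * tick_us, interval_us)
         - packets_due((k - 1) * tick_us, interval_us);
}

/* How late packet n leaves if it waits for the first tick at or after its due time. */
static int64_t lateness_us(int64_t n, int64_t tick_us, int64_t interval_us) {
    int64_t due = n * interval_us;
    int64_t sent = (due + tick_us - 1) / tick_us * tick_us; /* round up to a tick */
    return sent - due;
}
```

With those numbers the average still works out to 25 packets per 125 ms (8 ticks), i.e. 200 packets per second, but the packets leave in bursts of 3 or 4, and a packet can be up to 15 ms late, three whole packet intervals.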

Of course, all this is very proprietary, but imagine Earth’s gravitational pull changing every two years. It would be a nightmare for engineers, e.g. in the building industry.

Hi!

Let me also say few words about this…

Windows was never a real-time OS, but compared with others, e.g. Linux, it always let programmers write quality multimedia programs. That has been true since Win3.1, when MMSystem.dll was first introduced. And this “status” of Windows was suddenly broken by the Windows 10 2004 update.

I think there was a lot of multimedia Windows software that relied on 1 ms timer precision: MIDI and audio software, even some control and measurement systems…

All the talk about “other threads/processes can’t get control” is just words, because when we use, for example:

timeSetEvent(1,0,@MsTimerTickHandler,DWORD(Self),TIME_PERIODIC);

the MsTimerTickHandler callback works like an interrupt handler routine. The MMSystem docs described rules for what you can and can’t do in a timer handler.

So in this case the OS provides a real-time extension in this “easy” way.

Programs that use these timer calls are “priority” programs.

When you play a software synthesizer plugin, you care about “minimal delay”, and you certainly don’t care whether other software runs slower or even “freezes” while you record some music in your DAW.

BTW, here is one more example of software that Microsoft broke with their “update”.

From the vendor’s site:

“KARMA Software for Windows is not compatible with Windows 10 version 2004 (May 2020 Update) or later and will not run. The PACE drivers that we have been using are no longer compatible and we are not planning to recompile the software. Therefore, after 18 years of continuous support, Windows 10 version 1909 is the end of the line for this version of the software.”

It was perfect real-time MIDI effects generation software which extended the abilities of KORG and YAMAHA synthesizers.

I’ve just run into an issue where the default timer interval on some very recent Win10 builds seems to be 20 ms, and timeouts that used to return in microseconds are now returning on a 20 ms period!

20 ms is quite long, but has happened historically.

The advice remains the same – if possible, avoid depending on timeouts at all. It is better to wait on events. If timeouts are necessary and if they have to be short, then call timeBeginPeriod.

There’s a new PROCESS_POWER_THROTTLING_IGNORE_TIMER_RESOLUTION option in Windows SDK 22000. It’s for SetProcessInformation (…, PROCESS_POWER_THROTTLING_STATE, …), but I haven’t yet tested what it actually does.

Just as a curious find, and in case anyone else is having performance issues with low latency audio I thought I’d post this. Funnily, this was a result of troubleshooting the Axia IP-driver mentioned earlier by Kristian!

Based on my findings, using timeSetEvent on its own is not enough to give good low-latency performance in DAWs, at least on Win10 21H1. I can see a timer request happening, but the system timer interval stays at ~15 ms (checked using WPR) unless I have a driver that can push it down to ~1 ms, which sadly the Axia driver does not (yet) do. Many of the drivers for hardware audio interfaces that I own do, however, so I set out to find out why. The solution seems to be a call to ExSetTimerResolution (https://docs.microsoft.com/en-us/windows-hardware/drivers/ddi/wdm/nf-wdm-exsettimerresolution), and so I decided to try my hand at writing a dummy driver that I could use to call it when needed.

I simply followed the MS guide for writing a KMDF hello world driver in Visual Studio (https://docs.microsoft.com/sv-se/windows-hardware/drivers/gettingstarted/writing-a-very-small-kmdf--driver), with some bits removed and some added, and ended up with this very simple piece of code. I built a release version for x64, and the result was a driver that, after installation, let me add a device manually through Device Manager -> Action -> Add legacy hardware -> manual installation -> show all devices, yada yada… (I had edited the inf and added a manufacturer name to make it easier to find there). When this device is enabled, the system clock will be set to 1 ms, or to the lowest interval the system supports if that is higher than 1 ms.

If you decide to build this yourself, the device will end up under “System devices” based on the name chosen for the project.

The actual installation of the inf will require test signed drivers to be allowed using the command

Bcdedit.exe -set TESTSIGNING ON

which in turn requires Secure Boot to be switched off in the BIOS. Anyhow, here is the code in case it helps someone. USE AT YOUR OWN RISK, I’m not a developer, just stubborn:

#include <ntddk.h>
#include <wdf.h>
#include <wdm.h>

DRIVER_INITIALIZE DriverEntry;
EVT_WDF_DRIVER_DEVICE_ADD TimerEvtDeviceAdd;
EVT_WDF_DRIVER_UNLOAD TimerEvtWdfDriverUnload;

NTSTATUS DriverEntry(_In_ PDRIVER_OBJECT DriverObject,
                     _In_ PUNICODE_STRING RegistryPath) {
    // NTSTATUS variable to record success or failure
    NTSTATUS status = STATUS_SUCCESS;

    // Allocate the driver configuration object
    WDF_DRIVER_CONFIG config;

    // Initialize the driver configuration object to register the
    // entry point for the EvtDeviceAdd callback, TimerEvtDeviceAdd
    WDF_DRIVER_CONFIG_INIT(&config, TimerEvtDeviceAdd);
    config.EvtDriverUnload = TimerEvtWdfDriverUnload;

    // Finally, create the driver object
    status = WdfDriverCreate(DriverObject, RegistryPath, WDF_NO_OBJECT_ATTRIBUTES, &config, WDF_NO_HANDLE);
    if (status == STATUS_SUCCESS) {
        // Request a 1 ms system timer resolution (10,000 x 100 ns units)
        ExSetTimerResolution(10000, TRUE);
    }
    return status;
}

NTSTATUS TimerEvtDeviceAdd(_In_ WDFDRIVER Driver,
                           _Inout_ PWDFDEVICE_INIT DeviceInit) {
    // We're not using the driver object,
    // so we need to mark it as unreferenced
    UNREFERENCED_PARAMETER(Driver);

    NTSTATUS status;

    // Allocate the device object
    WDFDEVICE hDevice;

    // Create the device object
    status = WdfDeviceCreate(&DeviceInit, WDF_NO_OBJECT_ATTRIBUTES, &hDevice);
    return status;
}

// Remove the timer request when unloading the driver
void TimerEvtWdfDriverUnload(_In_ WDFDRIVER Driver) {
    UNREFERENCED_PARAMETER(Driver);
    // SetResolution = FALSE releases this driver's earlier request;
    // the DesiredTime argument is ignored in that case
    ExSetTimerResolution(0, FALSE);
}

Cheers

Alexander

Unfortunately, the board seems to strip tags; the includes should read:

ntddk.h

wdf.h

wdm.h

enclosed in less-than/greater-than symbols. Perhaps the moderator can change this?

/Alexander

Fixed. The comment editor really is rubbish. I had to use HTML character codes (&lt; and &gt;) to make it work.

I haven’t used timeSetEvent so I can’t really comment on it. I do wonder why you are using ExSetTimerResolution rather than timeBeginPeriod, but maybe there is a good reason. timeBeginPeriod does still change the timer interrupt frequency; just remember that the change is now “hidden” from other processes.

Thanks!

Well, as I mentioned at the beginning of my comment, when looking at a trace in Windows Performance Analyzer I saw the driver, along with a host of other processes, requesting 1 ms timers, but the system timer was still fixed at ~15 ms. I did test what you suggested first, writing a simple program that called timeBeginPeriod (timeSetEvent could also have been used; according to https://stackoverflow.com/questions/67170346/do-i-have-to-call-timebeginperiod-before-timesetevent-when-using-windows-multime it calls the former), but it had absolutely no impact on the low-latency audio performance.

After realizing that a MIDI driver on my system greatly improved the performance when in use, I also saw that the system timer went down from ~15 ms to 1 ms in WPA (along with a new timer in its own section called “Driver”). I assume that the audio driver in question was relying on something else in the system that could not benefit from the “local” timer requested by the process, and instead needed the global timer to go down. ExSetTimerResolution was the first thing I tried and it worked beautifully. Since writing the comment, though, I realized that I had been given a beta of their driver that actually solved the same problem, perhaps even by calling the same function.

These differences between “soft” and “hard” timer changes can also be observed with a “powercfg /energy /duration n” type report. When the system timer is changed by a kernel driver (to say 1ms), the report warns about an outstanding kernel timer request, and that the platform timer is 10,000 100ns units :), but when using a userland function there is no kernel timer request, only regular timer requests reported, and the platform timer is in my case reported as 156,250 100ns units.
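For reference, converting the 100 ns units used in these reports is simple arithmetic: 10,000 units is 1 ms (1000 timer interrupts per second), and 156,250 units is the familiar 15.625 ms default (64 per second). A small helper sketch, with names invented for this example:

```c
#include <stdint.h>

/* ExSetTimerResolution's DesiredTime and the powercfg "platform timer"
   value are both expressed in 100 ns units. */
static double hundred_ns_to_ms(uint32_t units) {
    return units / 10000.0; /* 10,000 x 100 ns = 1 ms */
}

/* Timer interrupts per second for an interval given in 100 ns units. */
static double interrupts_per_second(uint32_t units) {
    return 10000000.0 / units; /* 10,000,000 x 100 ns = 1 s */
}
```

So the kernel-driver case in the report is running the timer 15.625 times faster than the default case.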

Hello,

Could you please share the compiled version of the driver? I (and, I assume, many others) am not in a position to compile it myself, but I’d very much like to test it.

Thank you in advance!

Hello Stoyan,

Nice to hear that such a simple slapped together thing could be useful to someone else, please find it here:

https://1drv.ms/u/s!AmaIcdR-tmXPkcUHbFHStXFcAe–JA?e=8Mq2RM

And please treat this as 100% insecure, test it in a sandbox environment etc etc

Best regards

Alexander

Hello Alexander!

Thank you so much for sharing!

I can confirm that after disabling driver signature enforcement from the startup options I was able to install and use the driver. It didn’t have as dramatic an impact as I was hoping for, but with some fine-tuning of the resolution interval I managed to lower the delta between the resolution and the sleep to the 0.00XX ms range (measured with MeasureSleep from GitHub).

If someone was to sign this it would be a great little gem for people who need it.

I’ve seen a way to “load” the unsigned driver without using the startup option for unsigned drivers, however I’m not sure how it’s done.

Otherwise one should restart into the “startup options menu” and select the option for the driver to load properly.

Another concern is that this unsigned driver (and state of the system) might interfere with some anti-cheat solutions, which could lead to unwanted and undeserved bans from online competitive games.

Nevertheless – Thank you for the readiness to share! Much appreciate it!

All the best,

Stoyan

The behavior change is explained here, in the official documentation:

https://docs.microsoft.com/en-us/windows/win32/api/timeapi/nf-timeapi-timebeginperiod

The single paragraph about the change in version 2004 explains how it works and is in line with what you have described in this article. In principle, it’s completely straightforward.

Predicting results in a complex system, however, is not so simple.

Thanks for the reminder. I knew that this was documented now (lightly, as you say) but I’d forgotten to update this article. I’ve done that now.

More details about the motives (power saving) and implementation (trying to get the requested interval on average?) would be nice but I guess it’s mostly understood now.

Aside: I randomly met the developer for this feature at a yoga class…

> CPUs have historically not been good at keeping accurate time (their clocks intentionally fluctuate to avoid being FM transmitters…

I would love to read more about this phenomenon!

Unfortunately I can’t find any online information about this. I was told about this technique when working on the Xbox 360. It nominally ran at 3.2 GHz but it actually varied between that speed and 1% lower, for an average speed of about 3.184 GHz. I assume that other CPUs do the same thing, but I cannot prove it.
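A quick back-of-the-envelope check shows why this matters for timekeeping. Assuming the clock spends equal time across the sweep, so that the average is the midpoint, a 1% downward sweep from 3.2 GHz averages 3.184 GHz, and anything that converts a cycle count to time using the nominal 3.2 GHz would run 0.5% slow, about 432 seconds (over seven minutes) per day. The function names are illustrative.

```c
/* Average frequency when the clock is swept down by `spread` (0.01 = 1%).
   Assumption: equal time at every point of the sweep, so the average
   is the midpoint of the range. */
static double average_ghz(double nominal_ghz, double spread) {
    return nominal_ghz * (1.0 - spread / 2.0);
}

/* Seconds lost per day by a clock that converts counted cycles to time
   using the nominal frequency while the real average is lower.
   Counted cycles / nominal = real time * (actual / nominal). */
static double drift_seconds_per_day(double nominal_ghz, double actual_avg_ghz) {
    return (1.0 - actual_avg_ghz / nominal_ghz) * 86400.0;
}
```

This is one reason timekeeping is usually based on a dedicated fixed-frequency timer rather than on counting CPU cycles.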

Look for “Spread Spectrum” BIOS options. They intentionally add jitter to the CPU/PCIe/… clock in order to spread the emitted energy from a single tone over a range of frequencies. The area under the spectrum (the total energy emitted, with the spectrum being energy density over frequency) stays the same, but the frequency range is wider, which lowers the energy density.

Hey sir, can I ask why KeSetTimer feels so extremely good when set to nanoseconds, much faster than timeBeginPeriod?

I don’t know. I think KeSetTimer lets you set the timer interval to 0.5 ms which might make a difference in some cases, but I don’t know what “so extreme good” means.

I do think that programs should try to rely less on raising the timer interrupt frequency. Waiting on events (v-blank interrupts, audio-data needed interrupts, and their signaled variants) is much more energy efficient and can give perfect responsiveness.

I’m sorry for being unclear. I meant that input feels much better. I tried a lot of timer functions:

timeBeginPeriod
ExSetTimerResolution
ZwSetTimerResolution
RDTSC with QueryPerformanceFrequency/QueryPerformanceCounter
thread pool timers
timeSetEvent

and KeSetTimer did better.

KeQueryInterruptTime is the real thing. I’ve never seen a timer that good; it was the fastest I tested.

It’s possible to read QueryPerformanceCounter at 1 ns (1000000000). The only problem is HT/SMT: I read it on the first core to avoid sync problems (SetThreadAffinityMask). I’m also using NtDelayExecution with 100 ns. I think this is the fastest you can go on Windows.
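On the resolution point: a counter can’t be read more finely than its frequency. If QueryPerformanceFrequency reports 10,000,000 (10 MHz, which is often seen on recent Windows 10 systems) then one tick is 100 ns, not 1 ns. Converting raw ticks to nanoseconds is also easy to get wrong, because ticks × 1,000,000,000 overflows 64 bits once the counter passes about 30 minutes’ worth of 10 MHz ticks. The sketch below splits off whole seconds first; it is plain C with the frequency passed in, and ticks_to_ns is a name made up for this example.

```c
#include <stdint.h>

/* Convert a performance-counter tick count to nanoseconds without
   overflowing 64 bits: split into whole seconds plus a remainder before
   scaling. `frequency` is ticks per second (what QueryPerformanceFrequency
   reports on Windows). Assumes frequency is below ~18 GHz so that
   remainder * 1e9 cannot overflow. */
static uint64_t ticks_to_ns(uint64_t ticks, uint64_t frequency) {
    uint64_t whole_seconds = ticks / frequency;
    uint64_t remainder = ticks % frequency;
    return whole_seconds * 1000000000ULL + remainder * 1000000000ULL / frequency;
}
```

With a 10 MHz counter, ticks_to_ns(1, 10000000) is 100, which is the finest step that counter can express.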

You can restore the old global timer resolution behavior with this registry value (a restart is required):

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Session Manager\Kernel
Name: GlobalTimerResolutionRequests
Type: DWORD
Value: 1

Thanks – that’s very interesting. I wouldn’t recommend setting that (except for test purposes), and software definitely shouldn’t set it on user machines, but it’s good to have that option for testing.

Hey,

I tried it on Windows 10 and it didn’t work.

Is there a solution for Windows 10?

Thanks

I would guess that the registry key would _only_ apply to Windows 10 (maybe to Windows 11) because the change didn’t happen until Windows 10.

Anyway, why do you want this?

Hi,

The registry key works only on Windows 11. Yes, the change was made in the Windows 10 version 2004 update, but the key still doesn’t work there. Is there a similar solution for Windows 10?

Thanks.

better frame pacing in games

If the scheduler change makes a difference to the frame pacing in games then that suggests that the games are buggy and that they are failing to request a higher timer frequency themselves. Eventually they will probably sort themselves out.

Wishful thinking, IMO. Games that have been broken for a decade or longer won’t just “eventually sort themselves out” at this point.

Why would a game “sort itself out”? This doesn’t make any sense: if older games are broken on Windows 10 2004 and later, no one will ever patch them, because in some cases the studio behind the game may have closed. How will that make a game “sort itself out”?

You’re right – any unsupported games will remain broken, and that is a shame. They can probably be hacked to inject the necessary calls to fix them – I hope somebody creates a tool for that, and that it works on many games.

hello,

I was reading Windows Internals and it says that timers are only handled on CPU 0 on Modern Standby-enabled laptops (7th edition, Part 2, chapter 8, page 73). Using the kernel debugger, I confirmed that laptops without Modern Standby have the same behavior.

My question is: Windows has had Intelligent Timer Tick Distribution and timer coalescing since Windows 7, and a tickless timer since Windows 8, so is it still necessary to put all timers on CPU 0 for power-saving purposes?

Also, do you think the same idea of power saving applies to interrupts? Windows has an interrupt routing setting that can route interrupts to cpu0/cpu1.

My understanding is that parallelism is always preferable for efficiency, so I see no reason to pile lots of things onto the first core.

Parallelism is important if you want to maximize performance. However, keeping work on a single core is preferable if you want to maximize efficiency. Every time a CPU core wakes up it consumes some energy, more energy than it takes to handle an interrupt.

Your distinction between timers and interrupts suggests some confusion. The timers are timer interrupts. For maximum power efficiency all interrupts, including timer interrupts, should be handled on the same core.

Have you considered package C-states? If you keep interrupts on one core, other cores would certainly stay in C-states longer, but package C-states depend on the lightest core C-state, meaning that if you load too many things on one core, the package is less likely to go to deep package C-states due to one core waking up too frequently.

I don’t claim to be an expert, but I think that a core being overloaded by interrupts on an otherwise mostly idle system is unusual and unlikely.

That said, optimal power management is challenging and full of heuristics, because the ideal solution usually requires predicting the future which is known to be difficult.

Hello again, I was reading Windows Internals and found this paragraph:

“Back when the clock only fired in a periodic mode, since its expiration was at known interval multiples, each multiple of the system time that a timer could be associated with is an index called a hand, which is stored in the timer object’s dispatcher header. Windows used that fact to organize all driver and application timers into linked lists based on an array where each entry corresponds to a possible multiple of the system time. Because modern versions of Windows 10 no longer necessarily run on a periodic tick (due to the dynamic tick functionality), a hand has instead been redefined as the upper 46 bits of the due time (which is in 100 ns units). This gives each hand an approximate “time” of 28 ms. Additionally, because on a given tick (especially when not firing on a fixed periodic interval), multiple hands could have expiring timers, Windows can no longer just check the current hand. Instead, a bit-map Is used to track each hand in each processor’s timer table. These pending hands are found using the bitmap and checked during every clock interrupt.”

I don’t understand why a hand interval is 28 ms instead of 15 ms. If a timer needs to expire right after one clock interval, does it expire after 15 ms or after 28 ms?
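Taking the quoted definition literally, the arithmetic behind a hand looks like this. Dropping the low 64 − 46 = 18 bits of a due time in 100 ns units gives buckets of 2^18 × 100 ns ≈ 26.2 ms, in the same ballpark as the book’s approximate figure. This sketch only models the bucketing described in the passage (hand_of and hand_width_ns are illustrative names); it says nothing about how precisely a timer inside a bucket actually fires.

```c
#include <stdint.h>

/* Per the quoted passage: a timer's "hand" is the upper 46 bits of its
   64-bit due time, measured in 100 ns units. Dropping the low
   64 - 46 = 18 bits means each hand covers 2^18 units. */
#define HAND_SHIFT 18

static uint64_t hand_of(uint64_t due_time_100ns) {
    return due_time_100ns >> HAND_SHIFT;
}

/* Width of one hand in nanoseconds: 2^18 x 100 ns = 26,214,400 ns. */
static uint64_t hand_width_ns(void) {
    return ((uint64_t)1 << HAND_SHIFT) * 100;
}
```

Two due times 15.625 ms (156,250 units) apart can land in the same hand or in neighbouring hands, depending on where they fall relative to a bucket boundary.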

That’s interesting. What section is that?

There is a tension between precision (high-frequency interrupts) and power efficiency (lower-frequency interrupts) and I think the question that Microsoft’s kernel developers would ask is “why do you need to wake up at that exact time” and their follow-up question would be why you aren’t waiting on something relevant to your need, such as a v-blank, disk-read completion, audio-buffer draining, etc.

I think that Microsoft is right (waiting on timers with that resolution shouldn’t ever be necessary) but also they violate this rule as frequently as anyone.

Windows Internals, 7th edition, Part 2, page 70.

Correct me if I am wrong: the expiration time is actually 15 ms ± 15 ms, because a relative timer could fire earlier or later than its actual expiration time by one clock interval, and the error depends on which hand is nearest to the expiration time.

ExSetTimerResolution – working

LatencyMon (Kernel Timer Latency) shows 1000 µs (12000 µs before).

KeSetTimerEx – same, maybe a little better.

How can I call this function without a driver?

I just want to use an .exe or a Windows service for this.

OK, all I could get running was ExSetTimerResolution, because it is very simple, but I don’t know how to use KeSetTimer, KeSetTimerEx or KeQueryInterruptTime (I tried, but got either nothing or BSODs).

What is the difference? Maybe someone has code that drives the system timer with these functions.

Really insightful post!

It helped me figure out the cause of a bug in an old 2000s game where the frame rate was capped at 64 fps on modern Windows. It called Sleep(1) in every iteration of the game loop, assuming it would always sleep for 1 ms, but this behavior of modern Windows was affecting that call.
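The 64 fps cap falls straight out of the default timer interval: if Sleep(1) can only end on the next 15.625 ms tick, the loop can run at most 1000 / 15.625 = 64 times per second. Here is that arithmetic as an idealized C sketch; it assumes the loop starts right after a tick, wakes exactly on a tick, and that the frame’s own work takes no time (real Sleep timing is messier). The function names are illustrative.

```c
#include <stdint.h>

/* Length of a sleep that can only end on a timer tick: the request is
   rounded up to a whole number of ticks (all values in microseconds). */
static int64_t effective_sleep_us(int64_t requested_us, int64_t tick_us) {
    return (requested_us + tick_us - 1) / tick_us * tick_us;
}

/* Frame-rate ceiling of a loop that sleeps once per frame, ignoring the
   time spent on the frame's actual work. */
static double max_fps(int64_t requested_us, int64_t tick_us) {
    return 1000000.0 / (double)effective_sleep_us(requested_us, tick_us);
}
```

With the timer raised to 1 ms the same model gives a ceiling of 1000 fps, which is why the cap only appeared when no process was raising the timer frequency.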

Interesting! Presumably that means that that game would end up with a capped frame rate even on old versions of Windows if there wasn’t another process running that had raised the timer interrupt frequency. What is the game?

It’s the tie-in licensed game for the Pixar film Ratatouille, developed by Asobo Studio. To this day they use the same engine as back then (with lots of upgrades over the years).

Crysis 2 is another example, capped at 64 fps unless higher timer resolution is called.

Serious Sam 2 and HD are also affected to some extent when using their built-in frame rate limiter, often falling below the frame rate target.

It is pretty sad that Crysis 2 and Serious Sam 2 were relying on other processes on the customer’s computer to raise the timer interrupt frequency in order to run properly. The mind boggles. Obviously the developers didn’t realize this, but it is a (sadly) hilarious indictment of Windows software development.

* I was under the impression that <timeapi.h> (the multimedia timers API to which timeBeginPeriod belongs) had been deprecated for a long time. I’m surprised so many applications still have to rely on it. https://learn.microsoft.com/en-us/windows/win32/multimedia/multimedia-timers

* Visual Studio seems to raise the timer frequency _sometimes_. Not long ago I wanted to test whether my program, which does not raise the timer frequency, was benefiting from other processes having done so, and I found I had to close all instances of Visual Studio (among other applications) before clockres showed the default rate. But lately, that doesn’t seem to be the case. I think VS’s “diagnostic tools” (the live graphs that pop up when you run the debugger) might be a culprit.

* I recently came across the Multimedia Class Scheduler Service. With it, individual threads can tell the service when they are performing a multimedia task, like “Pro Audio” or “Games”.

https://learn.microsoft.com/en-us/windows/win32/procthread/multimedia-class-scheduler-service

Browsing the registry settings for the task classes, I see most (all?) have a “Clock Rate” value of 10000. Assuming 100 ns units, that’s 1 ms. I wonder whether that’s the way modern programs are expected to request higher precision timing.

With per-thread resolution, the system theoretically could track whether a window-owning multimedia class thread has any non-hidden, non-minimized, and non-occluded windows when deciding whether it gets a vote raising the timer frequency.

* The Desktop Window Manager has a facility to buffer up to 8 frames that it will compose onto the desktop at a steady pace. Highly interactive applications often wait for the vertical blank (hopefully for the correct monitor) and then present the next frame. But a low interactivity task (like video playback or an animation) could present a burst of frames and then sleep for many milliseconds while the DWM methodically works through the batch. That would not require a precise sleep time.

That article only says that the Joysticks feature is deprecated. timeBeginPeriod is unrelated. I haven’t heard that it has been deprecated, and it’s still extremely important.

I would agree that many apps don’t need high-precision timers. The DWM example is one good one. Although, buffering 8 frames implies 133-266 ms of buffered data, which is enough that delays in handling “pause” would be noticeable, so it’s not clear that it is worth the complexity/tradeoffs, even in the simple video playback case.

Even if it is, 8 frames is a fair amount of memory. Tradeoffs galore.

And, of course, games and video chat require short latencies. Not always 1 ms, but 1 ms latency does make things easier.

Tricky challenges.

The bit about joysticks seems to be a copy-paste error. This page refers to the multimedia timers as a “legacy” feature and “strongly recommends” converting to the shiny new thing. https://learn.microsoft.com/en-us/windows/win32/multimedia/using-multimedia-timers?source=recommendations

Buffering 8 frames would be a lot, but you can buffer any value up to 8. At 30 fps, 3 frames is only 100 ms, which means the average response time to a pause could be 50 ms. (Faster is always better, but UI response times below 100 ms are often considered acceptable.) The playback thread could sleep for 4 or more cycles of a 15.625 ms interval on a regular basis.
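The buffering arithmetic in this exchange can be checked in a few lines. Keeping one frame in reserve as a safety margin is my assumption, not something stated above; with it, a 3-frame buffer at 30 fps leaves room to sleep 4 whole 15.625 ms ticks, which matches the “4 or more” estimate. Times are integer microseconds, so fractions are truncated; the function names are illustrative.

```c
#include <stdint.h>

/* Duration of `frames` buffered frames at `fps`, in microseconds. */
static int64_t buffer_us(int64_t frames, int64_t fps) {
    return frames * 1000000 / fps;
}

/* Whole timer ticks a playback thread can sleep while keeping
   `reserve_frames` queued as a safety margin (assumed policy). */
static int64_t sleepable_ticks(int64_t frames, int64_t reserve_frames,
                               int64_t fps, int64_t tick_us) {
    return buffer_us(frames - reserve_frames, fps) / tick_us;
}
```

The same helper reproduces the earlier figures: 8 frames is about 133 ms at 60 fps and about 267 ms at 30 fps.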

Gotta love copy/paste errors.

Okay, so Microsoft wants people to stop using the multimedia timers, and I guess that includes timeBeginPeriod, but I just don’t see it happening. For one thing there are the millions of programs using it already. For another, I haven’t seen an article that convincingly explains how, and why. Developers aren’t going to rewrite their code without a reason, and just saying “it’s deprecated” isn’t going to work very well, especially when so much of Microsoft’s code still uses timeBeginPeriod.

But the how is also important. It’s easy to write timeBeginPeriod(1) and then start enjoying the increased resolution of Sleep, WaitForSingleObject timeouts, etc. How do developers make the transition? What is the mapping? I haven’t investigated.

This page, for example, demonstrates timeBeginPeriod, says that it is a legacy API, but contains zero links to explain how to rewrite the code:

https://learn.microsoft.com/en-us/windows/win32/multimedia/obtaining-and-setting-timer-resolution

Personally I take one look at that and decide not to bother. Unclear benefits and an unclear transition plan tells me to stick with what I’m using.