I have been making the same recommendations about tempdb since I started working with SQL Server over 15 years ago, when I was working with customers running version 2000. The gist of it: create multiple data files that are sized the same, with the same auto-growth settings, enable trace flag 1118 (and maybe 1117), and reduce your tempdb use. From the customer side, this has been the limit of what can be done*, until SQL Server 2019.

*There are a few additional coding recommendations that Pam Lahoud discusses in her very informative post, TEMPDB – Files and Trace Flags and Updates, Oh My!

What I find interesting is that, after all this time, tempdb is still a problem. The SQL Server team has made many changes over the years to try and mitigate issues, but the abuse continues. The latest adaptation by the SQL Server team is moving the system tables (metadata) for tempdb to In-Memory OLTP (aka memory-optimized). Some information is available in the SQL Server 2019 release notes, and there was a demo from Bob Ward and Conor Cunningham during the first day of the PASS Summit keynote. Pam Lahoud also did a quick demo in her PASS Summit general session. Now that 2019 CTP 3.2 is out, I thought it might be time to do a bit of testing myself.

Setup

I have SQL Server 2019 CTP 3.2 installed on my virtual machine, which has 8GB of memory (max server memory set to 6 GB) and 4 vCPUs. I created four (4) tempdb data files, each sized to 1GB.
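A tempdb layout like the one described above can be created with ALTER DATABASE. The following is a minimal sketch, not necessarily the exact commands used for this test. It assumes tempdb starts with a single data file that uses the default logical name tempdev. The logical names temp2 through temp4, the D:\TempDB\ path, and the 256MB growth increment are hypothetical placeholders.

```sql
-- Sketch: four equally sized tempdb data files with identical growth settings.
-- Assumes tempdb currently has one data file with the default logical name "tempdev".
-- temp2-temp4, D:\TempDB\ and the 256MB growth increment are hypothetical placeholders.
USE [master];
GO
ALTER DATABASE [tempdb] MODIFY FILE (NAME = N'tempdev', SIZE = 1GB, FILEGROWTH = 256MB);
ALTER DATABASE [tempdb] ADD FILE (NAME = N'temp2', FILENAME = N'D:\TempDB\temp2.ndf', SIZE = 1GB, FILEGROWTH = 256MB);
ALTER DATABASE [tempdb] ADD FILE (NAME = N'temp3', FILENAME = N'D:\TempDB\temp3.ndf', SIZE = 1GB, FILEGROWTH = 256MB);
ALTER DATABASE [tempdb] ADD FILE (NAME = N'temp4', FILENAME = N'D:\TempDB\temp4.ndf', SIZE = 1GB, FILEGROWTH = 256MB);
GO
-- Confirm that all data files have the same size and growth settings
-- (size is stored in 8KB pages, so size * 8 / 1024 gives MB).
SELECT [name], [size] * 8 / 1024 AS [size_mb], [growth], [is_percent_growth]
FROM tempdb.sys.database_files
WHERE [type_desc] = N'ROWS';
```

If setup already created additional data files, use MODIFY FILE for those instead of ADD FILE.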

I restored a copy of WideWorldImporters and then created three stored procedures (definitions below). Each stored procedure accepts a date input and pushes all rows from Sales.Orders and Sales.OrderLines for that date into a temporary object. In Sales.usp_OrderInfoTV the object is a table variable. In Sales.usp_OrderInfoTT the object is a temporary table created via SELECT … INTO. In Sales.usp_OrderInfoTTALT the object is a temporary table that is created first (CREATE TABLE) and then populated with INSERT … SELECT. After the data is added to the temporary object, a SELECT statement against the object joins to the Sales.Customers table.

/*
Create the stored procedures
*/
USE [WideWorldImporters];
GO
DROP PROCEDURE IF EXISTS Sales.usp_OrderInfoTV;
GO
CREATE PROCEDURE Sales.usp_OrderInfoTV @OrderDate DATE
AS
BEGIN
    DECLARE @OrdersInfo TABLE (
        OrderID INT,
        OrderLineID INT,
        CustomerID INT,
        StockItemID INT,
        Quantity INT,
        UnitPrice DECIMAL(18,2),
        OrderDate DATE);

    INSERT INTO @OrdersInfo (
        OrderID,
        OrderLineID,
        CustomerID,
        StockItemID,
        Quantity,
        UnitPrice,
        OrderDate)
    SELECT
        o.OrderID,
        ol.OrderLineID,
        o.CustomerID,
        ol.StockItemID,
        ol.Quantity,
        ol.UnitPrice,
        o.OrderDate
    FROM Sales.Orders o
    INNER JOIN Sales.OrderLines ol
        ON o.OrderID = ol.OrderID
    WHERE o.OrderDate = @OrderDate;

    SELECT o.OrderID,
        c.CustomerName,
        SUM (o.Quantity),
        SUM (o.UnitPrice)
    FROM @OrdersInfo o
    JOIN Sales.Customers c
        ON o.CustomerID = c.CustomerID
    GROUP BY o.OrderID, c.CustomerName;
END
GO

DROP PROCEDURE IF EXISTS Sales.usp_OrderInfoTT;
GO
CREATE PROCEDURE Sales.usp_OrderInfoTT @OrderDate DATE
AS
BEGIN
    SELECT
        o.OrderID,
        ol.OrderLineID,
        o.CustomerID,
        ol.StockItemID,
        ol.Quantity,
        ol.UnitPrice,
        o.OrderDate
    INTO #temporderinfo
    FROM Sales.Orders o
    INNER JOIN Sales.OrderLines ol
        ON o.OrderID = ol.OrderID
    WHERE o.OrderDate = @OrderDate;

    SELECT o.OrderID,
        c.CustomerName,
        SUM (o.Quantity),
        SUM (o.UnitPrice)
    FROM #temporderinfo o
    JOIN Sales.Customers c
        ON o.CustomerID = c.CustomerID
    GROUP BY o.OrderID, c.CustomerName;
END
GO

DROP PROCEDURE IF EXISTS Sales.usp_OrderInfoTTALT;
GO
CREATE PROCEDURE Sales.usp_OrderInfoTTALT @OrderDate DATE
AS
BEGIN
    CREATE TABLE #temporderinfo (
        OrderID INT,
        OrderLineID INT,
        CustomerID INT,
        StockItemID INT,
        Quantity INT,
        UnitPrice DECIMAL(18,2));

    INSERT INTO #temporderinfo (
        OrderID,
        OrderLineID,
        CustomerID,
        StockItemID,
        Quantity,
        UnitPrice)
    SELECT
        o.OrderID,
        ol.OrderLineID,
        o.CustomerID,
        ol.StockItemID,
        ol.Quantity,
        ol.UnitPrice
    FROM Sales.Orders o
    INNER JOIN Sales.OrderLines ol
        ON o.OrderID = ol.OrderID
    WHERE o.OrderDate = @OrderDate;

    SELECT o.OrderID,
        c.CustomerName,
        SUM (o.Quantity),
        SUM (o.UnitPrice)
    FROM #temporderinfo o
    JOIN Sales.Customers c
        ON o.CustomerID = c.CustomerID
    GROUP BY o.OrderID, c.CustomerName;
END
GO

/*
Create tables to hold testing data
*/
USE [WideWorldImporters];
GO
CREATE TABLE [dbo].[PerfTesting_Tests] (
    [TestID] INT IDENTITY(1,1),
    [TestName] VARCHAR (200),
    [TestStartTime] DATETIME2,
    [TestEndTime] DATETIME2
) ON [PRIMARY];
GO
CREATE TABLE [dbo].[PerfTesting_WaitStats] (
    [TestID] [int] NOT NULL,
    [CaptureDate] [datetime] NOT NULL DEFAULT (sysdatetime()),
    [WaitType] [nvarchar](60) NOT NULL,
    [Wait_S] [decimal](16, 2) NULL,
    [Resource_S] [decimal](16, 2) NULL,
    [Signal_S] [decimal](16, 2) NULL,
    [WaitCount] [bigint] NULL,
    [Percentage] [decimal](5, 2) NULL,
    [AvgWait_S] [decimal](16, 4) NULL,
    [AvgRes_S] [decimal](16, 4) NULL,
    [AvgSig_S] [decimal](16, 4) NULL
) ON [PRIMARY];
GO

/*
Enable Query Store
(testing settings, not exactly what
I would recommend for production)
*/
USE [master];
GO
ALTER DATABASE [WideWorldImporters] SET QUERY_STORE = ON;
GO
ALTER DATABASE [WideWorldImporters] SET QUERY_STORE (
    OPERATION_MODE = READ_WRITE,
    CLEANUP_POLICY = (STALE_QUERY_THRESHOLD_DAYS = 30),
    DATA_FLUSH_INTERVAL_SECONDS = 600,
    INTERVAL_LENGTH_MINUTES = 10,
    MAX_STORAGE_SIZE_MB = 1024,
    QUERY_CAPTURE_MODE = AUTO,
    SIZE_BASED_CLEANUP_MODE = AUTO);
GO

Testing

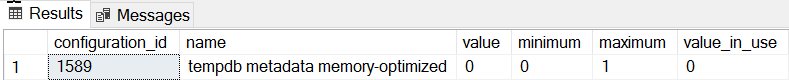

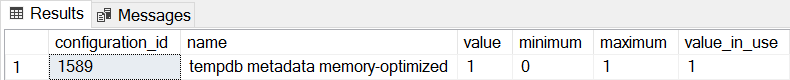

The default behavior for SQL Server 2019 is that the tempdb metadata is not memory-optimized, and we can confirm this by checking sys.configurations:

SELECT *
FROM sys.configurations
WHERE configuration_id = 1589;

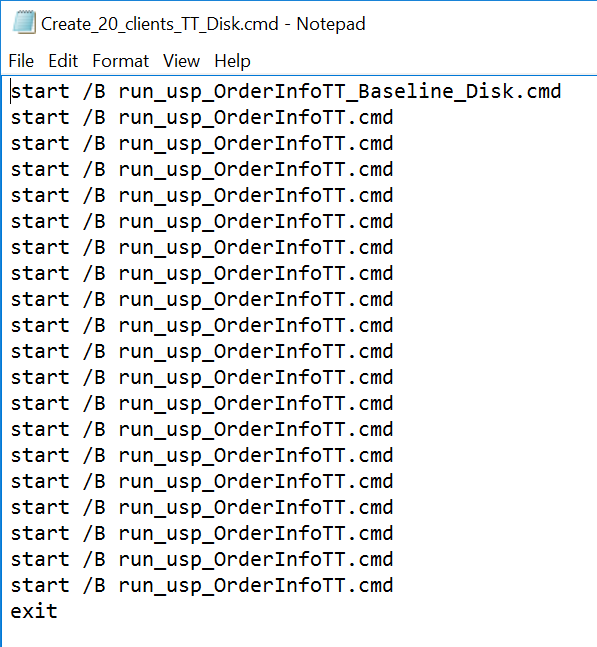

For all three stored procedures, we will use sqlcmd to generate 20 concurrent threads running one of two different .sql files. The first .sql file, which will be used by 19 threads, will execute the procedure in a loop 1000 times. The second .sql file, which will just have one (1) thread, will execute the procedure in a loop 3000 times, for a total of 22,000 executions per procedure. The second file also includes T-SQL to capture two metrics of interest: total duration and wait statistics. We will use Query Store to capture average duration for the procedure.

/*
Example of first .sql file
which calls the SP 1000 times
*/
SET NOCOUNT ON;
GO
USE [WideWorldImporters];
GO
DECLARE @StartDate DATE;
DECLARE @MaxDate DATE;
DECLARE @Date DATE;
DECLARE @Counter INT = 1;

SELECT @StartDate = MIN(OrderDate) FROM [WideWorldImporters].[Sales].[Orders];
SELECT @MaxDate = MAX(OrderDate) FROM [WideWorldImporters].[Sales].[Orders];
SET @Date = @StartDate;

WHILE @Counter <= 1000
BEGIN
    EXEC [Sales].[usp_OrderInfoTT] @Date;
    -- Advance one day; wrap back to the first date after the last one
    IF @Date < @MaxDate
    BEGIN
        SET @Date = DATEADD(DAY, 1, @Date);
    END
    ELSE
    BEGIN
        SET @Date = @StartDate;
    END
    SET @Counter = @Counter + 1;
END
GO

/*
Example of second .sql file
which calls the SP 3000 times
and captures total duration and
wait statistics
*/
SET NOCOUNT ON;
GO
USE [WideWorldImporters];
GO
DECLARE @StartDate DATE;
DECLARE @MaxDate DATE;
DECLARE @Date DATE;
DECLARE @Counter INT = 1;
DECLARE @TestID INT;
DECLARE @TestName VARCHAR(200) = 'Execution of usp_OrderInfoTT - Disk Based System Tables';

INSERT INTO [WideWorldImporters].[dbo].[PerfTesting_Tests] ([TestName]) VALUES (@TestName);
-- Identity value generated by the INSERT above
SET @TestID = SCOPE_IDENTITY();

SELECT @StartDate = MIN(OrderDate) FROM [WideWorldImporters].[Sales].[Orders];
SELECT @MaxDate = MAX(OrderDate) FROM [WideWorldImporters].[Sales].[Orders];
SET @Date = @StartDate;

IF EXISTS (SELECT * FROM [tempdb].[sys].[objects]
    WHERE [name] = N'##SQLskillsStats1')
    DROP TABLE [##SQLskillsStats1];
IF EXISTS (SELECT * FROM [tempdb].[sys].[objects]
    WHERE [name] = N'##SQLskillsStats2')
    DROP TABLE [##SQLskillsStats2];

SELECT [wait_type], [waiting_tasks_count], [wait_time_ms],
    [max_wait_time_ms], [signal_wait_time_ms]
INTO ##SQLskillsStats1
FROM sys.dm_os_wait_stats;

/*
set start time
*/
UPDATE [WideWorldImporters].[dbo].[PerfTesting_Tests]
SET [TestStartTime] = SYSDATETIME()
WHERE [TestID] = @TestID;

WHILE @Counter <= 3000
BEGIN
    EXEC [Sales].[usp_OrderInfoTT] @Date;
    -- Advance one day; wrap back to the first date after the last one
    IF @Date < @MaxDate
    BEGIN
        SET @Date = DATEADD(DAY, 1, @Date);
    END
    ELSE
    BEGIN
        SET @Date = @StartDate;
    END
    SET @Counter = @Counter + 1;
END

/*
set end time
*/
UPDATE [WideWorldImporters].[dbo].[PerfTesting_Tests]
SET [TestEndTime] = SYSDATETIME()
WHERE [TestID] = @TestID;

SELECT [wait_type], [waiting_tasks_count], [wait_time_ms],
    [max_wait_time_ms], [signal_wait_time_ms]
INTO ##SQLskillsStats2
FROM sys.dm_os_wait_stats;

WITH [DiffWaits] AS
(SELECT
    -- Waits that weren't in the first snapshot
    [ts2].[wait_type],
    [ts2].[wait_time_ms],
    [ts2].[signal_wait_time_ms],
    [ts2].[waiting_tasks_count]
FROM [##SQLskillsStats2] AS [ts2]
LEFT OUTER JOIN [##SQLskillsStats1] AS [ts1]
    ON [ts2].[wait_type] = [ts1].[wait_type]
WHERE [ts1].[wait_type] IS NULL
    AND [ts2].[wait_time_ms] > 0
UNION
SELECT
    -- Diff of waits in both snapshots
    [ts2].[wait_type],
    [ts2].[wait_time_ms] - [ts1].[wait_time_ms] AS [wait_time_ms],
    [ts2].[signal_wait_time_ms] - [ts1].[signal_wait_time_ms] AS [signal_wait_time_ms],
    [ts2].[waiting_tasks_count] - [ts1].[waiting_tasks_count] AS [waiting_tasks_count]
FROM [##SQLskillsStats2] AS [ts2]
LEFT OUTER JOIN [##SQLskillsStats1] AS [ts1]
    ON [ts2].[wait_type] = [ts1].[wait_type]
WHERE [ts1].[wait_type] IS NOT NULL
    AND [ts2].[waiting_tasks_count] - [ts1].[waiting_tasks_count] > 0
    AND [ts2].[wait_time_ms] - [ts1].[wait_time_ms] > 0),
[Waits] AS
(SELECT
    [wait_type],
    [wait_time_ms] / 1000.0 AS [WaitS],
    ([wait_time_ms] - [signal_wait_time_ms]) / 1000.0 AS [ResourceS],
    [signal_wait_time_ms] / 1000.0 AS [SignalS],
    [waiting_tasks_count] AS [WaitCount],
    100.0 * [wait_time_ms] / SUM ([wait_time_ms]) OVER() AS [Percentage],
    ROW_NUMBER() OVER(ORDER BY [wait_time_ms] DESC) AS [RowNum]
FROM [DiffWaits]
WHERE [wait_type] NOT IN (
    -- These wait types are almost 100% never a problem and so they are
    -- filtered out to avoid them skewing the results.
    N'BROKER_EVENTHANDLER', N'BROKER_RECEIVE_WAITFOR', N'BROKER_TASK_STOP',
    N'BROKER_TO_FLUSH', N'BROKER_TRANSMITTER', N'CHECKPOINT_QUEUE',
    N'CHKPT', N'CLR_AUTO_EVENT', N'CLR_MANUAL_EVENT',
    N'CLR_SEMAPHORE', N'CXCONSUMER', N'DBMIRROR_DBM_EVENT',
    N'DBMIRROR_EVENTS_QUEUE', N'DBMIRROR_WORKER_QUEUE', N'DBMIRRORING_CMD',
    N'DIRTY_PAGE_POLL', N'DISPATCHER_QUEUE_SEMAPHORE', N'EXECSYNC',
    N'FSAGENT', N'FT_IFTS_SCHEDULER_IDLE_WAIT', N'FT_IFTSHC_MUTEX',
    N'HADR_CLUSAPI_CALL', N'HADR_FILESTREAM_IOMGR_IOCOMPLETION', N'HADR_LOGCAPTURE_WAIT',
    N'HADR_NOTIFICATION_DEQUEUE', N'HADR_TIMER_TASK', N'HADR_WORK_QUEUE',
    N'KSOURCE_WAKEUP', N'LAZYWRITER_SLEEP', N'LOGMGR_QUEUE',
    N'MEMORY_ALLOCATION_EXT', N'ONDEMAND_TASK_QUEUE', N'PARALLEL_REDO_DRAIN_WORKER',
    N'PARALLEL_REDO_LOG_CACHE', N'PARALLEL_REDO_TRAN_LIST', N'PARALLEL_REDO_WORKER_SYNC',
    N'PARALLEL_REDO_WORKER_WAIT_WORK', N'PREEMPTIVE_XE_GETTARGETSTATE',
    N'PWAIT_ALL_COMPONENTS_INITIALIZED', N'PWAIT_DIRECTLOGCONSUMER_GETNEXT',
    N'QDS_PERSIST_TASK_MAIN_LOOP_SLEEP', N'QDS_ASYNC_QUEUE',
    N'QDS_CLEANUP_STALE_QUERIES_TASK_MAIN_LOOP_SLEEP',
    N'QDS_SHUTDOWN_QUEUE', N'REDO_THREAD_PENDING_WORK', N'REQUEST_FOR_DEADLOCK_SEARCH',
    N'RESOURCE_QUEUE', N'SERVER_IDLE_CHECK', N'SLEEP_BPOOL_FLUSH',
    N'SLEEP_DBSTARTUP', N'SLEEP_DCOMSTARTUP', N'SLEEP_MASTERDBREADY',
    N'SLEEP_MASTERMDREADY', N'SLEEP_MASTERUPGRADED', N'SLEEP_MSDBSTARTUP',
    N'SLEEP_SYSTEMTASK', N'SLEEP_TASK', N'SLEEP_TEMPDBSTARTUP',
    N'SNI_HTTP_ACCEPT', N'SOS_WORK_DISPATCHER', N'SP_SERVER_DIAGNOSTICS_SLEEP',
    N'SQLTRACE_BUFFER_FLUSH', N'SQLTRACE_INCREMENTAL_FLUSH_SLEEP',
    N'SQLTRACE_WAIT_ENTRIES', N'WAIT_FOR_RESULTS', N'WAITFOR',
    N'WAITFOR_TASKSHUTDOWN', N'WAIT_XTP_RECOVERY', N'WAIT_XTP_HOST_WAIT',
    N'WAIT_XTP_OFFLINE_CKPT_NEW_LOG', N'WAIT_XTP_CKPT_CLOSE',
    N'XE_DISPATCHER_JOIN', N'XE_DISPATCHER_WAIT', N'XE_TIMER_EVENT'
    )
)
INSERT INTO [WideWorldImporters].[dbo].[PerfTesting_WaitStats] (
    [TestID],
    [WaitType],
    [Wait_S],
    [Resource_S],
    [Signal_S],
    [WaitCount],
    [Percentage],
    [AvgWait_S],
    [AvgRes_S],
    [AvgSig_S]
)
SELECT
    @TestID,
    [W1].[wait_type] AS [WaitType],
    CAST ([W1].[WaitS] AS DECIMAL (16, 2)) AS [Wait_S],
    CAST ([W1].[ResourceS] AS DECIMAL (16, 2)) AS [Resource_S],
    CAST ([W1].[SignalS] AS DECIMAL (16, 2)) AS [Signal_S],
    [W1].[WaitCount] AS [WaitCount],
    CAST ([W1].[Percentage] AS DECIMAL (5, 2)) AS [Percentage],
    CAST (([W1].[WaitS] / [W1].[WaitCount]) AS DECIMAL (16, 4)) AS [AvgWait_S],
    CAST (([W1].[ResourceS] / [W1].[WaitCount]) AS DECIMAL (16, 4)) AS [AvgRes_S],
    CAST (([W1].[SignalS] / [W1].[WaitCount]) AS DECIMAL (16, 4)) AS [AvgSig_S]
FROM [Waits] AS [W1]
INNER JOIN [Waits] AS [W2]
    ON [W2].[RowNum] <= [W1].[RowNum]
GROUP BY [W1].[RowNum], [W1].[wait_type], [W1].[WaitS],
    [W1].[ResourceS], [W1].[SignalS], [W1].[WaitCount], [W1].[Percentage]
HAVING SUM ([W2].[Percentage]) - [W1].[Percentage] < 95; -- percentage threshold
GO

-- Cleanup
IF EXISTS (SELECT * FROM [tempdb].[sys].[objects]
    WHERE [name] = N'##SQLskillsStats1')
    DROP TABLE [##SQLskillsStats1];
IF EXISTS (SELECT * FROM [tempdb].[sys].[objects]
    WHERE [name] = N'##SQLskillsStats2')
    DROP TABLE [##SQLskillsStats2];
GO

Example of command line file:

Results

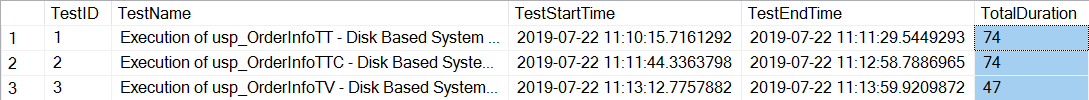

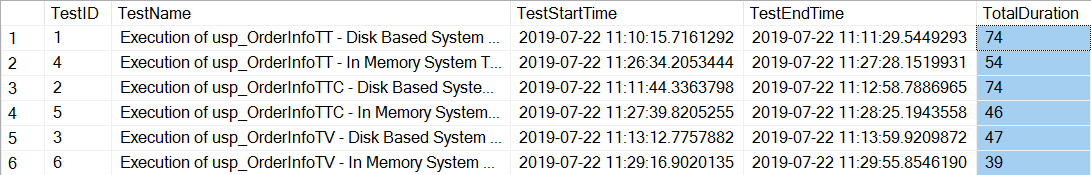

After executing the command line files that generate 20 threads for each stored procedure, checking the total duration for the 22,000 executions of each procedure shows the following:

SELECT *, DATEDIFF(SECOND, TestStartTime, TestEndTime) AS [TotalDuration]
FROM [dbo].[PerfTesting_Tests]
ORDER BY [TestID];

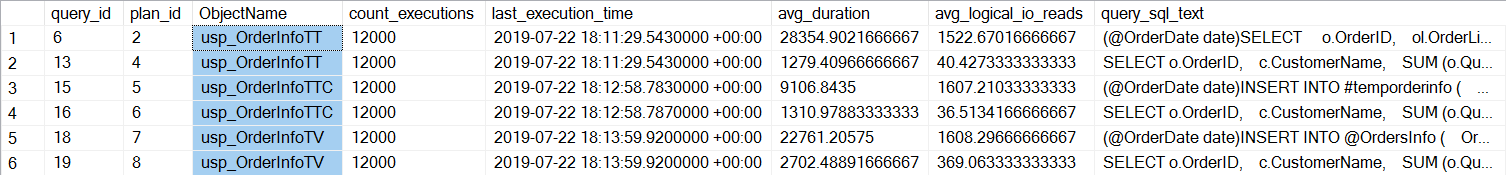

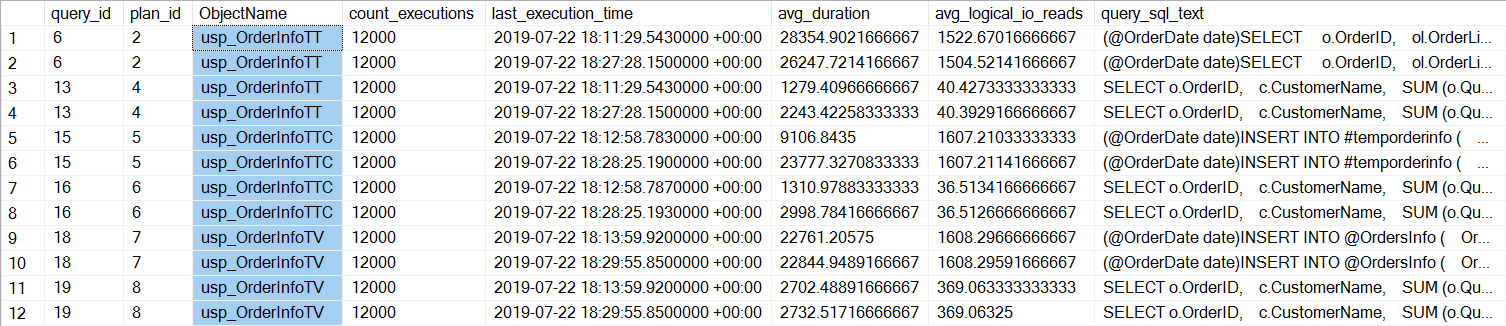

The stored procedures with the temporary tables (usp_OrderInfoTT and usp_OrderInfoTTALT) took longer to complete. If we look at individual query performance:

SELECT
    [qsq].[query_id],
    [qsp].[plan_id],
    OBJECT_NAME([qsq].[object_id]) AS [ObjectName],
    [rs].[count_executions],
    [rs].[last_execution_time],
    [rs].[avg_duration],
    [rs].[avg_logical_io_reads],
    [qst].[query_sql_text]
FROM [sys].[query_store_query] [qsq]
JOIN [sys].[query_store_query_text] [qst]
    ON [qsq].[query_text_id] = [qst].[query_text_id]
JOIN [sys].[query_store_plan] [qsp]
    ON [qsq].[query_id] = [qsp].[query_id]
JOIN [sys].[query_store_runtime_stats] [rs]
    ON [qsp].[plan_id] = [rs].[plan_id]
WHERE ([qsq].[object_id] = OBJECT_ID('Sales.usp_OrderInfoTT'))
    OR ([qsq].[object_id] = OBJECT_ID('Sales.usp_OrderInfoTV'))
    OR ([qsq].[object_id] = OBJECT_ID('Sales.usp_OrderInfoTTALT'))
ORDER BY [qsq].[query_id], [rs].[last_execution_time];

We can see that the SELECT … INTO for usp_OrderInfoTT took about 28ms on average (duration in Query Store is stored in microseconds), while the INSERT took only 9ms when the temporary table was pre-created (usp_OrderInfoTTALT). For the table variable, the INSERT took just over 22ms on average. Interestingly, the SELECT query took just over 1ms for the temporary tables, and approximately 2.7ms for the table variable.
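Because sys.query_store_runtime_stats holds one row per plan per collection interval (and per execution type), a single average per query needs each interval's avg_duration weighted by its count_executions. The query below is a sketch of that calculation, converting microseconds to milliseconds; the column aliases are illustrative and it should be run in the WideWorldImporters database.

```sql
-- Sketch: execution-weighted average duration per query, in milliseconds.
-- avg_duration is reported per interval in microseconds, so each interval is
-- weighted by its execution count before dividing by the total executions.
SELECT
    [qsq].[query_id],
    OBJECT_NAME([qsq].[object_id]) AS [ObjectName],
    SUM([rs].[count_executions]) AS [TotalExecutions],
    SUM([rs].[avg_duration] * [rs].[count_executions])
        / NULLIF(SUM([rs].[count_executions]), 0) / 1000.0 AS [AvgDuration_ms]
FROM [sys].[query_store_query] AS [qsq]
JOIN [sys].[query_store_plan] AS [qsp]
    ON [qsq].[query_id] = [qsp].[query_id]
JOIN [sys].[query_store_runtime_stats] AS [rs]
    ON [qsp].[plan_id] = [rs].[plan_id]
WHERE [qsq].[object_id] IN (OBJECT_ID(N'Sales.usp_OrderInfoTT'),
                            OBJECT_ID(N'Sales.usp_OrderInfoTV'),
                            OBJECT_ID(N'Sales.usp_OrderInfoTTALT'))
GROUP BY [qsq].[query_id], [qsq].[object_id]
ORDER BY [qsq].[query_id];
```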

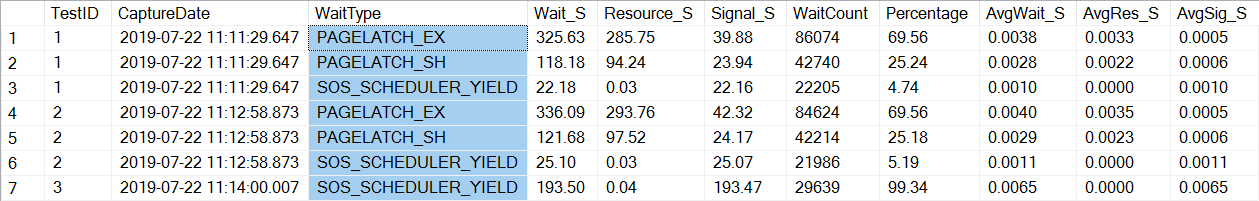

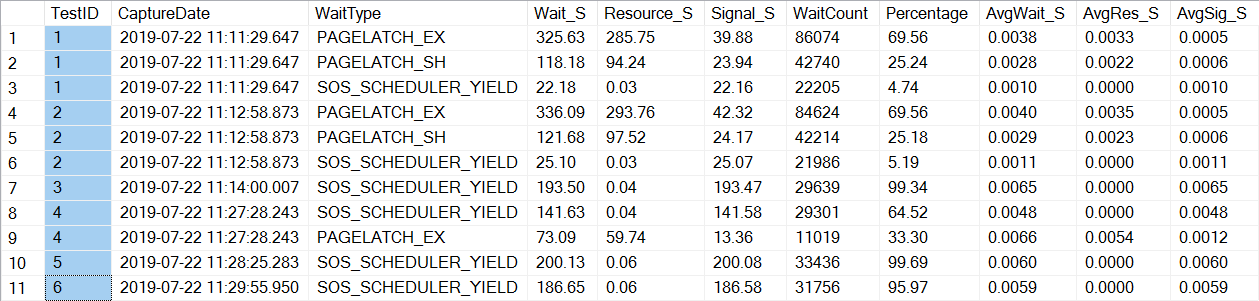

A check of wait statistics data finds a familiar wait_type, PAGELATCH*:

SELECT *
FROM [dbo].[PerfTesting_WaitStats]
ORDER BY [TestID], [Percentage] DESC;

Notice that we only see PAGELATCH* waits for tests 1 and 2, which were the procedures with the temporary tables. For usp_OrderInfoTV, which used a table variable, we only see SOS_SCHEDULER_YIELD waits. Please note: This does not in any way imply that you should use table variables instead of temporary tables, nor does it imply that you will not have PAGELATCH waits with table variables. This is a contrived scenario; I highly recommend you test with YOUR code to see what wait_types appear.
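The wait statistics above are cumulative for each test. To see which tempdb pages sessions are latching while a test is running, a query along these lines can be run from a separate session. This is a sketch that relies on tempdb always having database_id 2 and on PAGELATCH resource descriptions having the form database_id:file_id:page_id.

```sql
-- Sketch: tasks currently waiting on page latches in tempdb (database_id 2).
-- Run from a separate session while the load test is active.
SELECT
    [owt].[session_id],
    [owt].[wait_type],
    [owt].[wait_duration_ms],
    [owt].[resource_description] -- database_id:file_id:page_id
FROM sys.dm_os_waiting_tasks AS [owt]
WHERE [owt].[wait_type] LIKE N'PAGELATCH%'
  AND [owt].[resource_description] LIKE N'2:%';
```

On SQL Server 2019, sys.fn_PageResCracker and sys.dm_db_page_info can take this a step further and map a latched page to the object it belongs to.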

Now we’ll change the instance to use memory-optimized tables for the tempdb metadata. There are two ways to do this: the ALTER SERVER CONFIGURATION command or sp_configure. Because this setting is an advanced option, if you use sp_configure you’ll need to enable advanced options first.

ALTER SERVER CONFIGURATION SET MEMORY_OPTIMIZED TEMPDB_METADATA = ON;

GO
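If you prefer sp_configure, the equivalent is sketched below. The option name shown is the one that appears in sys.configurations for configuration_id 1589; verify it on your build with the sys.configurations query shown earlier.

```sql
-- Sketch: enable memory-optimized tempdb metadata with sp_configure.
-- The option is advanced, so advanced options must be visible first.
EXEC sys.sp_configure N'show advanced options', 1;
RECONFIGURE;
GO
EXEC sys.sp_configure N'tempdb metadata memory-optimized', 1;
RECONFIGURE;
GO
-- As with ALTER SERVER CONFIGURATION, the change only takes effect after a restart.
```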

After this change, it’s necessary to restart the instance. (NOTE: you can change this back to NOT use memory-optimized tables; you just have to restart the instance again.) After the restart, if we check sys.configurations again we can see the metadata tables are memory-optimized:
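Besides sys.configurations, SQL Server 2019 exposes the setting through SERVERPROPERTY. A small sketch of both checks follows. The commented-out statement at the end shows how the setting would be reverted, which, like enabling it, only takes effect after an instance restart.

```sql
-- Returns 1 when tempdb metadata is memory-optimized, 0 otherwise.
SELECT SERVERPROPERTY('IsTempDBMetadataMemoryOptimized') AS [IsTempDBMetadataMemoryOptimized];

-- [value] is the configured setting, [value_in_use] the running one;
-- they differ until the instance has been restarted.
SELECT [name], [value], [value_in_use]
FROM sys.configurations
WHERE configuration_id = 1589;
GO

-- To revert to disk-based tempdb metadata (also requires a restart):
-- ALTER SERVER CONFIGURATION SET MEMORY_OPTIMIZED TEMPDB_METADATA = OFF;
```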

After executing the command line files again, the total duration for the 22,000 executions of each procedure shows the following (note that results are ordered by stored procedure for easier comparison):

There was definitely an improvement in performance for both usp_OrderInfoTT and usp_OrderInfoTTALT, and a slight improvement for usp_OrderInfoTV. Let’s check query durations:

For all queries, query duration is nearly the same, except for the increase in INSERT duration when the table is pre-created, which is completely unexpected. We do see an interesting change in wait statistics:

For usp_OrderInfoTT, a SELECT … INTO is executed to create the temporary table. The waits change from being PAGELATCH_EX and PAGELATCH_SH to only PAGELATCH_EX and SOS_SCHEDULER_YIELD. We no longer see the PAGELATCH_SH waits.

For usp_OrderInfoTTALT, which creates the temporary table and then inserts, the PAGELATCH_EX and PAGELATCH_SH waits no longer appear, and we only see SOS_SCHEDULER_YIELD waits.

Finally, for usp_OrderInfoTV, the waits are consistent: only SOS_SCHEDULER_YIELD, with almost the same total wait time.

Summary

Based on this testing, we do see an improvement in all cases, and it is significant for the stored procedures with temporary tables. There is only a slight change for the table variable procedure. It’s extremely important to remember that this is one scenario, with a small load test. I was very interested to try these three very simple scenarios to understand what might benefit most from making the tempdb metadata memory-optimized. This workload was small and ran for a very limited time; in fact, I had more varied results with more threads, which is worth exploring in another post. The biggest takeaway is that, as with all new features and functionality, testing is important. For this feature, you want a baseline of current performance against which to compare metrics such as Batch Requests/sec and wait statistics after making the metadata memory-optimized.
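For the Batch Requests/sec part of such a baseline, one option is to sample the counter from sys.dm_os_performance_counters. A minimal sketch follows. The DMV exposes this counter as a cumulative value, so the query takes two samples and divides the difference by the interval; the 10-second interval is an arbitrary choice.

```sql
-- Sketch: sample Batch Requests/sec over a 10-second window.
-- The DMV value is cumulative since startup, so two samples are needed.
DECLARE @Before BIGINT, @After BIGINT;

SELECT @Before = [cntr_value]
FROM sys.dm_os_performance_counters
WHERE [object_name] LIKE N'%SQL Statistics%'
  AND [counter_name] = N'Batch Requests/sec';

WAITFOR DELAY '00:00:10'; -- keep in sync with the divisor below

SELECT @After = [cntr_value]
FROM sys.dm_os_performance_counters
WHERE [object_name] LIKE N'%SQL Statistics%'
  AND [counter_name] = N'Batch Requests/sec';

SELECT (@After - @Before) / 10.0 AS [BatchRequestsPerSec];
```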

Additional Considerations

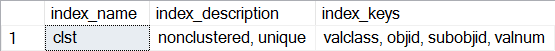

Using In-Memory OLTP requires a filegroup of the MEMORY_OPTIMIZED_DATA type. However, after enabling MEMORY_OPTIMIZED TEMPDB_METADATA, no additional filegroup is created for tempdb. In addition, it’s not known whether the memory-optimized tables are durable (SCHEMA_AND_DATA) or not (SCHEMA_ONLY). Typically this can be determined via sys.tables (durability_desc), but nothing is returned for the involved system tables when querying this in tempdb, even when using the Dedicated Administrator Connection. You can, however, view the nonclustered indexes for the memory-optimized tables. You can use the following query to see which tables are memory-optimized in tempdb:

SELECT *
FROM tempdb.sys.dm_db_xtp_object_stats x
JOIN tempdb.sys.objects o
    ON x.object_id = o.object_id
JOIN tempdb.sys.schemas s
    ON o.schema_id = s.schema_id;

Then, for any of the tables, run sp_helpindex, for example:

EXEC sys.sp_helpindex N'sys.sysobjvalues';

Note that if it’s a hash index (which requires estimating the BUCKET_COUNT as part of creation), the description would include “nonclustered hash.”

Great first test, Erin.

I'd love to try this on a heavily loaded server and do A/B.

But I'm wondering: once you do in-memory you can't turn it off, right?

So, then TempDB will be in-memory forever, unless you reinstall SQL.

George-

You can turn it off, it just requires restarting the instance to take effect. That's in the documentation, but I can update the post to make that clear.

Erin

I guess this means better and better is good, but I'd rather be able to create tempdb files for groups/users to control their i/o. Like priority jobs running on local tempdb files on ssd, user experiences running on remote tempdb files on SAN.