Migrating an on-premises SQL Server instance to an Azure Virtual Machine (VM) is a common method to migrate to Azure. IT professionals are familiar with scoping the size of VMs with regards to vCPU, memory, and storage capacity. Microsoft offers multiple VM types and sizes for an organization’s needs. You’ll see the types referenced as Family in the Azure Portal when sizing a VM.

VM Types and Sizes

Microsoft has helped simplify things by creating multiple types of virtual machines. The types are geared toward defining the machines by purpose. The various types are:

- General purpose – Balanced CPU-to-memory ratio, small to medium databases

- Compute optimized – High CPU-to-memory ration, medium traffic web servers and application servers

- Memory optimized – High memory-to-CPU ratio, relational database servers, medium to large caches, and in-memory analytics

- Storage optimized – High disk throughput and IO

- GPU – Heavy graphic rendering and video editing

- High performance compute – Fastest and most powerful CPU virtual machines

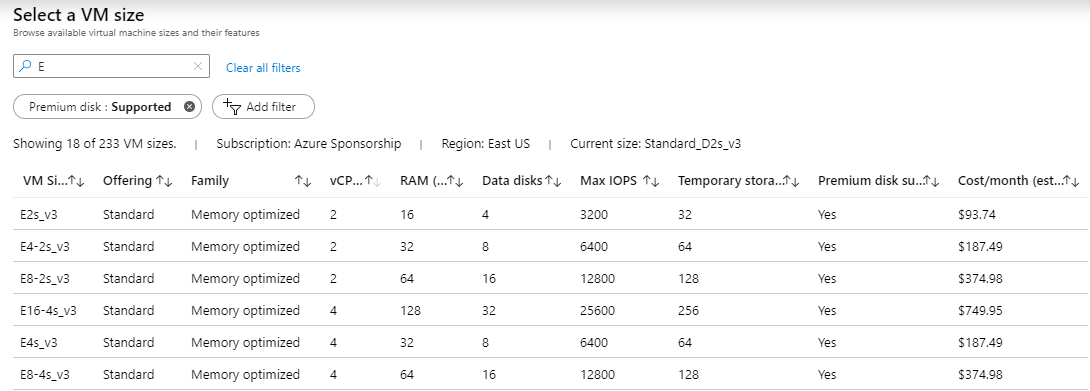

Within each type/family there are numerous sizes to select from. The sizes offer you options for the number of vCPUs, RAM, and data disks. The number of data disks will determine maximum IOPS (IOPS stands for input/output operations per second.) and the overall size will determine the amount of temporary storage available. Certain sizes also support premium storage, which should be a requirement for a production SQL Server.

VM Image Options

When selecting an image for SQL Server, you have several options. You can choose to select just the OS, such as Linux or Windows, or you can choose to select an image with the OS and SQL Server already installed. Currently I prefer to choose the image with just the OS so that I can install SQL Server and configure it the way I like. When you choose the gallery image with SQL Server preinstalled, all components that are included in the ISO for that version are installed, and I don’t always need Integration Services or Analysis Services installed.

VM Sizing Considerations

Selecting an Azure VM may seem straight forward, with choosing a size based on the number of vCPU, memory, and enough storage to hold your databases, however I am seeing customers having performance issues related to storage. The common trend is to choose a VM based exclusively on vCPU, memory, and storage capacity without benchmarking the current IO and throughput requirements. When you create an Azure VM in the Azure Portal, you can filter the options based on the following:

- Size

- Generation

- Family

- RAM/memory

- Premium storage support

- Number of vCPU

- Ephemeral OS disk

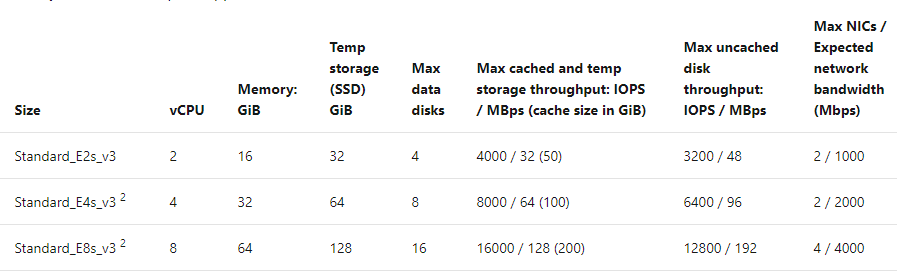

Once you select your filter options, if any, you will see a list of available servers. In the list it displays the VM Size, offering, family, vCPU, RAM, number of data disk supported, max IOPS, temporary storage (D:), if premium disk is supported, and estimated cost. I’ve filtered the following on premium disk supported and size starting with the letter E for memory optimized.

What is not displayed, however, is the amount of throughput allowed per VM. Throughput measures the data transfer rate to and from the storage media in megabytes per second.

Throughput can be calculated using the following formula

Using this formula, KB per IO would be your block size. If you are formatting your data and log drives at 64k, then the formula for the E2s_v3, E4-2s_v3, and E8-2s_v3 VMs with 3,200, 6,400, and 12,800 IOPS would be:

MB/s = 6,400 * 64/1,024 or 400 MB/s

MB/s = 12,800 * 64/1,024 or 800 MB/s

The throughput calculations based on the IOPS of each VM with a 64k block size aren’t too bad. These numbers don’t take into consideration any write penalties for RAID. I put each of these VMs to a test using CrystalDiskMark. The numbers I got for throughput were nowhere near what the calculations were estimating.

Benchmark Test

I provisioned three virtual machines of the same family, however different sizes, and each with 2 vCPU. The specifications of the virtual machines were:

- E2s_v3 – 2 vCPU – 16GB RAM – 3,200 IOPS – Support up to 4 data disks

- E4-2s_v3 – 2 vCPU – 32GB RAM – 6,400 IOPS – Support up to 8 data disks

- E8-2s_v3 – 2 vCPU – 64GB RAM – 12,800 IOPS – Support up to 16 data disks

- P60 data disk – Premium SSD – 16,000 IOPS

On each VM, I provisioned a P60 premium disk at 8TB capacity. This disk advertised 16,000 IOPS which with a 64k block size could support 1,000 MBps throughput, however Azure documentation states the disk provides 500 MBps throughput.

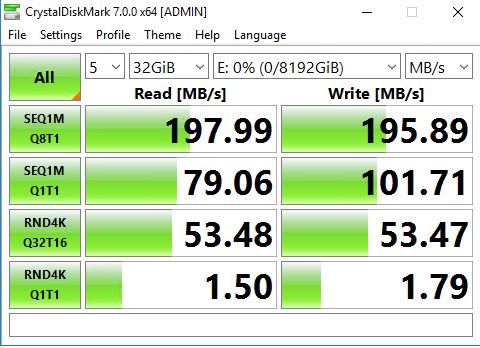

CrystalDiskMark is an open source disk drive benchmark tool for Windows, and it’s based-on Microsoft’s Diskspd tool. The tool measures sequential and random performance of reads and writes.

Across the top of the tool are some drop downs. The number 5 is the number of iterations of the test that will be run. Next is 1GiB, this is the size of the test file. For a real production test, this should be large enough to help avoid hitting cache. Version 7.0 supports up to a 64 GiB file. Last is the drive that you want to perform the test against.

Once you’ve made your selection, you can click All to begin the series of test. The test will loop through various sequential and random read/write operations. Use caution if you plan to benchmark real production servers. This test puts a load on your disk and could drastically impact a production workload. After hours or during a maintenance window would be preferred.

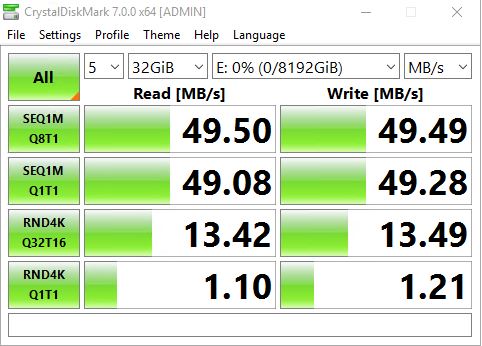

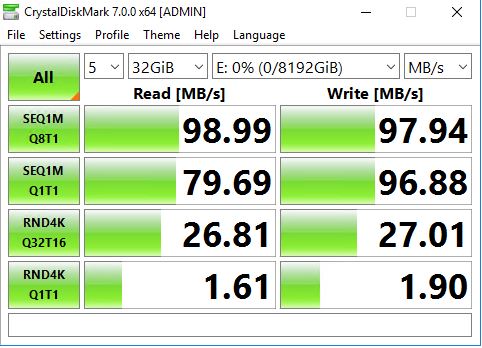

I opted to run 5 iterations of the test with a 32 GiB file on the P60 disk which was drive E:.

The E2s_v3 VM maxed out under 50 MBps, which is way less than the 200MB that we calculated.

The E4-2s_v3 VM maxed out under 100 MBps rather than 400 MBps.

The E8-2s_v3 VM maxed out under 200 MBps rather than the estimated 800 MBps.

Why Lower Throughput?

Based upon my earlier calculations, 3,200 IOPS at 64k block size should produce 200MB throughput, yet I didn’t see numbers close to that until I had a 16,000 IOPS disk on a VM that supports up to 12,800 IOPS. The reasoning is that each VM size has a limit for throughput. If you look at the documentation for the memory optimized family of VMs, you’ll find that the E2s max uncached disk throughput is 3,200 IOPS /48 MBps, E4s max uncached disk throughput is 6,400 IOPS / 96 MBps, and the E8s max uncached disk throughput is 12,800 IOPS / 192 MBps. These numbers coincide with the results I obtained using CrystalDiskMark.

Even though you can allocate very large disks that have plenty of IOPS and that support high throughput numbers, the VM size could very well be limiting your throughput.

Benchmarking your current throughput needs should be a big priority before migrating any SQL Server workload to Azure.

Conclusion

I understand that IOPS is a unit of measurement that disk manufacturers and storage vendors provide, and that is ok. However, when it comes to testing storage, we tend to focus more on throughput numbers. It is just a math problem, but unless you are in the business of benchmarking storage and doing the calculations from IOPS to throughput based on block size, it can be confusing.

What is troubling to me is the fact that the restriction on throughput is not clear when you select a VM size. The unit of measurement for storage IO is IOPS. At 3,200 IOPS with a 64k block size, I could be around 200 MBps however my VM was limited to 48 MBps. Many IT professionals have discovered they have disk performance issues and scaled their storage to larger and faster disk (more IOPS) expecting better performance, only to find that it didn’t solve their problem. The issue is the size of the VM was limiting their throughput. Scaling up to a higher size VM would solve the issue, but that comes with a cost. In my example, the E4 was twice the cost of the E2, however it doubled the memory and throughput, while retaining the same vCPU. The E8 was double the cost of the E4 and doubled the throughput and memory, while retaining the same vCPU. Maintaining the same vCPU count means I wouldn’t have an increase in core SQL Server license cost.

Ultimately, you need to benchmark your current throughput requirements and make sure your sizing the Azure VM, or any VM appropriately for your needs. Don’t just focus on a benchmark of your local storage, analyze what your workload needs and size accordingly.