Narrowing the gap between serverless and its state with storage functions

The Morning Paper

JANUARY 28, 2020

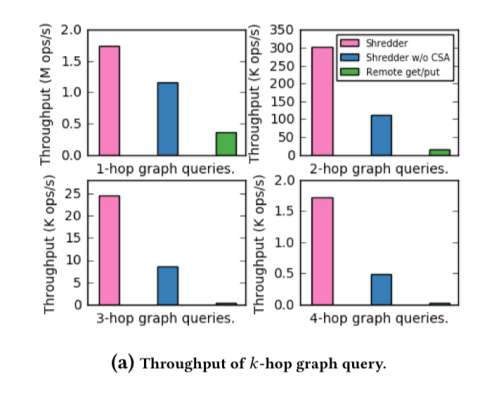

Narrowing the gap between serverless and its state with storage functions, Zhang et al., SoCC’19. Shredder is "a low-latency multi-tenant cloud store that allows small units of computation to be performed directly within storage nodes." Shredder’s implementation is built on top of Seastar.
