If you’re wondering what a service mesh is and whether you would benefit from having one, you likely have a mature Kubernetes environment running large cloud-native applications. As monolithic applications have given way to cloud-connected microservices that perform distinct functions, containerized environments, such as the Kubernetes platform, have become the framework of choice.

Kubernetes orchestrates ready-to-run software packages (containers) in pods, which are hosted on nodes (compute instances) organized in clusters. This modular microservices-based approach to computing decouples applications from the underlying infrastructure to provide greater flexibility and durability while enabling developers to build and update these applications faster and with less risk.

But that modularity and flexibility also bring complexity and challenges as applications grow to include many microservices that need to communicate with each other. This becomes even more challenging when the application receives heavy traffic because a single microservice might become overwhelmed if it receives too many requests too quickly. A service mesh can solve these problems, but it can also introduce its own issues. Here’s what you need to know about service meshes and how to overcome their challenges.

What is a service mesh?

A service mesh is a dedicated infrastructure layer built into an application that controls service-to-service communication in a microservices architecture. It controls the delivery of service requests to other services, performs load balancing, encrypts data, and discovers other services.

Although you can code the logic that governs communication directly into the microservices, a service mesh abstracts that logic into a parallel layer of infrastructure using a proxy called a sidecar, which runs alongside each service.

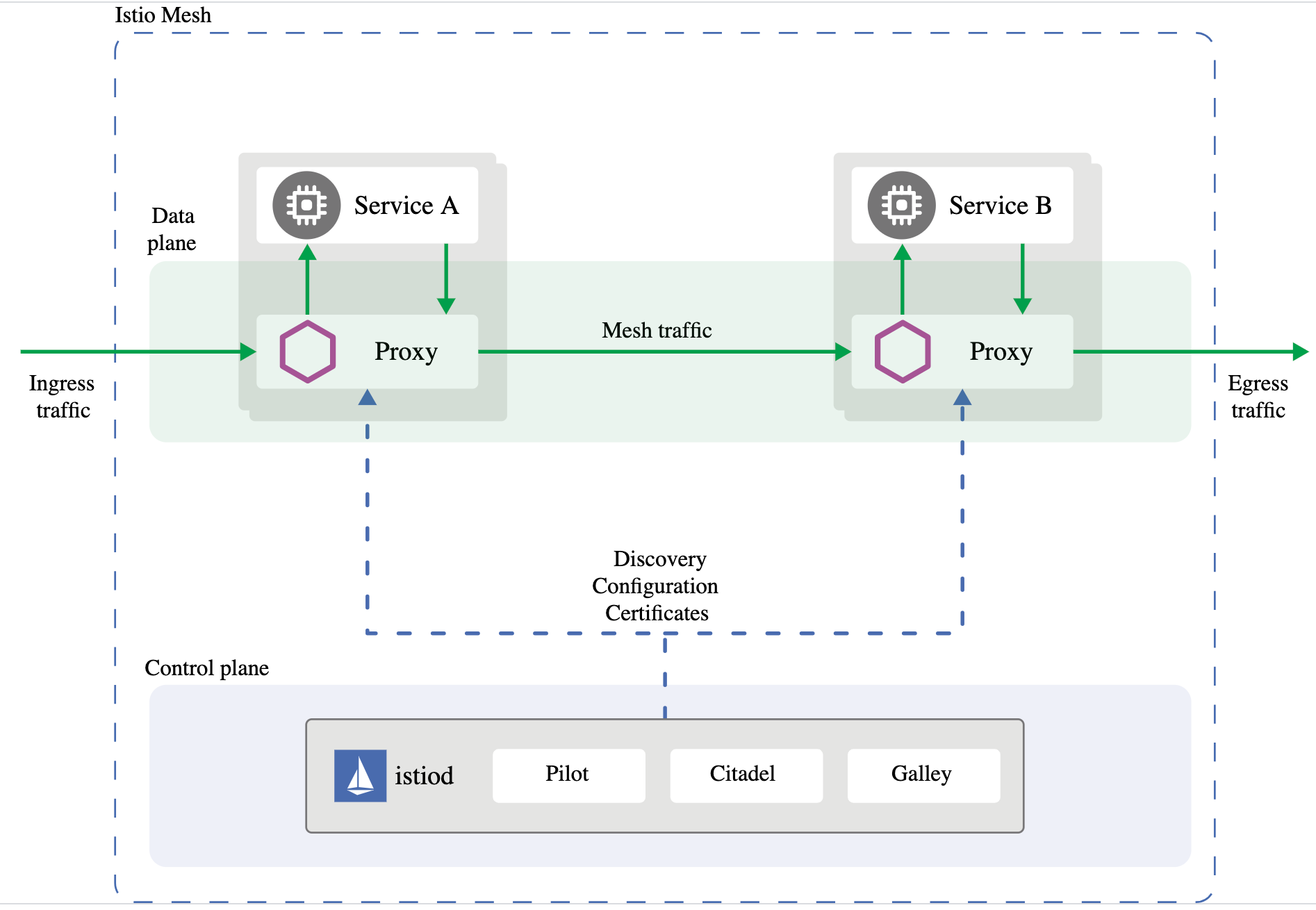

Sidecar proxies make up a service mesh’s data plane, which manages the exchange of data between services. Management processes make up the control plane, which coordinates the proxies’ behavior. The control plane also provides an API so operators can easily manage traffic control, network resiliency, security and authentication, and custom telemetry data for each service.
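To make the split between the two planes concrete, here is a minimal Python sketch. It is a toy model, not how any real mesh is implemented: the `SidecarProxy` and `ControlPlane` classes, the service names, and the endpoint addresses are all hypothetical, and round-robin is just one possible load-balancing policy.

```python
from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class SidecarProxy:
    """Toy data-plane proxy: picks endpoints using routes pushed by the control plane."""
    service: str
    routes: dict = field(default_factory=dict)    # destination name -> list of endpoints
    _cursors: dict = field(default_factory=dict)  # destination name -> round-robin iterator

    def apply_config(self, routes):
        # Replace the routing table and reset the round-robin state.
        self.routes = {name: list(eps) for name, eps in routes.items()}
        self._cursors = {name: cycle(eps) for name, eps in self.routes.items()}

    def pick_endpoint(self, destination):
        # Service discovery plus load balancing: return the next endpoint in turn.
        if destination not in self._cursors or not self.routes[destination]:
            raise LookupError(f"no known endpoints for {destination!r}")
        return next(self._cursors[destination])


class ControlPlane:
    """Toy control plane: holds the service registry and configures every proxy."""

    def __init__(self):
        self.registry = {}
        self.proxies = []

    def attach(self, proxy):
        self.proxies.append(proxy)
        proxy.apply_config(self.registry)

    def register(self, service, endpoints):
        # A registry change is pushed to all attached proxies.
        self.registry[service] = list(endpoints)
        for proxy in self.proxies:
            proxy.apply_config(self.registry)


control_plane = ControlPlane()
proxy_a = SidecarProxy("service-a")
control_plane.attach(proxy_a)
control_plane.register("service-b", ["10.0.0.1:8080", "10.0.0.2:8080"])

picks = [proxy_a.pick_endpoint("service-b") for _ in range(3)]
print(picks)
```

The service code never sees the registry or the balancing policy; it only asks its local proxy for a destination, which is the abstraction the sidecar pattern provides.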

Why do you need a service mesh?

In general, your organization can benefit from a service mesh if you have large-scale applications composed of many microservices. As application traffic grows, the number of requests between these services can grow rapidly, requiring sophisticated routing capabilities to optimize the flow of data between the services and ensure the application continues to perform at a high level.

From a secure communications standpoint, service meshes are useful for managing the mutual TLS (mTLS) connections between services, in which both sides of a connection authenticate each other with certificates.
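As a rough illustration of what a mesh takes off your hands, the following Python sketch builds a server-side TLS context that also requires a client certificate, using only the standard `ssl` module. The function name and its file-path parameters are hypothetical, and a real mesh would additionally issue and rotate the certificates for you.

```python
import ssl


def build_mtls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Return a server-side TLS context that also demands a client certificate."""
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # The "mutual" part: reject any client that does not present a valid certificate.
    context.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        # CA bundle used to verify client certificates (hypothetical path).
        context.load_verify_locations(cafile=ca_file)
    if cert_file and key_file:
        # This service's own identity (hypothetical paths).
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context


# No files are loaded here, so this only demonstrates the policy settings.
context = build_mtls_server_context()
print(context.verify_mode)
```

Without a mesh, every service would need equivalent setup code plus a way to distribute certificates; with a mesh, the sidecar proxies terminate and originate these connections instead.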

Because service meshes manage the communication layer, they allow developers to focus on adding business value with each service they build, rather than worrying about how each service communicates with all other services.

For DevOps teams that have an established production CI/CD pipeline, a service mesh can be essential for programmatically deploying apps and application infrastructure (Kubernetes) alongside the source code management and test automation tools they already use, such as Git, Jenkins, Artifactory, or Selenium. A service mesh enables DevOps teams to manage their networking and security policies through code.

How service meshes work: The Istio example

Istio is a popular Kubernetes-native mesh developed by Google, IBM, and Lyft that helps manage deployments, builds resilience, and improves security in Kubernetes. Istio works with related open-source projects like Envoy, a high-performance proxy that mediates all inbound and outbound service traffic, and Jaeger, a distributed tracing tool with a simple UI for storing and visualizing traces that developers can use to debug their microservices.

The Istio data plane shown in green in the graphic below uses Envoy proxies deployed as sidecars, which control all communication between microservices (service A and service B). The Envoy proxies also collect and report telemetry on all traffic among the services in the mesh.

The control plane routes traffic within the mesh by managing and configuring the proxies based on policies that have been defined for that application.

Istio generates telemetry data in the form of metrics, logs, and traces for all the services within the mesh. This telemetry helps organizations observe, troubleshoot problems, and track the performance of their applications on a service-by-service basis.
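The kind of per-service metrics a proxy can collect might be sketched as follows. This is an illustrative Python model only: the `ProxyTelemetry` class and its field names are hypothetical and do not correspond to Istio's actual metric names or export format.

```python
import time
from collections import defaultdict


class ProxyTelemetry:
    """Toy per-destination metrics, recorded by the proxy rather than by the service."""

    def __init__(self):
        self.requests = defaultdict(int)
        self.errors = defaultdict(int)
        self.latency_seconds = defaultdict(float)

    def observe(self, destination, handler, *args):
        # Time the call and count it, whether it succeeds or raises.
        start = time.perf_counter()
        try:
            return handler(*args)
        except Exception:
            self.errors[destination] += 1
            raise
        finally:
            self.requests[destination] += 1
            self.latency_seconds[destination] += time.perf_counter() - start


def failing_call():
    raise RuntimeError("simulated upstream failure")


telemetry = ProxyTelemetry()
telemetry.observe("service-b", lambda x: x * 2, 21)
try:
    telemetry.observe("service-b", failing_call)
except RuntimeError:
    pass

print(telemetry.requests["service-b"], telemetry.errors["service-b"])
```

Because the measurements are taken around the call rather than inside it, they describe traffic between services but say nothing about what happens within a service.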

A service mesh helps organizations run microservices at scale by providing:

- A more flexible release process (for example, support for canary deployments, A/B testing, and blue/green deployments)

- Availability and resilience (for example, setup retries, failovers, circuit breakers, and fault injection)

- Secure communications (authentication, authorization, and encryption of communication with and among services)
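The canary deployments mentioned in the list above rely on weighted routing: a small share of traffic goes to the new version while the rest stays on the stable one. Here is a minimal Python sketch of that idea, assuming a hypothetical `choose_version` helper and made-up version names and weights; a real mesh expresses the same split in its routing configuration rather than in application code.

```python
import random
from collections import Counter


def choose_version(weights, rng):
    """Pick a service version with probability proportional to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Hypothetical split: 90% of requests to the stable version, 10% to the canary.
weights = {"v1": 90, "v2-canary": 10}
rng = random.Random(42)  # seeded so repeated runs give the same result

total = 10_000
counts = Counter(choose_version(weights, rng) for _ in range(total))
canary_share = counts["v2-canary"] / total
print(f"canary share: {canary_share:.3f}")
```

Promoting the canary is then a matter of shifting the weights step by step, with no change to the services themselves.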

Benefits and challenges

Service mesh has emerged as a robust way to manage the complexity of microservices-based applications. While it brings many benefits, it also introduces challenges that organizations must navigate.

Benefits of service mesh:

- Centralized Traffic Management: A service mesh allows for fine-grained control over communication between services, including advanced routing capabilities, retries, and failovers. This can be crucial in ensuring high availability and resilience.

- Security at Scale: Security is paramount in microservices architecture, and service mesh addresses this by providing a uniform layer for implementing security measures like encryption, authentication, and authorization. It ensures that communication between services remains secure without burdening individual services with security concerns.

- Resilience and Fault Tolerance: Service mesh introduces capabilities for implementing circuit breaking, retries, and timeouts, promoting resilience in the face of failures. It enables applications to gracefully handle faults, preventing cascading failures and ensuring optimal user experiences.

- Enhanced Observability: Service mesh provides unparalleled visibility into the interactions between microservices. With features like distributed tracing and monitoring, organizations can gain insights into the performance and behavior of their applications, facilitating efficient troubleshooting and optimization.
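Circuit breaking, listed among the resilience features above, can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the `CircuitBreaker` class, its thresholds, and the `flaky` function are hypothetical, and production proxies use richer policies such as outlier detection per endpoint.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker rejects a call without trying the upstream."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # cool-down in seconds
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed: allow one trial call (half-open state).
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # a success closes the circuit fully
        return result


def flaky():
    raise ConnectionError("upstream unavailable")


breaker = CircuitBreaker(max_failures=2, reset_after=60.0)
outcomes = []
for _ in range(3):
    try:
        breaker.call(flaky)
    except CircuitOpenError:
        outcomes.append("rejected")
    except ConnectionError:
        outcomes.append("failed")

print(outcomes)  # ['failed', 'failed', 'rejected']
```

After two consecutive failures the third call is rejected immediately, which is how a breaker keeps a struggling service from being flooded and limits cascading failures.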

Challenges of service mesh:

- Increased Complexity: Introducing service mesh can add a layer of complexity to the architecture. Organizations must invest time and effort in understanding, implementing, and maintaining the mesh, potentially impacting the agility of development teams.

- Performance Overhead: While service mesh provides invaluable features, it comes with a performance cost. The additional layer of proxies handling communication can introduce latency, impacting the application’s overall performance. Organizations must carefully balance the benefits against this overhead.

- Steep Learning Curve: Adopting a service mesh involves a steep learning curve for development and operations teams. Understanding the intricacies of the mesh, configuring policies, and troubleshooting issues all demand expertise, which means investing in training and upskilling.

- Vendor Lock-in: Some service meshes are tightly coupled with specific platforms or technologies. Choosing an open source, vendor-neutral solution can mitigate this risk.

Observability challenges of service mesh

While service meshes such as Istio are great for managing deployments and providing resilience and security, the troubleshooting and performance telemetry they provide is limited to the communications between the services they manage. This means you cannot get an end-to-end view of every service a transaction may touch outside the context of the Kubernetes environment.

Getting distributed traces from Istio or other service meshes requires manual code changes in every service the mesh interacts with, typically so that each service passes trace context from its incoming requests to its outgoing ones. But even with manual code changes, you don’t get details about what’s happening inside the service. And if you don’t own the code for a specific service, you lose end-to-end visibility.
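A typical example of such a manual change is copying trace headers from each incoming request onto the outgoing requests it triggers, so the proxies can stitch spans into one trace. The Python sketch below assumes the commonly used B3 and W3C Trace Context header names plus `x-request-id`; the exact set depends on your mesh and tracing backend, and the `propagate_trace_headers` helper is hypothetical.

```python
# Assumed header names (B3 and W3C Trace Context conventions); check your
# mesh's documentation for the exact list it expects.
TRACE_HEADERS = (
    "x-request-id",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
    "x-b3-flags",
    "traceparent",
    "tracestate",
)


def propagate_trace_headers(incoming_headers):
    """Return only the trace headers that should be copied to outgoing requests."""
    # HTTP header names are case-insensitive, so normalize before matching.
    lowered = {name.lower(): value for name, value in incoming_headers.items()}
    return {name: lowered[name] for name in TRACE_HEADERS if name in lowered}


incoming = {
    "X-Request-Id": "abc-123",
    "X-B3-TraceId": "463ac35c9f6413ad",
    "Content-Type": "application/json",
}
outgoing = propagate_trace_headers(incoming)
print(outgoing)  # {'x-request-id': 'abc-123', 'x-b3-traceid': '463ac35c9f6413ad'}
```

Every service in the call chain needs an equivalent step in its own language and HTTP client, which is why this approach becomes costly across many teams and impossible for services whose code you do not control.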

Unless you spend significant time and effort to build custom logging features, your organization can be blind in a sea of Kubernetes nodes. Even worse, you may be left guessing and prodding your system by trial and error to find the root cause of a performance issue or other problem.

How to solve the end-to-end observability issues of service mesh

Gaining situational awareness into a dynamic microservices environment in a way that doesn’t place additional strain on your DevOps team requires an approach that is easy to implement and understand. Dynatrace’s automatic, AI-driven approach gives you out-of-the-box insights into your microservices, their dependencies, the service mesh, and your underlying infrastructure with full end-to-end observability and precise problem identification and root cause analysis.

By deploying OneAgent, Davis AI can automatically analyze data from your service mesh and microservices in real time, understanding billions of relationships and dependencies to pinpoint the root cause of bottlenecks and provide your DevOps team with a clear path to remediation.

With no configuration or additional coding required, Dynatrace enables you to see all the details of each distributed trace through the service mesh, including HTTP methods, response codes, and timing details. This approach demystifies the service mesh so you can easily investigate what happens upstream and downstream in the context of a single end-to-end transaction.

Dynatrace has prebuilt integrations with the most popular service meshes, including Istio and HashiCorp Consul.

Watch our webinar

To learn more about how Dynatrace AI and automation can unlock the complexity of your Kubernetes environment, join us for an on-demand demonstration, Advanced Observability for Kubernetes Infrastructure and Microservices.
