In this article, we will explore the Bulk Copy Program tool that is also known as the SQL Server BCP tool. BCP allows us to export data into particular flat-file formats from SQL Server, and it also enables us to transfer data between different SQL Server instances or into the SQL Azure.

Introduction

Either exporting or importing the data is among the most needed operations of the database persons. In order to achieve this operation on SQL Server, we have various tool alternatives. Such as SQL Server Integration Services (SSIS), SQL Server Management Studio (SSMS), OPENROWSET function, and BCP are the first options that come to our minds. SQL Server BCP is a very simple command-line tool that exports table data into flat files from SQL Server, or it can import data into the database tables. However, when we want to use the BCP for the giant data transferring operations we might face some performance problems. For this reason, in the next sections of this article, we will mainly focus on how to improve the performance of the data transferring operations for the tables which have a huge number of rows.

Pre-requirements

For the examples in this article, we will create a table and populate it with 100 million rows. The following script creates the SalesPerson table and we can also use ApexSQL Generate to generate 100 million test data.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

CREATE TABLE [dbo].[SalesPerson]( [SalesPerson_Id] [int] IDENTITY(1,1) NOT NULL, [First_name] [varchar](50) NOT NULL, [Last_name] [varchar](50) NOT NULL, [Email] [varchar](255) NOT NULL, [Phone] [varchar](25) NULL, [Active] [tinyint] NOT NULL, [Store_id] [int] NOT NULL, [Manager_id] [int] NOT NULL, PRIMARY KEY CLUSTERED ( [SalesPerson_Id] ASC )) |

On the other hand, we will use the Adventureworks sample database for the first look examples.

A first look at BCP

As we stated before, the BCP is a command-line tool and as a first step, we need to check the installed version of this tool. The bcp /v command gives the version information of this utility.

If the installed version is older than the last version, we can download and install the latest version from the Microsoft website. The main capability of the SQL Server BCP is not much complex because it can only run with several arguments. The syntax of the BCP is like below:

bcp {table|view|”query”} {out|queryout|in|format} {data_file|nul} {[optional_argument]…}

For example, if we want to export any data of a table to a text file, we have to specify the table name, the out option, and the data file. The following command will export the Production table into the specified text file.

bcp AdventureWorks2017.Production.Product out C:\ExportedData\Product.txt -S localhost -T –w

In the above script, we have also used some additional parameters:

-S: Server Name

-T: Use the trusted connection

-w: Performs the bulk copy operation using Unicode characters

After the exporting operation, the text file will look as below:

At the same time, we can export result sets of the queries through the queryout parameter so that we can filter the data or join the tables before exporting operation.

bcp ” SELECT p.[FirstName] ,p.[LastName],e.BirthDate FROM AdventureWorks2017.[HumanResources].[Employee] e INNER JOIN AdventureWorks2017.[Person].[Person] p ON p.[BusinessEntityID] = e.[BusinessEntityID] WHERE e.BirthDate > ‘19800101’” queryout C:\ExportedData\QueryData.txt -S localhost -T -w

Using the BCP to import data into the SQL Azure

We can use BCP to import data into SQL Azure. The following command will import the Production table text data into the SQL Azure. The only change is to use in the argument and it specifies copy the data from a file into the database table.

bcp TestDB.dbo.Product in C:\ExportedData\Product.txt -S tcp:esat1.database.windows.net –U username –P password -w

The imported data can be seen in the query editor of SQL Azure.

Use the SQL Server BCP to export big tables data

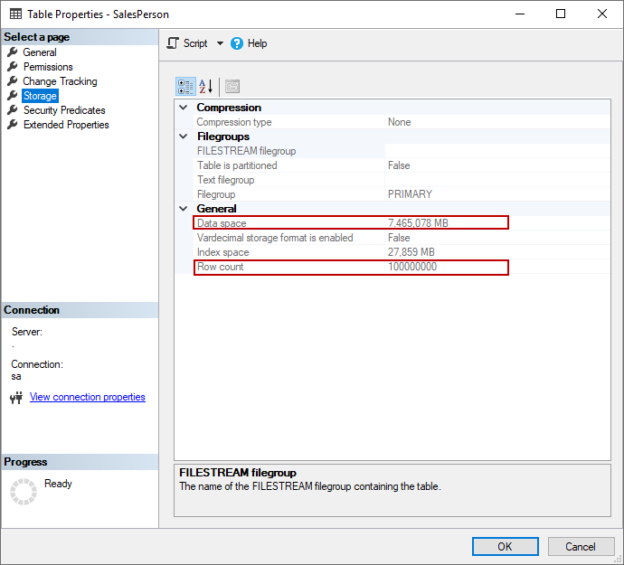

In this example, we will export the SalesPerson table data into a text file. This table includes 100 million rows and it’s size is about 7.5 GB.

In our first testing, we will run the SQL Server BCP with default values in order to export 100 M rows.

bcp SalesTest.dbo.SalesPerson out C:\ExportedData\SalesPerson.txt -S localhost -T -w

As we can see in the above image, the export operation has completed about 588.079 seconds and it has exported 170.045 rows per second. The network packet size configuration allows us to specify how much bytes data is sent out by the SQL Server. The –a parameter changes the packet size individually for the bcp data transfer session and might help to increase the performance of data transfer operations. The default packet size is 4096 bytes and we will increase this number to 32.728 bytes and it will affect the performance of the data exporting positively.

bcp SalesTest.dbo.SalesPerson out C:\ExportedData\SalesPerson.txt -S localhost -T -w -a 32768

After changing the packet size parameter of the BCP the data transfer duration has decreased and the number of rows transferred per second has increased so increasing the packet size may have an improvement in data transfer. As a last, using the fast disk systems for the exported file location, changing the packet size parameter of the BCP, and using the fast NIC card will improve the export performance.

Use the SQL Server BCP to import a huge amount of data into tables

In general, performance bottlenecks are experienced related to BCP during the importing of external data into the SQL tables. Under the full recovery model, the first thing, we need to consider is the workload that will occur on the log file during the data importing operation because if we don’t determine any batch size for the data import operation, the whole operation will be done in a big single transaction. In this circumstance, all imported data will be fully written into the transaction log file of the database.

Before starting the first testing with BCP, we will take a look at the log file size of the database and then we will start the data import operation for 100 M rows.

|

1 2 3 |

SELECT CAST(ROUND((total_log_size_in_bytes)*1.0/1024/1024,2,2) AS FLOAT) AS [Total Log Size] FROM sys.dm_db_log_space_usage; |

bcp Sales.dbo.SalesPerson in C:\ExportedData\SalesPerson.txt -S localhost -T –w

After the completion of the data import operation, the log file size has reached 27.144 megabytes.

|

1 2 3 |

SELECT CAST(ROUND((total_log_size_in_bytes)*1.0/1024/1024,2,2) AS FLOAT) AS [Total Log Size] FROM sys.dm_db_log_space_usage; |

On the other hand, using the simple recovery model or bulk-logged recovery model may enable the minimal logging mode. For SQL Server to enable the minimum logging option, the target table must meet the following conditions:

- The table is not being replicated

- The table is not memory-optimized

In addition to these conditions, we need to use the TABLOCK hint in the BCP command, and also the table must be empty if it includes a clustered index.

After changing the database recovery model to bulk-logged, we drop and re-create the target table and re-execute the following bcp command with the TABLOCK hint.

bcp Sales.dbo.SalesPerson in C:\ExportedData\SalesPerson.txt -S localhost -T -w -h”TABLOCK”

After the completion of the data import operation, the log file size has only reached 200 megabytes.

In this test, we have realized that SQL Server minimizes the log file activity and it increases the performance of the bulk copy operation.

Use the native data format to import or export data with SQL Server bcp

Microsoft recommends using native data formats when the data will be transferred between two SQL Server instances for the identical tables if the table data does not contain any extended/double-byte character set (DBCS) characters. Thus, SQL Server avoids unnecessary conversion of data types to and from character format. To use native data format in BCP, we need to replace the –w parameter with the –n parameter.

bcp Sales.dbo.SalesPerson in C:\ExportedData\SalesPerson.txt -S localhost -T -n -h”TABLOCK”

After using the native data format in BCP, the data import performance has boosted because the unnecessary data conversions are eliminated.

The following chart shows how the minimal logging mode and using the native data format option affect the data importing performance.

Conclusion

In this article, we have explored the SQL Server BCP tool and also we have focused on how to improve its performance with some changes. Minimal logging mode and using the native formats dramatically increase the performance of the BCP. At the same time, the packet size parameter of the BCP can affect the performance of the data transfer performance of the BCP.

- SQL Performance Tuning tips for newbies - April 15, 2024

- SQL Unit Testing reference guide for beginners - August 11, 2023

- SQL Cheat Sheet for Newbies - February 21, 2023