In the recent MySQL 8.0.16 release there is a new variable for the InnoDB storage engine called innodb_spin_wait_pause_multiplier described as providing “greater control over the duration of spin-lock polling delays that occur when a thread waits to acquire a mutex or rw-lock” and “delays can be tuned more finely to account for differences in PAUSE instruction duration on different processor architectures”

This post aims to address what this about and whether you really need to be concerned about the difference in instructions on the different processor architectures for MySQL by testing the performance with HammerDB. Note that the main developer of HammerDB is Intel employee (#IAMINTEL) however HammerDB is a personal open source project and HammerDB has no optimization whatsoever for a database running on any particular architecture. Fortunately the HammerDB TPC-C/OLTP workload intentionally has a great deal of contention between threads and is therefore ideal for testing spin-locks.

So the PAUSE instruction is an optimization over NOP when a thread is waiting to acquire a lock and is particularly important in spin-locks on x86 CPUs for power and performance. However in the Skylake microarchitecture (you can see a list of CPUs here) the PAUSE instruction changed and in the documentation it says “the latency of the PAUSE instruction in prior generation microarchitectures is about 10 cycles, whereas in Skylake microarchitecture it has been extended to as many as 140 cycles.” and “as the PAUSE latency has been increased significantly, workloads that are sensitive to PAUSE latency will suffer some performance loss.” The impact on a database workload is not necessarily straightforward however as it depends on how much time that workload actually spends in spin-locks, fortunately as noted a HammerDB deliberately induces a great deal of contention so is at the top end of workloads that do.

Also it is interesting to note that the impact of this change was not observed in other databases or other MySQL storage engines such as MyRocks the only noticeable impact with HammerDB workloads occurred in MySQL with InnoDB where in the source code ut0ut.cc in the directory storage/innobase/ut the following section has UT_RELAX_CPU defined to call the PAUSE instruction on x86.

for (i = 0; i <delay * 50; i++) {

j += i;

UT_RELAX_CPU();

}

Note that this fixed value of 50 is multiplied by the parameter innodb_spin_wait_delay which has a default of 6 (but selects a value at random up to this value) so could be calling PAUSE up to 300 times. So innodb_spin_wait_delay has always been configurable in recent versions but now from MySQL innodb_spin_wait_pause_multiplier is configurable also rather than requiring modification of the source code to do so. However as noted many factors affect spin-locks including how much time you actually spend in locks in the first place and therefore the best way to really determine how much time you have to spend in fine-tuning spin-locks in the real world can be done through testing.

So to test I took a system with Skylake CPUs and all storage on a P4800X SSD.

model name : Intel(R) Xeon(R) Platinum 8180 CPU @ 2.50GHz

The OS was:

Ubuntu 18.04.1 LTS (GNU/Linux 4.15.0-23-generic x86_64)

I downloaded and installed the pre-built Linux binary from here:

mysql-8.0.16-linux-glibc2.12-x86_64

and set the following my.cnf

[mysqld] datadir=/home/mysql/mysql-8.0.16/mysql-8.0.16-linux-glibc2.12-x86_64/data language=/home/mysql/mysql-8.0.16/mysql-8.0.16-linux-glibc2.12-x86_64/share/english default_authentication_plugin=mysql_native_password socket=/tmp/mysql.sock port=3307 bind_address=127.0.0.1 # general max_connections=4000 table_open_cache=8000 table_open_cache_instances=16 back_log=1500 default_password_lifetime=0 ssl=0 performance_schema=OFF max_prepared_stmt_count=128000 skip_log_bin=1 character_set_server=latin1 collation_server=latin1_swedish_ci transaction_isolation=REPEATABLE-READ # files innodb_file_per_table innodb_log_file_size=1024M innodb_log_files_in_group=32 innodb_open_files=4000 # buffers innodb_buffer_pool_size=64000M innodb_buffer_pool_instances=16 innodb_log_buffer_size=64M # tune innodb_doublewrite=0 innodb_thread_concurrency=0 innodb_flush_log_at_trx_commit=0 innodb_max_dirty_pages_pct=90 innodb_max_dirty_pages_pct_lwm=10 join_buffer_size=32K sort_buffer_size=32K innodb_use_native_aio=1 innodb_stats_persistent=1 innodb_spin_wait_delay=6 innodb_spin_wait_pause_multiplier=50 innodb_max_purge_lag_delay=300000 innodb_max_purge_lag=0 innodb_flush_method=O_DIRECT_NO_FSYNC innodb_checksum_algorithm=none innodb_io_capacity=4000 innodb_io_capacity_max=20000 innodb_lru_scan_depth=9000 innodb_change_buffering=none innodb_read_only=0 innodb_page_cleaners=4 innodb_undo_log_truncate=off # perf special innodb_adaptive_flushing=1 innodb_flush_neighbors=0 innodb_read_io_threads=16 innodb_write_io_threads=16 innodb_purge_threads=4 innodb_adaptive_hash_index=0 # monitoring innodb_monitor_enable='%'

Initially I set doublewrite and flush_log_at_trx_commit and then deleted and rebuilt the schema to re-test with these parameters turned on.

I installed HammerDB 3.1 and used the client library from MySQL 5.7 installed in the home directory

libmysqlclient.so.20

and added this to the library path as follows:

export LD_LIBRARY_PATH=/home/mysql:$LD_LIBRARY_PATH

I created a HammerDB build file and called it using the source command with the hammerdbcli tool.

HammerDB-3.1$ cat innodbbuild400.tcl #!/bin/tclsh puts "SETTING CONFIGURATION" dbset db mysql diset connection mysql_host 127.0.0.1 diset connection mysql_port 3307 diset tpcc mysql_count_ware 400 diset tpcc mysql_partition true diset tpcc mysql_num_vu 64 diset tpcc mysql_storage_engine innodb print dict buildschema

Once the schema was built I shutdown and restarted the database and configured a test script as follows:

HammerDB-3.1$ cat innodbtest1.tcl

#!/bin/tclsh

proc runtimer { seconds } {

set x 0

set timerstop 0

while {!$timerstop} {

incr x

after 1000

if { ![ expr {$x % 60} ] } {

set y [ expr $x / 60 ]

puts "Timer: $y minutes elapsed"

}

update

if { [ vucomplete ] || $x eq $seconds } { set timerstop 1 }

}

return

}

puts "SETTING CONFIGURATION"

dbset db mysql

diset connection mysql_host 127.0.0.1

diset connection mysql_port 3307

diset tpcc mysql_driver timed

diset tpcc mysql_rampup 1

diset tpcc mysql_duration 2

vuset logtotemp 1

loadscript

puts "SEQUENCE STARTED"

foreach z { 1 2 4 8 16 24 32 40 48 56 64 72 80 88} {

puts "$z VU TEST"

vuset vu $z

vucreate

vurun

runtimer 240

vudestroy

after 20000

}

puts "TEST SEQUENCE COMPLETE"

and then ran the test leaving it unattended until it reported the message “TEST SEQUENCE COMPLETE”. In particular note that the parameter innodb_spin_wait_pause_multiplier remained at the default throughout.

HammerDB-3.1$ ./hammerdbcli HammerDB CLI v3.1 Copyright (C) 2003-2018 Steve Shaw Type "help" for a list of commands The xml is well-formed, applying configuration hammerdb>source innodbtest1.tcl

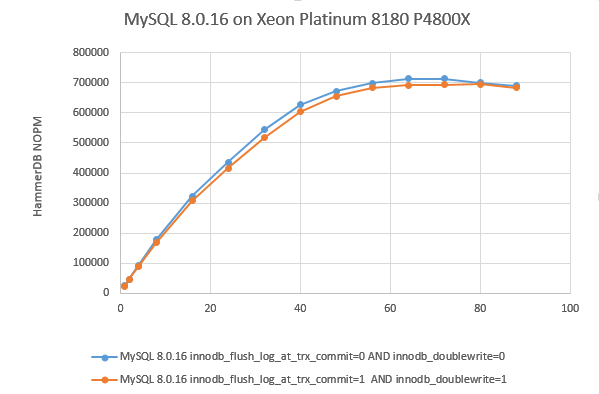

The following was the results in graph form:

with a peak performance of the following:

Vuser 1:64 Active Virtual Users configured Vuser 1:TEST RESULT : System achieved 2159378 MySQL TPM at 713488 NOPM

so we are generating more than 2 million MySQL Transactions per minute and more than 700,000 New Orders per minute without having modified any of the spin-loop parameters.

Now that the parameter is configurable I could also run some experiments:

mysql> show global variables like '%pause%'; +-----------------------------------+-------+ | Variable_name | Value | +-----------------------------------+-------+ | innodb_spin_wait_pause_multiplier | 50 | +-----------------------------------+-------+ 1 row in set (0.00 sec) mysql> set global innodb_spin_wait_pause_multiplier=25; Query OK, 0 rows affected (0.00 sec) mysql> show global variables like '%pause%'; +-----------------------------------+-------+ | Variable_name | Value | +-----------------------------------+-------+ | innodb_spin_wait_pause_multiplier | 25 | +-----------------------------------+-------+ 1 row in set (0.00 sec)

what I found was when reducing innodb_spin_wait_pause_multiplier signficantly to a value such as 5 I could achieve marginally improved performance up to 40 virtual users 643423 NOPM at a value of 5 compared to 626630 NOPM at 50 however beyond this point performance was signficantly lower and at 64 virtual users was only 278909 NOPM. The optimal performance remained at the default.

Further testing involved doubling the number of warehouses with the same results, however not partitioning the schema did bring some benefits in reducing innodb_spin_wait_pause_multiplier to a lower value.

The key finding was remarkably consistent with that of spin-lock configuration on other databases in that in the vast majority of use cases you simply do not have to worry about modifying these parameters from the default regardless of the processor architecture. However it is noted that there are many factors that input into home much time is spent in spin-locks in the first place until these parameters come into play, examples such as the speed of the memory and the storage and configuration of the schema with partitioning as well as the operating system. In particular the number of concurrent users is going to be a significant factor.

Nevertheless if you have an application generating millions of transactions per minute and have the opportunity to test and optimize surely the availability of more parameters to fine tune a database to a particular application is welcome because with each release MySQL is continuing to push the boundaries of performance over previous releases.