Seeing through hardware counters: a journey to threefold performance increase

The Netflix TechBlog

NOVEMBER 9, 2022

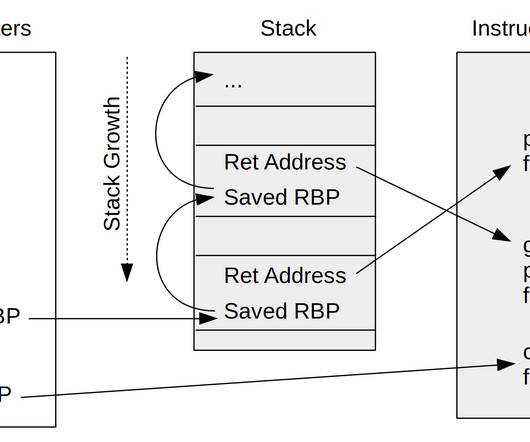

We also see much higher L1 cache activity combined with a 4x higher count of MACHINE_CLEARS. These symptoms are consistent with false sharing, a usage pattern that occurs when two cores read from or write to unrelated variables that happen to share the same L1 cache line. A cache line is a concept similar to a memory page: a contiguous chunk of data that is transferred to and from the cache as a unit. [...] Thread 0's cache in this example.
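To make the "unrelated variables on the same cache line" condition concrete, here is a minimal sketch in Python. It assumes a 64-byte line size (typical on x86, but an assumption here), and the addresses and helper names are made up for illustration. It only shows the address arithmetic that decides whether two variables collide, not the performance effect itself.

```python
CACHE_LINE_BYTES = 64  # assumed line size; typical on x86, not taken from the article


def cache_line_index(address: int, line_bytes: int = CACHE_LINE_BYTES) -> int:
    """Return the index of the cache line that contains the given byte address."""
    return address // line_bytes


def share_cache_line(addr_a: int, addr_b: int, line_bytes: int = CACHE_LINE_BYTES) -> bool:
    """True when two byte addresses fall within the same cache line."""
    return cache_line_index(addr_a, line_bytes) == cache_line_index(addr_b, line_bytes)


# Hypothetical layout: two unrelated 8-byte counters placed back to back.
base = 0x1000          # made-up, line-aligned base address
counter_a = base       # written by thread 0
counter_b = base + 8   # written by thread 1

# Both counters live on the same line, so writes from different cores
# would keep invalidating each other's cached copy of that line.
adjacent_conflict = share_cache_line(counter_a, counter_b)

# Moving the second counter to the next line boundary (padding) separates them.
counter_b_padded = base + CACHE_LINE_BYTES
padded_conflict = share_cache_line(counter_a, counter_b_padded)

print(adjacent_conflict, padded_conflict)
```

In this sketch the adjacent counters map to the same line while the padded one does not, which is the idea behind padding or aligning hot per-thread fields so that each sits on its own cache line.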
