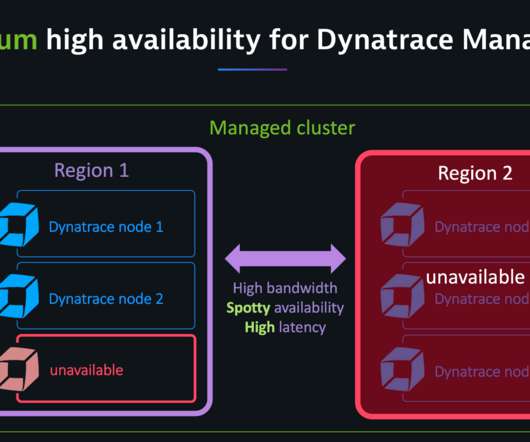

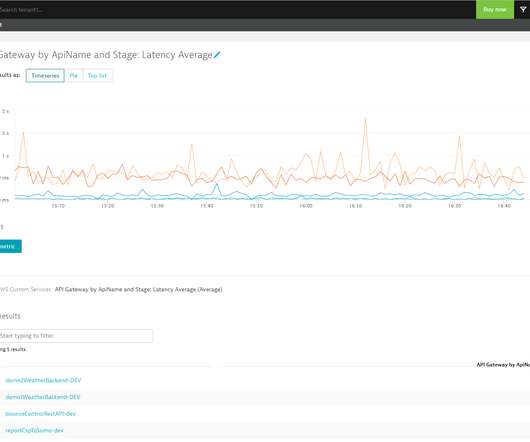

Dynatrace Managed turnkey Premium High Availability for globally distributed data centers (Early Adopter)

Dynatrace

JUNE 25, 2020

Dynatrace Managed is intrinsically highly available as it stores three copies of all events, user sessions, and metrics across its cluster nodes. The network latency between cluster nodes should be around 10 ms or less. Minimized cross-data center network traffic. Dynatrace news.

Let's personalize your content