Seeing through hardware counters: a journey to threefold performance increase

The Netflix TechBlog

NOVEMBER 9, 2022

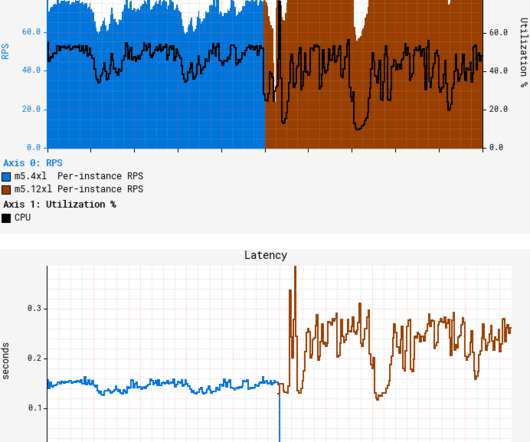

At Netflix, we periodically reevaluate our workloads to optimize utilization of available capacity. We also see much higher L1 cache activity combined with a 4x higher count of MACHINE_CLEARS. This combination is consistent with false sharing, a usage pattern that occurs when 2 cores read from / write to unrelated variables that happen to share the same L1 cache line.
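To make the cache line point concrete, here is a minimal sketch of the address arithmetic behind it. It is not taken from our investigation: the 64-byte line size is an assumption (typical for x86 L1 caches), and the base address, field offsets, and helper names are hypothetical. It only shows how two unrelated 8-byte variables placed back to back land on the same line, and how padding one of them by a full line separates them.

```python
# Assumption: 64-byte cache lines, typical for x86 L1 caches.
CACHE_LINE_BYTES = 64


def cache_line_index(address: int, line_size: int = CACHE_LINE_BYTES) -> int:
    """Return the index of the cache line containing the given byte address."""
    return address // line_size


def share_cache_line(addr_a: int, addr_b: int,
                     line_size: int = CACHE_LINE_BYTES) -> bool:
    """True when both byte addresses fall within the same cache line."""
    return cache_line_index(addr_a, line_size) == cache_line_index(addr_b, line_size)


# Hypothetical layout: two unrelated 8-byte fields stored back to back,
# starting at a line-aligned base address.
base = 0x1000
field_a = base                        # written by one core
field_b = base + 8                    # written by another core
padded_b = base + CACHE_LINE_BYTES    # same field, pushed onto the next line

print(share_cache_line(field_a, field_b))   # True: writes contend for one line
print(share_cache_line(field_a, padded_b))  # False: each field has its own line
```

When the first check is true, every write by one core invalidates the line in the other core's L1 cache, even though the two cores never touch the same variable. Padding or aligning the hot fields, as in the second check, is the usual way to avoid that.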
