The Three Cs: Concatenate, Compress, Cache

CSS Wizardry

OCTOBER 16, 2023

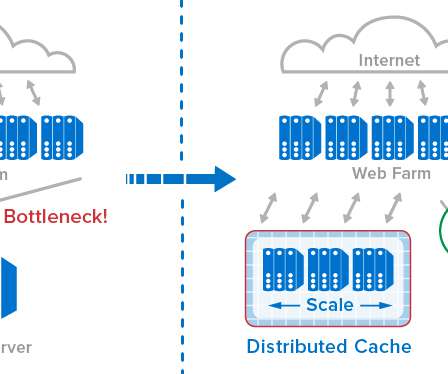

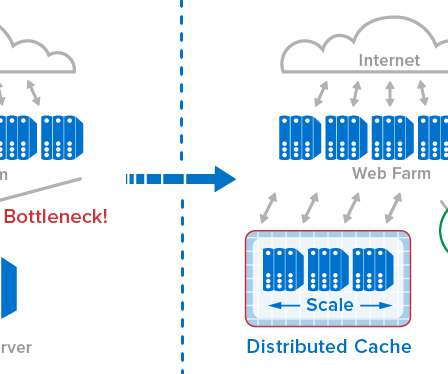

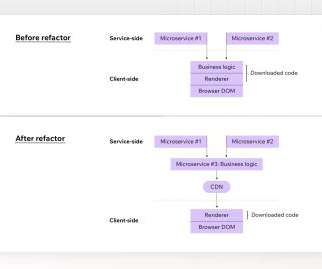

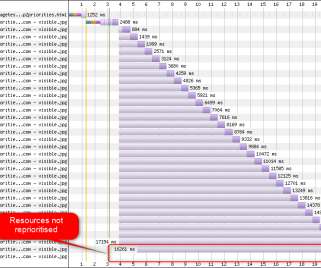

Concatenating our files on the server: Are we going to send many smaller files, or one monolithic file? What are the availability, configurability, and efficacy of each?

Caching them at the other end: How long should we cache files on a user’s device?

Cache

This is the easy one. main.af8a22.css
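A filename like main.af8a22.css carries a fingerprint: a short hash embedded in the name. The sketch below shows why that makes caching "the easy one". When the content changes, the URL changes, so the old copy can safely stay cached for a long time. It assumes the fingerprint is a truncated SHA-256 of the file's bytes. It also assumes a policy of caching fingerprinted files for a year with `immutable` and revalidating everything else with `no-cache`. The function names, hash length, regex, and header values are illustrative choices, not the article's.

```python
import hashlib
import re
from pathlib import Path

# Assumed policy values (illustrative): long-lived for fingerprinted assets,
# revalidate-on-every-use for everything else (e.g. HTML documents).
IMMUTABLE_CACHE_CONTROL = "max-age=31536000, immutable"
REVALIDATE_CACHE_CONTROL = "no-cache"

# Hypothetical convention: a fingerprint is 6+ lowercase hex chars just before
# the extension, as in main.af8a22.css.
FINGERPRINT_RE = re.compile(r"\.[0-9a-f]{6,}\.[A-Za-z0-9]+$")


def fingerprint_name(filename: str, content: bytes, length: int = 6) -> str:
    """Return a content-addressed name, e.g. main.css -> main.<hash>.css.

    The hash is derived from the file's bytes, so any edit yields a new URL
    and the previously cached copy is simply never requested again.
    """
    digest = hashlib.sha256(content).hexdigest()[:length]
    path = Path(filename)
    return f"{path.stem}.{digest}{path.suffix}"


def cache_control_for(filename: str) -> str:
    """Pick a Cache-Control value based on whether the name is fingerprinted."""
    if FINGERPRINT_RE.search(filename):
        return IMMUTABLE_CACHE_CONTROL
    return REVALIDATE_CACHE_CONTROL


if __name__ == "__main__":
    built = fingerprint_name("main.css", b"body { margin: 0; }")
    print(built, "->", cache_control_for(built))
    print("index.html ->", cache_control_for("index.html"))
```

Keying the cache lifetime off the filename, rather than a per-file config, means the server never has to guess how often a stylesheet changes: the build step renames it whenever it does.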
