Stuff The Internet Says On Scalability For June 26th, 2020

Hey, it's HighScalability time!

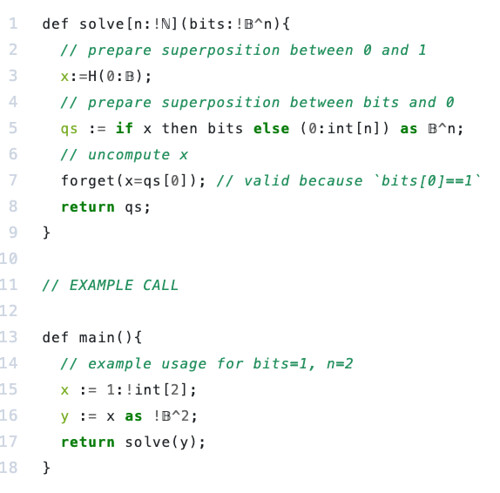

Line noise? Perl? Still uncertain? It's how you program a quantum computer. Silq.

Do you like this sort of Stuff? Without your support on Patreon this kind of Stuff can't happen. You are that important to the fate of the smart and thoughtful world.

Know someone who could benefit from understanding the cloud? Of course you do. I wrote Explain the Cloud Like I'm 10 just for them. On Amazon it has 115 mostly 5 star reviews. Here's a 100% gluten-free review:

Number Stuff:

- 5 billion: metrics per minute ingested by Twitter—1.5 petabytes.

- 2.3 Tbps: DDoS attack mitigated by AWS. UDP strikes again. Cloudflare said that 92% of the DDoS attacks it mitigated in Q1 2020 were under 10 Gbps and that 47% were even smaller, of under 500 Mbps.

- 900 million: one month of podcast download streams by the 10 world-leading podcasts publishers. Leader is iHeartRadio with 216.25m.

- 64%: of all extremist group joins are due to Facebook's recommendation tools.

- 20%: reduction in smartphone sales in Q1.

- $1.4bn: revenue last year for Pokémon Go, ranking 6th in free-to-play-games. Frotnite is #1. Weekly spending jumped 70% between March 9th and March 16th.

- +9%: amount people stayed home in California compared to baseline April 25th - Jun 6th based on Google Map data. Seems low. -37% retail & recreation. -18% workplaces.

- 2.8x: more calculations a second made by Japan's new ARM based supercomputer compared to IBM's now second place supercomputer. Cost a cool $1 billion.

- 50% - 80%: of Amazon's profits have been due to AWS. Maybe, sort of.

- 90%: of electricity is used to move data between the memory and processor. It can cost about 200 times more energy to access DRAM than to perform a computation.

- $500 million: TikTok's expected US revenue this year. $100 billion valuation in private markets.

- 117,000: IT jobs lost since March.

- 7M: Facebook attacking a small website with its army of crawlers. Peak of 300req/second. Eventually blocked by user-agent using cloudflare rules.

- 86%: respondents want to use Rust, while 67.1% want to use TypeScript, and 66.7% want to use Python.

- 15 years: Age of YouTube.

- 44.2 Terabits per second: achieved through the use of a micro-comb – an optical chip replacing 80 separate infrared lasers, capable of carrying communication signals.

- ~50%: Accounts Tweeting About Coronavirus Are Likely Bots

- 126 million: play Minecraft monthly. Sales have officially topped 200 million.

- 1.07%: Annualized Failure Rate of hard drives at Backblaze. Lowest ever. Keep in mind they have 129,959 drives.

- 95%: of Android apps contain dark patterns, which are effective at manipulating user behavior.

- 84 years: Olympus cameras are no more. An era has ended. Maybe the Titans will return?

Quotable Stuff:

- James Hamilton: ARM processors literally are everywhere. This vast vast vast volume of ARM processors is particularly important in our industry because nothing drives low cost economics better than volume. Nothing funds the R&D investment required to produce great server processors better than volume. Nothing builds dev tool ecosystems faster than volume. And it's volume that brings application developers.

- Kevin Kelly: Being enthusiastic is worth 25 IQ points.

- Twitter: Using a custom storage backend instead of traditional key value store reduced the overall cost by a factor of 10. This also reduced latency by a factor of five. More importantly, this enables us to add other features that require higher scale.

- @QuinnyPig: Non-competes are bad news for everyone. The incredibly talented folks at AWS deserve better, massive amounts of goodwill among AWS’s candidate pool are being torched by moves like this, and I fail to see any way that this situation benefits customers.

- @timallenwagner: A 'Think Big' for the AWS CDK team: Imagine the CDK not as a dev tool, but as an embeddable way to add build & control capabilities to every AWS-hosted application - a new, fundamental element of every well-architected SaaS and PaaS offering in the cloud.

- Air Force Lt. Gen. Jack Shanahan~ there are lots of autonomous systems in [the Department of Defense] today, but there are very few, and I would say really no significant, AI-enabled autonomous systems.. Coginot group-based authentication. 2. Request & response validation. 3. Web sockets. 4. Automated API documentation. 5. Integration with DynamoDB.

- @dbsmasher: What the hell is the point of ‘microservice’ if all instances connect to one database?? What are you smoking, HSBC??...This is a blast radius and risk assessment concern less a data store engine concern.

- Marc Andreessen: I should start by saying that none of what follows would be possible without the help of my amazing and indefatigable assistant Arsho Avetian. She's been my secret weapon for more than 20 years.

- Joab Jackson: Still, the industry seems to be moving towards Rust. Amazon Web Services uses it, in part for deploying the Lambda serverless runtime, as well as for some parts of EC2. Facebook has started using Rust, as has Apple, Google, Dropbox and Cloudflare.

- Sam Sydeffekt: Turns out that the level of computational optimization and sheer power of this incredible technology is meant to make fun of web developers, who struggle to maintain 15fps while scrolling a single-page application on a $2000 MacBook Pro, while enjoying 800ms delays typing the corresponding code into their Electron-based text editors.

- @ID_AA_Carmack: Average reading speed is < 250 words/minute. If only the actually read data was transmitted, one billion people reading for an hour would only be 75 TB of traffic and under $10k in costs.

- @ID_AA_Carmack: I can send an IP packet to Europe faster than I can send a pixel to the screen. How f’d up is that?

- Shubheksha: By combining exponential backoff and jittering, we introduce enough randomness such that all requests are not retried at the same time. They can be more evenly distributed across time to avoid overwhelming the already exhausted node. This gives it the chance to complete a few in-flight requests without being bombarded by new requests simultaneously.

- @colmmacc: I don't know who needs to know this, but a cryptographic seed can safely generate about 700M times its size in secure random output. Meanwhile a Sequoia seed can generate a Redwood tree that is about 2.5B times its volume.

- @conniechan: A fun use case is “Cloud clubbing” on TikTok⚡️. “The Shanghai nightclub TAXX earned $104,000 in tips paid through the app during a single Livestream that gained 71,000 views.”

- @wolfniya: We've installed six unlicensed copies of Oracle DB across your organisation's network. 10 bitcoin and we tell you where, else we'll tell Oracle's licensing department.

- djb_hackernews: I'm going to blow your mind...The "mastering" process of audio mixing historically starts with the input of "slave" tapes...

- Elizabeth MacBride: “Investors are a simple-state machine,” Michael Siebel, the accelerator’s CEO, told me. “They have simple motivations, and it’s very clear the kind of companies they want to see.”

- Li Jin: Beepi, which was a used car marketplace, had a massive chicken and egg problem in attracting sellers and buyers initially. To solve this, the founders went out and purchased used cars to seed the supply side. After a few months, they moved to the marketplace model.

- @dhh: Because it's an awesome way to wrangle the cloud. Never been so happy to be in the cloud as the past 2 weeks. We're blowing through our projections for the first year. K8S has made it trivial to scale a lot of it.

- @chrismunns: Haters gonna hate. I see a full app architecture where i don’t manage a single server or OS or container, have metrics/monitoring/loggging built in, and with deny by default/fine grained security throughout. Oh and it costs me pennies when not in use and scales automatically

- @adamdangelo: We are going fully remote first at Quora. Most of our employees have opted not to return to the office post-covid, I will not work out of the office, our leadership teams will not be located in the office, and all policies will orient around remote work. (1/2)

- @rts_rob: "We had to choose between two security approaches. One, containerization, is fast and resource efficient but doesn’t provide strong isolation between customers... Security is always our top priority at AWS, so we built Lambda using traditional VMs."

- dougmwne: The strategy they used speaks exactly to your point. The diseases were not "cured" in the sense that these people were brought back to a generic baseline. That very well may have required a complex series of edits that are well beyond what is currently possible. Instead, they focused on a clever workaround that was simpler to implement, reactivating fetal hemoglobin. That's how they were able to cure two different diseases with the same gene edit.

- @cote: ‘seven out of ten said their ai projects had generated little impact so far. Two-fifths of those with “significant investments” in ai had yet to report any benefits at all.’

- Stephen Wolfram: What we learned is that there is a layer of computational irreducibility. We already knew that within any computationally irreducible system, there are always pockets of computational irreducibility. What we realized is basically most of physics, as we know it, lives in a layer of computational reducibility that sits on top of the computational irreducibility that corresponds to the underlying stuff of the universe.

- Novel Marketing: The March payout to Kindle Select authors (authors who are exclusive with Kindle Unlimited), was $29 million paid in royalties—the highest Kindle Select Global Fund payout ever. It was the highest monthly increase ever recorded because it was a 6.6% increase over the prior month.May 11, 2020

- @ben11kehoe: What saddens me about this going around is the number of people I look up to who are have no curiosity to understand this. Serverless isn’t inherently creating complexity, but it does move it from your code into your infra. Let’s have the conversation about *that* trade off.

- @hichaelmart: Pretty amazing how far behind @nodejs has become at the Techempower Benchmarks. And it's not JavaScript – eg, for postgres updates, @es4x_ftw (JS on the JVM) is 5x faster. Saddens me that Node.js http perf has barely moved in 7 years

- @theburningmonk: I have spent quite a bit of time with AppSync on a few projects, and it's really grown on me, big time. So much of what's difficult with API GW comes out-of-the-box. Here are 5 things that really stood out (a long form blog post to follow soonish).

- George: If anything it's made me a bit more open to the assumption that you can probably explain away the wage gap with skill + tax irregularities + immigration alone. That is to say, you don't necessarily need any irrationality or unfairness in the market to explain the wage gap, but I haven't proven this, it's just an assumption I might test in the future.

- joshuaellinger: Interestingly, my main client (a very large company) just went the other direction and moved all its compute to a system that is Spark underneath running on Azure. They are trying to decommission some expensive TerraData instances. So far, it is a mixed bag -- it is a big step forward (for them) on anything that is 'batch-oriented' but 'interactive' performance is dismal. Price appears to be a big motivation. I always forget that most large enterprises run on exotic stuff with crazy service contracts that makes the cloud look cheap.

- Cindy Sridharan: It’s important to note here that the goal of testing in production isn’t to completely circumvent code under test from causing any impact to the production environment. Rather, the goal of testing in production is to be able to detect problems that cannot be surfaced in pre-production testing early enough that a fully blown outage can be prevented from happening.

- Rachel Brazil: Today, molecular biologists are beginning to understand that certain stretches of DNA can flip from the right- to the left-handed conformation as part of a dynamic code that controls how some RNA transcripts are edited. The hunt is now on to discover drugs that could target Z-DNA and the proteins that bind to it, in order to manipulate the expression of local genes.

- HANRAHAN: Remember when Ed said we needed to do 80 million micro-polygons? We [Pixar] had a budget of 400 floating point operations per pixel when we were building Render-Man. We were always trying to get more compute cycles. When GPUs came along, and they literally had thousands of core machines instead of one core machine, I immediately realized that this was going to be the key to the future.

- aquamesnil: Stateless serverless platforms like Lambda force a data shipping architecture which hinders any workflows that require state bigger than a webpage like in the included tweet or coordination between function. The functions are short-lived and not reusable, have a limited network bandwidth to S3, and lack P2P communication which does not fit the efficient distributed programming models we know of today. Emerging stateful serverless runtimes[1] have been shown to support even big data applications whilst keeping the scalability and multi-tenancy benefits of FaaS. Combined with scalable fast stores[2][3], I believe we have here the stateful serverless platforms of tomorrow.

- qeternity: We pay around $2k for one of our analytic clusters. Hundreds of cores, over 1tb of ram, many tb of nvme. Some days when the data science team are letting a model train (on a different gpu cluster) or doing something else, the cluster sits there with a load of zero. But it’s still an order of magnitude cheaper than anything else we’ve spec’d out.

- Leigh Bardugo: That was what magic did. It revealed the heart of who you’d been before life took away your belief in the possible.

- ibs: Timmermann demonstrated that a realistic extinction in the computer model is only possible, if Homo sapiens had significant advantages over Neanderthals in terms of exploiting existing food resources. Even though the model does not specify the details, possible reasons for the superiority of Homo sapiens could have been associated with better hunting techniques, stronger resistance to pathogens or higher level of fecundity.

- fxtentacle: One time, I wanted to process a lot of images stored on Amazon S3. So I used 3 Xeon quad-core nodes from my render farm together with a highly optimized C++ software. We peaked at 800mbit/s downstream before the entire S3 bucket went offline. Similarly, when I was doing molecular dynamics simulations, the initial state was 20 GB large, and so were the results. The issue with these computing workloads is usually IO, not the raw compute power. That's why Hadoop, for example, moves the calculations onto the storage nodes, if possible.

- Daniel Stolte: "These starless cores we looked at are several hundred thousand years away from the initial formation of a protostar or any planets," said Yancy Shirley, associate professor of astronomy, who co-authored the paper with lead author Samantha Scibelli, a third-year doctoral student in Shirley's research group. "This tells us that the basic organic chemistry needed for life is present in the raw gas prior to the formation of stars and planets."

- bob1029: Latency is one of the most important aspects of IO and is the ultimate resource underlying all of this. The lower your latency, the faster you can get the work done. When you shard your work in a latency domain measured in milliseconds-to-seconds, you have to operate with far different semantics than when you are working in a domain where a direct method call can be expected to return within nanoseconds-to-microseconds. We are talking 6 orders of magnitude or more difference in latency between local execution and an AWS Lambda. It is literally more than a million times faster to run a method that lives in warm L1 than it is to politely ask Amazon's computer to run the same method over the internet.

- CoolGuySteve: Systems programming in any language would benefit immensely from better hardware accelerated bounds checking. While Intel/AMD have put incredible effort into hyperthreading, virtualization, predictive loading, etc, page faults have more or less stayed the same since the NX bit was introduced. The world would be a lot safer if we had hardware features like cacheline faults, poison words, bounded MOV instructions, hardware ASLR, and auto-encrypted cachelines. Per-process hardware encrypted memory would also mitigate spectre and friends as reading another process's memory would become meaningless.

- Ryk Brown: Miss Bindi laughed. “Of course not. Anything involving human beings has the potential to become corrupted. That’s where our AIs come in.” “What a minute,” Nathan interrupted. “How’d we get on AIs?” “An AI has no ego, no hidden agenda. An AI simply analyzes an equation and deduces an answer.” “So you follow the decisions of your AIs?” “Not always,” Miss Bindi admitted. “But more often than not. At the very least, we always consult their analysis prior to deciding. That’s how we keep our own egos, and our own human frailties, from leading us into bad decisions.” “And this works?” “Not always,” Miss Bindi replied. “I mean, we are still human, and despite our best efforts, our own emotions still have great influence over us.”

- jupp0r: This [Lambda + EFS] is a horrible idea. This gives lambda functions shared mutable state to interfere with each other, with very brittle semantics compared to most databases (even terrible ones).

- Stefan Tilkov: Successful systems often end up with the worst architecture.

- @ben11kehoe: Actual harm caused by vendor lock-in with major public cloud providers, or such harm successfully avoided with a multi-cloud strategy, is like cow tipping. You can prove it doesn't happen because there's no videos of it on YouTube.

- Heidi Howard: Faster Paxos is similar to Paxos, except that a leader can choose to allow any node to can send values directly to a chosen majority quorum of nodes.

- bhickey: An old friend of mine is one of the authors on this. Their instruments generate a lot of data. I don't know if this is the case for their array in Australia, but when he was working in South Africa they had to carry hard drives out of the country because there wasn't enough fiber capacity out of the continent.

- @alexstamos: 2) None of the major players offer E2E by default (Google Meet, Microsoft Teams, Cisco WebEx, BlueJeans). WebEx has an E2E option for enterprise users only, and it requires you to run the PKI and won't work with outsiders. Any E2E shipping in Zoom will be groundbreaking.

- danharaj: Only hydrogen has an analytic solution. Even helium requires approximations because the electrons interact. Approximation requires an understanding of the structure of the wavefunction which gets increasingly complex as we add more particles to the system that interact and entangle with one another like electrons in an atom do. Even further, that's for single atoms that aren't interacting strongly with their environment. Here we're talking about an ensemble of nitrogen atoms interacting with each other in a somewhat extreme environment.

- cryptonector: The consensus regarding automobiles and touch interfaces is starting to form that they are just a bad idea. Physical switches, knobs, toggles, buttons -- these things can be activated using one's hands without needing to coordinate with sight, meaning, our eyes can stay on the road.

- adwww: They also struggle with the culture of software. From what I've heard from employees, software is looked down on by management and is not really something they 'get'. Things like app connectivity are after thoughts and not core to their products. A good chunk of what makes a Tesla unique is the radically different software and digital user experience. Dyson (the company) are not ready to embrace the fast moving culture that enables that.

- Hannu Rajaniemi: Tools always break. She should have remembered that.

- Time Wagner: And some of this was a learning curve for us at AWS too, right? In terms of understanding. Because if you thought of a Lambda as something that you hooked up to an S3 bucket, then maybe it didn't need a whole kind of development paradigm or CICD pipeline mechanism or application construction framework around it. And it quickly grew to be obviously so much more than that. And I think had we known how far and how fast it was going to go back in the day, we would have given more credence to the idea that we need our own ... we need a framework here and we need client side support for this. We also had a little bit of that AWS-itis where you're like look, if the service is great, people will do whatever they need to do. And we didn't realize, we didn't think hard enough about the fact that, hey, if that's hard or even if there just isn't a simple way of doing it, it's going to actually make the service difficult to consume because it's not the least common denominator plugin piece of infrastructure here. It's something that is very, very different in that regard.

- CBC Radio. Here's the magic — the next time you face a task that your whole body is screaming, "I don't want to, I don't feel like it," ask yourself: what's the next action? What's the next action I'd need to take to make some progress? Don't break the whole task down. That will be sure to overwhelm you. I think if most of us broke our whole task down, we'd realize that life's too short, you can never get it all done. Instead just say, "What's the next action?" and keep that action as small and as concrete as possible.

- Ian M. Banks: Strength in depth; redundancy; over-design. You know the Culture’s philosophy.

- Grady Booch: Architecture is whatever hurts when you get it wrong

- ignoramous: I know at least one team at Amazon that does runs map-reduce style jobs with Lambda, but now that Athena supports user-defined functions, I'd personally be inclined to use it instead over EFS or S3 + Lambda.

- Katie Mack: Vacuum decay is the idea that the universe that we are living in is not fully stable. We know that when the universe started, it was in this very hot, dense state. And we know that the laws of physics change with the ambient temperature, the ambient energy. We see that in particle colliders. We see that if you have a collision of high enough energies, then the laws of physics are a little bit different. And so, in the very early universe — the first tiny few microseconds or whatever it was — it went through a series of transitions. And after one of those transitions, we ended up in a universe that has — that has electromagnetism and the weak nuclear force and the strong nuclear force and gravity. It created the laws of physics that we see today.

- Matthew Lyon: Roberts called his program RD, for “read.” Everyone on the ARPANET loved it, and almost everyone came up with variations to RD—a tweak here and a pinch there. A cascade of new mail-handling programs based on the Tenex operating system flowed into the network: NRD, WRD, BANANARD (“banana” was programmer’s slang for “cool” or “hip”), HG, MAILSYS, XMAIL . . . and they kept coming. Pretty soon, the network’s main operators were beginning to sweat. They were like jugglers who had thrown too much up in the air. They needed more uniformity in these programs. Wasn’t anyone paying attention to the standards?

Useful Stuff:

- What stack would you use to build a modern email client that runs on multiple platforms?

- Here's what Basecamp went with for Hey:

- Vanilla Ruby on Rails on the backend, running on edge (edge means latest version off git)

- Stimulus

- Turbolinks,

- Trix + NEW MAGIC on the front end (NEW MAGIC is to be released so not sure what it is yet)

- MySQL for DB (Aurora, Vitess for sharding (how this works I don't know))

- Redis for short-lived data + caching

- ElasticSearch for indexing

- AWS/K8S/EKS

- The Majestic Monolith - an integrated system that collapses as many unnecessary conceptual models as possible. Eliminates as much needless abstraction as you can swing a hammer at. It’s a big fat no to distributing your system lest it truly prevents you from doing what really needs to be done.

- Shape Up as the methodology

- Basecamp as the issue tracker, etc

- GitHub for code reviews and pull requests

- Native for mobile (swift/kotlin), least for desktop (electron). All using the majestic monolith and the same HTML views in the center

- Postfix mail server + ActionMailbox. Own stack for mail delivery.

- imageproxy - caching image proxy server written in go

- ALB for load balancing.

- Resque for background jobs.

- Development time: 2 years.

- HEY is already doing 30,000 web requests per minute.

- @DHH: React is so 2019. HTML + minimum JS is 2020

- Went with MySQL because reliable and relational databases are good.

- HEY is 99% K8s (EKS).

- Currently have a second region in a standby state and it will make it active/active soon.

- Using k8s to avoid lock-in. They are happy how it has helped them scale to handle increased demand. @t3rabytes: Much more than lock-in, I answered this elsewhere but we have pretended over deploying, logging, monitoring, cluster management and access, Kubernetes actually has a CLI that’s useful, and we’ve found Kubernetes to be (gasp) less of a black box than ECS...Config portability (HEY didn’t start out on Kubernetes running on AWS ;)), preferences for how we deploy, log, monitor, and manage cluster access, a CLI, and (surprisingly) it’s less of a black box than ECS.

- @DHH: Yes, we have a plan to be big tech free on infrastructure in five years. But there aren't currently any suitable non-big tech clouds. We're also building out our on-prem work, but I'm very happy we are cloud for the past 2 weeks.

- @DHH: On-prem is MASSIVELY cheaper than cloud for compute and big dbs over the long run. But we would have been toast launching in Orem with HEY. Never in a million years would I have predicted this take off.

- Users are multi-tenant in the same DB.

- HTML over websocket.

- @DHH: GCP nearly burnt Basecamp to the ground. Twice shy and all that.

- MySQL is not running on k8s. They are using Aurora.

- @t3rabytes: Cloud becomes very nice when you plan for X number of users and then the world bursts open and now you're planning for 4X number of users and scaling looks like a few YAML updates and waiting a few minutes

- @t3rabytes: 91% of the instances powering the app are spot instances. I've pushed hard since the beginning on using them for as much as possible. It hasn't all been smooth sailing with them though, we've had to deal with a lot of termination behavior quirks.

- whoisjuan: They are using Turbolinks. They generate everything server-side and then Turbolinks calculates the delta between the existing loaded template in the client and the incoming template generated in the server and injects only the changes. This has a lot of advantages, mainly that you don't need to care about state on the client side and you're always synced with the real estate on the backend.

- Also, A few sneak peeks into Hey.com technology (I - Intro)

- Here's what Basecamp went with for Hey:

- So real it feels like tomorrow. Sci-Fi Short Film: "Hashtag". Highly entertaining.

- Is AWS just yucky? Why I think GCP is better than AWS.

- AWS charges substantially more for their services than GCP does, but most people ignore the real high cost of using AWS, which is; expertise, time and manpower.

- It’s not that AWS is harder to use than GCP, it’s that it is needlessly hard; a disjointed, sprawl of infrastructure primitives with poor cohesion between them. A challenge is nice, a confusing mess is not, and the problem with AWS is that a large part of your working hours will be spent untangling their documentation and weeding through features and products to find what you want, rather than focusing on cool interesting challenges.

- One of the first differences that strikes you when going from GCP to AWS is accounts vs projects

- Why do we have to do so much work to use AWS? Why can’t AWS abstract away this pain away from you in the way that Google has done?

- The AWS interface looks like it was designed by a lonesome alien living in an asteroid who once saw a documentary about humans clicking with a mouse. It is confusing, counterintuitive, messy and extremely overcrowded. GCP’s user interface is on the other hand very intuitive to use and whenever you want to provision anything you are given sane defaults so you can deploy anything in a couple of clicks

- You can forgive the documentation in AWS being a nightmare to navigate for being a mere reflection of the confusing mess that is trying to describe.

- If your intent is to use Kubernetes, don’t even bother with AWS, their implementation is so bad I can’t even comprehend how they have the gall to call it managed, especially when compared with GCP

- GCP on the other hand has fewer products but the ones they have (at least in my experience) feel more complete and well integrated with the rest of the ecosystem

- I felt that performance was almost always better in GCP, for example copying from instances to buckets in GCP is INSANELY fast

- In April the Khan Academy served 30 million learners. How did Khan Academy Successfully Handled 2.5x Traffic in a Week?

- We scaled readily in large part because of our architecture and a rigorous practice of choosing external services carefully and using them properly.

- We use Google Cloud, including AppEngine, Datastore, and Memcache, and Fastly CDN, and they were the backbone of the serverless and caching strategy that’s key to our scalability.

- Using GCP App Engine, a fully managed environment, means we can scale very easily with virtually no effort.

- We similarly use Datastore which scales out storage and access capacity automatically in much the same way App Engine scales out web server instances.

- Fastly CDN allows us to cache all static data and minimize server trips. Huge for scalability, it also helps us optimize hosting resources, for which costs grow linearly with usage in our App Engine serverless model.

- We also extensively cache common queries, user preferences, and session data, and leverage this to speed up data fetching performance. We use Memcache liberally, in addition to exercising other key best practices around Datastore to ensure quick response times.

- We noticed some slowdowns in the first few days and found that deploys were causing those hits. At our request, Google increased our Memcache capacity, and within a week we were comfortable returning to our normal continuous deployment pattern

- dangoor:

- We offload as much as we can to the CDN, but (as I note elsewhere in the comments), there's a lot of dynamic work going on on our site. Had we been using PostgreSQL for all of our data, for example, we would have been scrambling to ramp it up.

- I forget how the numbers work out precisely, but you are absolutely correct that we spend more using App Engine vs. just spinning up Compute Engine instances (the Google equivalent of EC2). The tradeoff is, indeed, that we spend less engineering/ops time and also have less after hours work as a result. I do think Fastly is a little different in the equation. Fastly makes a big difference in user experience by returning a lot of data very quickly and with low latency (because it's replicated to their many node locations).

- The CDN and YouTube are important, but Khan Academy is a lot more dynamic than you might think. We need to keep track of people's progress on the site as they watch videos, read articles, and do exercises. We have dashboards for teachers, parents, and school districts (in addition to the presenting learners with their own progress). We integrate with test providers (like the College Board for SAT). So it's definitely the case that YouTube offloads a lot of bandwidth for the videos, but we do have a lot of requests to the site for all of these other features.

- We have a number of different caches to deal with different data access patterns. In Python, we have a library of function decorators to make different sorts of caching easy. Those decorators are able to generate cache keys based on function names and parameters.

- When kids return to school in a COVID world they are being advised (in my area of the Bay Area) to organize around cohorts—groups that stick together and don't interact. Summer camps are using the same strategy too. Nobody intermingles or changes places. The cohort sticks together and only together. We have this same strategy in software, it's called the bulkhead pattern. Its purpose is to decrease the blast radius of an event. If someone is sick and they could have potentially interacted with the whole student body then everyone has to be checked. Using cohorts only the cohort has to be checked. Who said software wasn't real life?

- Design decisions that made sense under light loads are now suddenly technical debt. Six Rules of Thumb for Scaling Software Architectures:

- Cost and scalability are indelibly linked. A core principle of scaling a system is being able to easily add new processing resources to handle the increased load. Cost and scale go together. Your design decisions for scalability inevitably affect your deployment costs.

- Your system has a bottleneck. Somewhere! Software systems comprise multiple dependent processing elements, or microservices. Inevitably, as you grow capacity in some microservices, you swamp capacity in others. Any shared resource in your system is potentially a bottleneck that will limit capacity. Guard against sudden crashes when bottlenecks are exposed and be able to quickly deploy more capacity.

- Slow services are more evil than failed services. When one service is overwhelmed and essentially stalls due to thrashing or resource exhaustion, requesting services become unresponsive to their clients, who also become stalled. The resulting effect is known as a cascading failure — a slow microservice causes requests to build up along the request processing path until suddenly the whole system fails. Architecture patterns like Circuit Breakers and Bulkheads are safeguards against cascading failures.

- The data tier is the hardest to scale. Changing your logical and physical data model to scale your query processing capability is rarely a smooth and simple process. It’s one to want to confront as infrequently as possible.

- Cache! Cache! And Cache Some More! One way to reduce the load on your database is to avoid accessing it whenever possible. This is where caching comes in. If you can handle a large percentage of read requests from your cache, then you buy extra capacity at your databases as they are not involved in handling most requests.

- Monitoring is fundamental for scalable systems. As your system scales, you need to understand the relationships between application behaviors. A simple example is how your database writes perform as the volume of concurrent write requests grows. You also want to know when a circuit breaker trips in a microservice due to increasing downstream latencies, when a load balancer starts spawning new instances, or messages are remaining in a queue for longer than a specified threshold.

- DHH and Apple arrived at a face saving compromise. Apple, HEY, and the Path Forward. It is a ridiculous compromise. Hey now has a two week free tier with a random expiring email address. The email address isn't even portable. Useless. Is that a great customer experience? No. It's an obvious hack that does nothing to settle the underlying issues. But hey, Hey got approved so all the faux Sturm und Drang got the job done. How about for everyone else?

- From his secret floating lair controlled by and army of ARM chips, James Hamilton announces the new high performance M6g, C6g, and R6g Instances Powered by AWS Graviton2.

- You think AWS as a software company, but the secret sauce is in their custom hardware. Nitro makes it relatively easy for AWS to integrate in new hardware platforms.

- Graviton2 is based on 64-bit Neoverse cores with ~30B transistors on 7nm technology. It has 7x better performance over the v1 part. 4x increase in core count. 5x faster memory. 2x the cache.

- The new M6g, C6g, and R6g instances deliver up to 40% better performance over comparable x86-based instances.

- PostgreSql on M6g is 46% faster and has 56% better response time than on M5. MySQL is 28% faster with 47% better response time. Honycomb.io when compared to C5 ran 30% fewer instance whith each instance costing 10% less for an all-in savings of 47% for 15 hours of development work. Insiders are moving to M6g at scale.

- James says a new era has begun. Maybe the new era is an inflection point? When does hardware become cheap enough that paying for managed services is the suckers play?

- Fun and wide ranging interview. If you're interested in how Lambda got its start and where it should be going—listen to The Past, Present, and Future of Serverless with Tim Wagner. Serverless needs to support disaster recovery so enterprises can adopt it for certain classes of problems. Lambda, like Cloudflare, must work at the edge as a first class citizen. Lambda needs better CI/CD itegration. AWS needs to settle on a better more powerful tool chain rather than have a bunch of systems that do mostly the same thing in different ways. Lambda needs a built-in idempotency mechanism. Lambda needs a built-in circuit breaker mechanism so downstream failures can feed back. Lambda needs to support building stateful applications. Lambda needs to bill at the millisecond granularity so applications can be composed together at much finer grain level. Lambda should integrate with EFS so the ultimate compute infrastructure can take advantage of the ultimate storage infrastructure (He got his wish!). Lambda should be able to be used for building other infrastructures. For that Lambda must be able to support networking. Lambda functions must be able to talk to other Lambda functions efficiently so distributed algorithms could be built on top of Lambda.

- Even more fun is this. Bobby Allen, CTO at CloudGenera, speaks his mind like few CTOs are willing to do. Pure gold. Day Two Cloud 052: Moving Back Home From The Cloud. Some highlights:

- Building your own datacenter can mean you may be missing conversations with your customers because you are trying to save a few bucks on AWS.

- The average CIO is taking satisfaction in the chicken they’ve raised instead of the dish they put on the table (listen and that will make sense).

- Are you freeing yourself up to have more time to ask what matters to the business? How we can compete better in the market?

- We're focussing on the wrong things. That's why the cloud is so attractive.

- There are almost always a tipping point between between Iaas and PaaS where it becomes cheaper to run itself. Sometimes PaaS is 10x IaaS.

- AWS is running loss leaders on the low end. Because we trusted them on the low end we're drinking the cool-aid at the high end.

- Murat shares his surefire theory driven system for learning distributed systems in 21 days. Learning about distributed systems: where to start? It starts lite, with predicate logic, and gets easier with TLA+. After that is the almost trivial introduction to impossibility results. 21 days no problem. Finally, we've reached consensus.

- Your database looking a little tired lately? Spice it up with these exciting alternatives:

- Recent database technology that should be on your radar (part 1):

- TileDB is a DB built around multi-dimensional arrays that enables you to easily work with types that aren’t a great fit for existing RDBMS systems

- Materialize touts itself on its website as “the first true SQL streaming database” and that actually may not be overblown!

- Prisma isn’t a database but rather a set of tools that seek to abstract away your database as much as possible.

- petercooper has even more for you:

- QuestDB – https://questdb.io/ – is a performance-focused, open-source time-series database that uses SQL. It makes heavy use of SIMD and vectorization for the performance end of things.

- GridDB - https://griddb.net/en/ - is an in-memory NoSQL time-series database out of Toshiba that was boasting doing 5m writes per second and 60m reads per second on a 20 node cluster recently.

- MeiliSearch - https://github.com/meilisearch/MeiliSearch – not exactly a database but basically an Elastic-esque search server written in Rust. Seems to have really taken off.

- Dolt – https://github.com/liquidata-inc/dolt – bills itself as a 'Git for data'. It's relational, speaks SQL, but has version control on everything.

- TerminusDB, KVRocks, and ImmuDB also get honorable mentions.

- Recent database technology that should be on your radar (part 1):

- How the biggest consumer apps got their first 1,000 users: Go where your target users are, offline; Go where your target users are, online; Invite your friends; Create FOMO in order to drive word-of-mouth; Leverage influencers; Get press; Build a community pre-launch.

- SpaceX is successful so of course people want to know what makes it not explode. Software Engineering Within SpaceX. Automatic docking software for the ATV that delivers supply to ISS is written using C code and verified with Astree...They talk about the triple redundancy system and how SpaceX uses the Actor-Judge system. In short there are 3 dual core ARM processors running on custom board...the actual graphical display application uses Chromium/JS. The rest of the system is all C++. The display code has 100% test coverage, down to validation of graphical output (for example if you have a progress bar and you set it to X% the tests verify that it is actually drawn correctly).

- Meta-programming Lambda functions with Tom Wallace.

- Clever. Meta-programming Lambda is the idea that from within a Lambda you can generate custom code for scenarios, something like html templates for code. You can even redeploy Lambda code from within Lambda. The given edge example wasn't clear to me. You can imagine automatically generating bespoke code based on memory and latency needs.

- If doesn't cost you anything to have a bespoke Lambda function per user, for example. The code would implement the configuration/requirements for that customer. You only pay when they run, so why not have custom versions? Of course, you have to route correctly.

- Use Lambda all the way down to implement map reduce for IoT data. IoT data is stream based an you need to reconstitute the steam to recover state, so a SQL based Athena approach would not work.

- A master lambda starts 100s of other lambda to do the work and they report back when done. Step Functions don't ramp up parallelism fast enough.

- With Lambda you can spin up thousands of Lambdas quickly, but not as quickly as they say you can. You need to add in rety logic to start Lambdas at scale.

- You can answer questions very quickly when you start 3000 Lambdas and can read 50MB/s from S3.

- Lambdas for queries run on a separate account so their use doesn't interfere with other users of Lambda. Querying at scale can starve the Lambda pool for other users and functions would start failing intermittently. It doesn't matter if queries exhaust the Lambda pool becaus they can just retry.

- A general pattern is to use separate accounts to get manage limits.

- A good Lambda feature would be a spot scheduler for ondemand work that could optimize for lower costs, green energy use, etc. Your Lambda would be executed whenever.

- Also, Meta-programming in lambda - Tom Wallace

- On a somewhat related note is Optimize your AWS Lambda cost using multiprocessing in nodeJS. The idea is to fork new Lambda processes to increase parallelism. Something I used to do on System V Unix, but never even considered for Lambda. There's a lot of smart people out there.

- Find the S3 lifecycle rules perplexing? I did. Good explanation, but waiting on the how-to. Save $$$ on Your S3 Bill with S3 Lifecycles!

- The Serverless Supercomputer. The rise of embarrassingly parallel serverless compute.

- Imagine a world where nearly everything becomes MUCH faster.

- Using this on-demand scale from serverless FAAS providers, jobs can be spun up in as many workers as required. This would be equivalent to summoning thousands of threads, running in parallel with an instant startup. Much like AWS s3 is an “unlimited file storage service”, AWS Lambda is effectively leveraged here as “unlimited on-demand compute threads”.

- Any processes that can be chunked up and processed in pieces in a distributed fashion is suitable for this massive parallel runtime.

- CC lambda can Process 3.5 billion webpages or 198TB of uncompressed data in ~3 hours.

- Also, Stanford Seminar - Tiny functions for codecs, compilation, and (maybe) soon everything

- Garbage collection driven programming. How Rust Lets Us Monitor 30k API calls/min:

- We hosted everything on AWS Fargate, a serverless management solution for Elastic Container Service (ECS), and set it to autoscale after 4000 req/min. Everything was great! Then, the invoice came

- Instead of sending the logs to CloudWatch, we would use Kinesis Firehose...With this change, daily costs would drop to about 0.6% of what they were before

- In some instances, the pause time breached 4 seconds (as shown on the left), with up to 400 pauses per minute (as shown on the right) across our instances. After some more research, we appeared to be another victim of a memory leak in the AWS Javascript SDK

- The solution was to move to a language with better memory management and no garbage collection. Enter Rust

- What we found was that with fewer—and smaller—servers, we are able to process even more data without any of the earlier issues.

- With the Rust service implementation, the latency dropped to below 90ms, even at its highest peak, keeping the average response time below 40ms...The original Node.js application used about 1.5GB of memory at any given time, while the CPUs ran at around 150% load. The new Rust service used about 100MB of memory and only 2.5% of CPU load.

- NimConf 2020 videos are now available. No, not this Nim.

- #10: Serverless at DAZN with Daniel Wright and Sara Gerion

- Serverless first. Serverless is easy to use and operationally easier. You don't have to manage clusters. You get resilience for free. It really shines when are using an event based model.

- Most systems are a hybrid. Use EC2 where appropriate.

- Spike loads, like when a lot of people sign up all at once, it's not clear of Lambda can scale up fast enough to handle initial burst. You need to scale up in advance or scale up faster.

- There's a 3,000 concurrency AWS Lambda limit per region. But if you have a spike of 1.2 million concurrent users before an event an autoscaling group you can increase before an event makes more sense. Sometimes you want a button that says scale up to as much as you need. Lambda doesn't have that.

- Use active-active to minimize downtime. DynamoDB APIGateway, Lambda is easier. Anything not in a critical path is active-passive. Everything else is deployed in 4 regions. They make big use of DynamoDB global tables.

- When using global tables you have to make sure when using streams to only process changes in one region or you'll duplicate that operation in every region.

- Evolving a system makes you more vulnerable. Just cut over to the new system without backward compatibility because the greatest risk is always in the backward compatible portion of the system. Half the time we humans are sick is because our body over reacts to a threat that's no longer a threat in the modern world. And we've seen the same with SSL downgrade attacks and now with Bluetooth. The Bluetooth standard includes both a legacy authentication procedure and a secure authentication procedure. It's the legacy version that was attacked. Bluetooth devices supporting BR/EDR are vulnerable to impersonation attack. These aren't bugs. They are how the system was designed.

- Building a Next-Generation of Serverless. Lift and shift is no cheaper when you come to the cloud, but after you break up your application and convert it to serverless the saving can be as much as 90 percent. Come for the agility—stay for the savings.

- Dan Luu wisdom. A simple way to get more value from metrics: I think writing about systems like this, that are just boring work is really underrated. A disproportionate number of posts and talks I read are about systems using hot technologies. I don't have anything against hot new technologies, but a lot of useful work comes from plugging boring technologies together and doing the obvious thing. Since posts and talks about boring work are relatively rare, I think writing up something like this is more useful than it has any right to be. For example, a couple years ago, at a local meetup that Matt Singer organizes for companies in our size class to discuss infrastructure (basically, companies that are smaller than FB/Amazon/Google) I asked if anyone was doing something similar to what we'd just done. No one who was there was (or not who'd admit to it, anyway), and engineers from two different companies expressed shock that we could store so much data, and not just the average per time period, but some histogram information as well. This work is too straightforward and obvious to be novel, I'm sure people have built analogous systems in many places. It's literally just storing metrics data on HDFS (or, if you prefer a more general term, a data lake) indefinitely in a format that allows interactive queries. If you do the math on the cost of metrics data storage for a project like this in a company in our size class, the storage cost is basically a rounding error. We've shipped individual diffs that easily pay for the storage cost for decades. I don't think there's any reason storing a few years or even a decade worth of metrics should be shocking when people deploy analytics and observability tools that cost much more all the time. But it turns out this was surprising, in part because people don't write up work this boring.

- YouTube's origin story. I especially like the part where Thanos uses the Ininity Guantlet to build new infrastructure.

- 'We had no idea how to do it': YouTube's founders, investors, and first employees tell the chaotic inside story of how it rose from failed dating site to $1.65 billion video behemoth.

- YouTube's cofounders originally thought "a generic platform where we could host all the videos on the internet" was too bold of an idea. So for about a week, the site was a dating platform — until it wasn't.

- By the summer of 2005, venture capital firm Sequoia Capital had taken notice. Sequoia invested $3.5 million in YouTube's Series A round that September and another $8 million a few months later.

- Steve had a strange way of working. Basically, him and the engineers would work at night. They would show up in late afternoon and work most of the night. When we would show up at 9 a.m. for board meetings, there were very few people there. If you showed up at 7 p.m., all the engineers were there sharing a pizza dinner. It was a fun place.

- We had shots before every software push.

- By the summer of 2006, YouTube had experienced two massive viral hits — the Saturday Night Live skit "Lazy Sunday" and a Nike ad featuring soccer star Ronaldinho. It was getting so big, so fast, YouTube was having trouble keeping its infrastructure up and running.

- Just about every 48 hours, some emergency came up. When you have a million people watching a video a day, you can't just manually log each view anymore.

- The day that I joined we had just hit 40 million views [per day], which was a big milestone. The next month, by July, it was 100 million views. The thing just kept growing.

- What's hard to appreciate is how quickly we had grown beyond our capacity to manage the business. Our global data infrastructure was cracking, our bank account was dwindling, angry music company executives demanding hundreds of millions of dollars, partners banging doors down trying to get our attention.

- Akatsuki: Building Stable and Scalable Large Scale Game Servers with Amazon ECS. Spikes are common in mobile games. During peak there may be 1 million plus requests per minute requiring over 700 containers to handle the load. It takes two minutes to scale after load. Game moves are calculated on the server. A request comes in from the mobile device, over ALB to ECS. Player data is stored in Aurora. Data is cached in ElastiCache. Game logic is calculated by an app in ECS. When a startup event is detected by CloutWatch a Lambda function autoscales the system. They run 5 different kinds of stress tests to stabalize the system. Client. Overload. Scaling. Failover fail parts. Long-Run runs for hours. System remains stable. Considering a system that automatically run stress tests.

- Jepsen is back to torturing poor defenseless databases. So much carnage. Redis-Raft 1b3fbf6. The testing is the the think of course, but the writeups themselves are instructional. It's like being a doctor dissecting a cadaver. But unlike the cadaver, Redis iterated and in the end the patient lived to failover another day. Something to note: we were surprised to discover that Redis Enterprise’s claim of “full ACID compliance” only applied to specially-configured non-redundant deployments, rather than replicated clusters. There's more. PostgreSQL 12.3: Users should be aware that PostgreSQL’s “repeatable read” is in fact snapshot isolation. MongoDB 4.2.6: We continue to recommend that users of MongoDB consider their read and write concerns carefully, both for single-document and transactional operations.

- WordPress Hosting Performance Benchmarks (2020). For not a lot of money you can get some well performing services.

- If you have an unhealthy relationship with incident reports then you may like the The Post-Incident Review.

- Noise is the salt of physics. No salt—bland and uninteresting. Add a little and everything tastes better. Add too much and it's inedible. Adding noise for completely secure communication: The new protocol overcomes this hurdle with a trick – the researchers add artificial noise to the actual information about the crypto key. Even if many of the information units are undetected, an “eavesdropper” receives so little real information about the crypto key that the security of the protocol remains guaranteed.

- It's fun to see how quality work was organized in the past. Fabergé: A Life of Its Own. They had a 30% failure rate. Work masters could sometimes form their own a company under Fabergé and put their own mark on the pieces produced.

- Design an entire system for the place in which it will actually function, not your cube. Google’s medical AI was super accurate in a lab. Real life was a different story: With nurses scanning dozens of patients an hour and often taking the photos in poor lighting conditions, more than a fifth of the images were rejected...Because the system had to upload images to the cloud for processing, poor internet connections in several clinics also caused delays. “Patients like the instant results, but the internet is slow and patients then complain,” said one nurse. “They’ve been waiting here since 6 a.m., and for the first two hours we could only screen 10 patients.”

- Ever want someone to go through a year's worth of podcasts and deliver the highlights? Here you go. Episode #53: A Year of Serverless Chats. Some of the ideas that landed for me:

- Storage first (Episode #40: Eric Johnson and Alan Tan). This is one of my favs. Get your data stored first—before processing it, so it doesn't get lost somewhere in your buggy code pipeline. While data is in transition it's not safe. And you can't see data in SQS, but you can in DynamoDB and S3. And you can generate events from those sources to drive your application state machine. And if there's a problem the data is safe, so you can redo whatever you need to do.

- Pre-render first (Episode #50: Guillermo Rauch). This one makes you think. It sort of fits with the metaprogramming ideas discussed earlier. The idea: the vast majority of pages that you visit every day on the internet can be computed once and then globally shared and distributed...What we found is that front end is really powered by this set of statically computed pages that get downloaded very, very quickly to the device, some of which have data in line with them. This is where the leap of performance and availability just becomes really massive, because you're not going to a server every time you go to your news, your ecommerce, your whatever...I'm going to think about the customer first. I'm going to think about building my back end very, very low in my priority list.

- Integration tests. Episode #47: Mike Roberts: What integration tests do is validate those assumptions, they validate how you expect your code to run within the larger environment and the larger platform. If we want to test the code, that's when you write a unit test or a functional test.

- DynamoDB. Episodes #34 & #35: Rick Houlihan: There's two types of applications out there. There's no OLTP or online transaction processing application which is really built using well-defined access patterns. You're going to have a limited number of queries that are going to execute against the data, they're going to execute very frequently and we're not going to expect to see any change or we're going to see limited change in this collection of queries over time.

- NoSQL. Episode #36: Suphatra Rufo: You probably didn't know that Sears, Kmart, Barneys New York, Party City, I can name a dozen more retailers that just last year, either completely closed down or had to significantly reduce their number of stores, just last year. It's because retail isn't done the same way anymore. Those spikes are now a common part of life and people are having a hard time figuring out how to handle it. Tesco, which is the largest grocery chain store, I'm not sure in America or in the world, I'll have to check that... But they, in 2014, crashed on Black Friday because they couldn't handle the spike in the demands they were getting online. So they lost an entire day of business on Black Friday because they couldn't handle that workload. And then the year later, they went on a NoSQL database and now they can handle that load. I think what people are seeing is that normal day to day business operations are fundamentally different... A decade after NoSQL databases are invented, it's really not a new invention anymore. Now this is just the way of business.

- Serverless future. Episode #7: Taylor Otwell: Yeah, I think the next five years will be huge for serverless, I really do. I think it is the future, because what's the alternative, really? Like more complexity, more configuration files, more weird container orchestration stuff?

Soft Stuff:

- StanfordSNR/gg: a framework and a set of command-line tools that helps people execute everyday applications—e.g., software compilation, unit tests, video encoding, or object recognition—using thousands of parallel threads on a cloud functions service.

- lsds/faasm: a high-performance stateful serverless runtime. Faasm provides multi-tenant isolation, yet allows functions to share regions of memory. These shared memory regions give low-latency concurrent access to data, and are synchronised globally to support large-scale parallelism.

- hydro-project/anna: a low-latency, autoscaling key-value store developed in the RISE Lab at UC Berkeley. The core design goal for Anna is to avoid expensive locking and lock-free atomic instructions, which have recently been shown to be extremely inefficient.

- stanford-mast/pocket: a storage system designed for ephemeral data sharing. Pocket provides fast, distributed and elastic storage for data with low durability requirements. Pocket offers a serverless abstraction to storage, meaning users do not manually configure or manage storage servers. A key use-case for Pocket is ephemeral data sharing in serverless analytics, when serverless tasks need to exchange intermediate data between execution stages.

- hse-project/hse: embeddable key-value store designed for SSDs based on NAND flash or persistent memory. HSE optimizes performance and endurance by orchestrating data placement across DRAM and multiple classes of SSDs or other solid-state storage.

Pub Stuff

- CIRCLLHIST (GitHub): The circllhist histogram is a fast and memory efficient data structure for summarizing large numbers of latency measurements. It is particularly suited for applications in IT infrastructure monitoring, and provides nano-second data insertion, full mergeability, accurate approximation of quantiles with a-priori bounds on the relative error.

- Technical Report: HybridTime - Accessible Global Consistency with High Clock Uncertainty: In this paper we introduce HybridTime, a hybrid between physical and logical clocks, that can be used to implement a globally consistency database. Unlike Spanner, HybridTime does not need to wait out time error bounds for most transactions, and thus may be used with common time-synchronization systems. We have implemented both HybridTime and commit-wait in the same system and evaluate experimentally how HybridTime compares to Spanner’s commit-wait in a series of practical scenarios, showing it can perform up to 1 order of magnitude better in latency

- Dynamic and scalable DNA-based information storage (article, GitHub): As DNA-based information storage systems approach practical implementation44,45, scalable molecular advances are needed to dynamically access information from them. DORIS represents a proof of principle framework for how inclusion of a few simple innovations can fundamentally shift the physical and encoding architectures of a system. In this case, ss-dsDNA strands drive multiple powerful capabilities for DNA storage: (1) it provides a physical handle for files and allows files to be accessed isothermally; (2) it increases the theoretical information density and capacity of DNA storage by inhibiting non-specific binding within data payloads and reducing the stringency and overhead of encoding; (3) it eliminates intractable computational challenges associated with designing orthogonal sets of address sequences; (4) it enables repeatable file access via in vitro transcription; (5) it provides control of relative strand abundances; and (6) it makes possible in-storage file operations.

- From Laptop to Lambda: Outsourcing Everyday Jobs to Thousands of Transient Functional Containers: We present gg, a framework and a set of command-line tools that helps people execute everyday applications—e.g., software compilation, unit tests, video encoding, or object recognition—using thousands of parallel threads on a cloud functions service to achieve near-interactive completion times. We ported several latency-sensitive applications to run on gg and evaluated its performance. In the best case, a distributed compiler built on gg outperformed a conventional tool (icecc) by 2–5×, without requiring a warm cluster running continuously. In the worst case, gg was within 20% of the hand-tuned performance of an existing tool for video encoding (ExCamera).

- Faasm: Lightweight Isolation for Efficient Stateful Serverless Computing: We introduce Faaslets, a new isolation abstraction for high-performance serverless computing. Faaslets isolate the memory of executed functions using software-fault isolation (SFI), as provided by WebAssembly, while allowing memory regions to be shared between functions in the same address space. Faaslets can thus avoid expensive data movement when functions are co-located on the same machine. Our runtime for Faaslets, Faasm, isolates other resources, e.g. CPU and network, using standard Linux cgroups, and provides a low-level POSIX host interface for networking, file system access and dynamic loading. To reduce initialisation times, Faasm restores Faaslets from already-initialised snapshots. We compare Faasm to a standard container-based platform and show that, when training a machine learning model, it achieves a 2x speed-up with 10x less memory; for serving machine learning inference, Faasm doubles the throughput and reduces tail latency by 90%.

- Dive into Deep Learning: An interactive deep learning book with code, math, and discussions. Provides both NumPy/MXNet and PyTorch implementations.

Music Stuff

- Entrancing. Ólafur Arnalds - Undan hulu (Live at Sydney Opera House). This is the same guy who programs the dueling pianos.