A Twitter discussion on build times and source-file sizes got me interested in doing some analysis of Chromium build times. I had some ideas about what I would find (lots of small source files causing much of the build time) but I inevitably found some other quirks as well, and I’m landing some improvements. I learned how to use d3.js to create pretty pictures and animations, and I have some great new tools.

As always, this blog is mine and I do not speak for Google. These are my opinions. I am grateful to my many brilliant coworkers for creating everything which made this possible.

The Chromium build tools make it fairly easy to do these investigations (much easier than my last build-times post), and since it’s open source anybody can replicate them. My test builds took up to 6.2 hours on my four-core laptop but I only had to do that a few times and could then just analyze the results.

I did my tests on an October 2019 version of Chromium’s code (necessary patches described here) because that gave me the most flexibility about which build options to use. I used a 32-bit, debug, component (multi-DLL, for faster linking) build, with NACL disabled, full debug information, with reduced debug information for blink (Chromium’s rendering engine) as my base build. In other words, these are the build arguments I used:

target_cpu = "x86" # 32-bit build, maybe faster?

is_debug = true # Extra checks, minimal optimizations

is_component_build = true # Many different DLLs, default with debug

enable_nacl = false # Disable NACL

symbol_level = 2 # Full debug information, default on Windows

blink_symbol_level = 1 # Reduced symbol information for blink

This is a good set of options for developing Chromium as it gives full debuggability together with a fast turnaround on incremental builds (when just a few source files are modified between builds).

Enough talk, let’s see some data

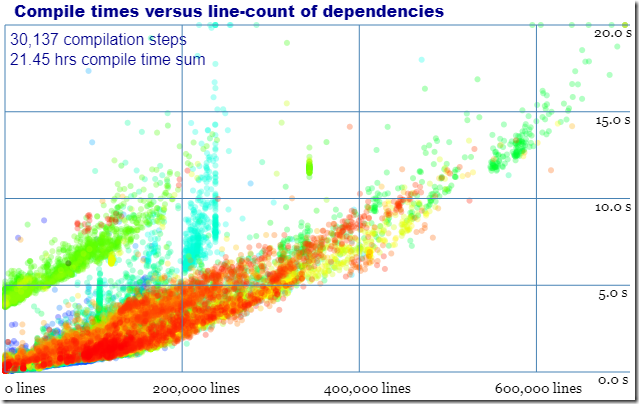

Let’s start with some pretty pictures. The graph below shows the relationship between the number of lines of code in source files and how long it took to compile them. I clamped the really-big and really-slow files and zoomed in to make it easier to see the patterns and… there aren’t any (live diagrams here):

In this diagram and the ones that follow the colors represent when in the build a file was compiled, with blue files happening first, then green, and red files happening last.

The .csv files I generated from my builds have a wealth of data, easily explorable with the supplied scripts:

python ..\count_costs.py windows-default.csv

30137 files took 21.453 hrs to build. 11.856 M lines, 3611.6 M dependent lines

A bit of analysis shows that it makes sense that there is no correlation between source-file length and compile time. The 30,137 compile steps that I tracked consumed a total of 11.9 million lines of source code in the primary source files. However the header files included by these source files added an additional 3.6 billion lines of source code to be processed. That is, the main source files represent just 0.32% of the lines of code being processed.
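The arithmetic behind that percentage is simple enough to check by hand. A minimal sketch, with the two totals copied manually from the count_costs.py output above (the variable names are mine, not the script's); it works out to roughly a third of one percent:

```python
# Totals copied from the count_costs.py output above, in millions of lines.
primary_lines_m = 11.856      # lines in the primary source files themselves
dependent_lines_m = 3611.6    # additional lines pulled in through #include

# Everything the compiler has to process is the sum of the two.
total_m = primary_lines_m + dependent_lines_m
primary_share = 100.0 * primary_lines_m / total_m

print(f"{total_m:,.1f} M lines processed in total")
print(f"primary source files: {primary_share:.2f}% of that")
```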

It’s not that Chromium has 3.6 billion lines of source code. It’s that header files that are included from multiple source files get processed multiple times, and this redundant processing is where the vast majority of C++ compilation time comes from. It’s not disk I/O, it’s repeated processing (preprocessing, parsing, lexing, code-gen, etc.) of millions of lines of code (note the 100% CPU usage, maintained for most of the build, due to ninja’s excellent job scheduling):

But what about precompiled header files? Couldn’t they be used to reduce the overhead of these header files? Well, it turns out that Chromium does use precompiled header files in some areas and the 3.6 billion line number is after those savings have been factored in. More on that later.

Patterns? You want patterns?

Let’s create another chart, this one showing the relationship between the lines of code in all of the include-file dependencies and the compile times. Now we’ve got some patterns (live diagrams here):

Is it just me or does that look like a distorted version of the classic Hertzsprung-Russell diagram of stellar luminosities versus temperatures? No? Just me?

Moving on…

Looking at this graph I can see at least four patterns, numbered here:

1. The bottom of the chart shows a thick band of points curving up and to the right (mostly red, but all colors appear) – the main sequence. This area clearly shows that more lines in dependencies generally leads to longer compile times. The bottom of this band curves up gradually, looking like it’s almost an O(n^2) equation, at least for the minimum cost, although the average and maximum costs look closer to linear.

2. Starting at about the 4.0 second mark on the y-axis (in green) there is a significant set of files that have a minimum compile time of about four seconds, regardless of include size. There are many files in this region whose source-files plus all of their includes are less than 200 lines and yet take 4.0 seconds or longer to compile.

3. There is an odd vertical structure from 200,000 to 240,000 lines of includes (in greenish blue).

4. There is also a perfectly straight vertical line of points at 343,473 lines of includes and around 12 to 13 seconds of compile time (below the digit ‘4’, in green).

The colorful graphs of compile times (live versions available here) are interactive. This means that identifying which files are associated with each of the patterns is as simple as moving the mouse around in that area.

Structures 2 and 4 – Precompiled headers

It turns out that structures 2 and 4 are both related to precompiled header files. Structure 2 is a set of files that use precompiled header files. This leads to a minimum compile cost of about 4.0 seconds (loading precompiled header files in clang-cl is fairly expensive) and a very low dependencies line count – apparently the headers that are precompiled don’t count as dependencies in this context.

The vertical line in structure four is from creating precompiled header (.pch) files. It is perfectly vertical because every one of the precompilations is compiling exactly the same set of headers, all from precompile_core.cc, which includes (through command-line magic) precompile_core.h. For some reason this file gets compiled 59 times, each time creating a 76.9 MB .pch file. This got even worse for a while but has been mitigated – see below.

In short, precompiled headers can be very helpful, but they come with their own costs. In this case there is the roughly 700 seconds (0.193 hours) to redundantly build the .pch files, and then the ~16,000 seconds to load the large .pch file more than 4,000 times, plus the additional dependencies.

Note: if we disable precompiled headers entirely the build gets slightly slower. And the main source files go from 0.32% of the lines of code being processed to just 0.125%! (previously reported as 0.22% due to omission of system header files), with the includes adding up to 9.3 billion lines of code.

By March 2020 the number of copies of the blink .pch file had grown to 67 with each one now 90 MB. I ran some experiments with shrinking the blink precompiled header files and found that if I reduced precompile_core.h dramatically I could:

- Cut out almost 90% of the cost of creating the .pch files

- Cut out almost 95% of the 5.5 GB size of the .pch files

- Slightly lower the average compile time for Blink source files

- Reduce accidental dependencies – translation units depending on headers that they don’t need

Those improvements were good enough that I was able to get a change landed to reduce what precompile_core.h includes.

I got the cost-reduction numbers above from a build-summarizing script I wrote, but when I created graph creation tools for this blog post it made sense to apply them to this change, to better visualize the improvements, so I patched the precompiled header change into my old Chromium build. And, I realized that I could animate the compile-times and the number of include lines for each target, to make a movie showing the transition from old to new. In reality the change was a jump from one state to the other, but showing it as motion makes it easier to see patterns.

In this video (which just shows source files in Blink, to reduce the noise) you can see the files which create the precompiled-header files (pattern 4) moving down and to the left, because they are including fewer files and compiling faster. You can also see the many files which consume the precompiled header files (pattern 2) moving to the right because they now have more header files to consume – recall that precompiled headers aren’t counted – and moving both up and down (both longer and shorter compiles).

The animation makes it crystal clear that this change didn’t help all blink source files. Some got slower, so I might have to try using the original precompile_core.h for some files – this blog post sure triggers a lot of work!

In addition to visualizing the savings I (and you) can use one of my scripts to measure, for instance, the before/after costs of creating the precompiled header files:

python ..\count_costs.py windows-default.csv *precompile_core.cc

59 files took 0.193 hrs to build. 0.000 M lines, 20.3 M dependent lines

python ..\count_costs.py windows-pchfix.csv *precompile_core.cc

59 files took 0.021 hrs to build. 0.000 M lines, 2.6 M dependent lines
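The two runs above can be compared directly. A minimal sketch, with the numbers copied by hand from the output rather than parsed from the .csv files (`percent_reduction` is a helper I made up for this example, not part of the supplied scripts). It gives a reduction of about 89% in build time and about 87% in dependent lines:

```python
def percent_reduction(before, after):
    """Return how much smaller `after` is than `before`, as a percentage."""
    return 100.0 * (before - after) / before

# Figures copied from the two count_costs.py runs above.
hours_saved = percent_reduction(0.193, 0.021)   # build time, in hours
lines_saved = percent_reduction(20.3, 2.6)      # dependent lines, in millions

print(f"build time down {hours_saved:.1f}%, dependent lines down {lines_saved:.1f}%")
```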

Our full results with this change patched in to the old build now look like this (live diagrams here):

The two precompile-related patterns are now gone which just leaves us with the main sequence and the bluish-green tower (pattern #3) at just past 200,000 include lines. A bit of spelunking shows that the tower is mostly v8 files, especially source files generated by the build, which all include expensive-to-compile header files. In general generated source files can consume much compilation time. I hope to make some improvements there, but that will have to wait until after the blog post:

python ..\count_costs.py R710480-default.csv gen\*.cc

5662 files took 4.065 hrs to build. 3.027 M lines, 836.7 M dependent lines

What if we had fewer source files…

My original belief was that having fewer source files would improve build times, by reducing redundant processing of header files. Wouldn’t it be nice if there was some way to test this? It turns out that there is. A classic technique for doing this is to treat your .cpp files like include files. That is, instead of compiling ten .cpp files individually, generate a .cpp file that includes them, and compile those generated files. This technique is sometimes called a unity build. The generated files look something like this:

#include "navigator_permissions.cc"

#include "permissions.cc"

#include "permission_status.cc"

#include "permission_utils.cc"

#include "worker_navigator_permissions.cc"

If the included C++ files share a significant number of header files – usually the case if the files are related – then a significant amount of work can be avoided.

Some people have argued against this by pointing out that if you #include all of your source files into one then your incremental build times get worse. Well. Yeah. So don’t do that. There’s a lot of middle ground between compiling everything separately and compiling everything in one translation unit.

For a while Chromium had an option to do this, called jumbo builds, created by Daniel Bratell. This system defaulted to trying to #include 50 source files in each generated file (subject to constraints) and this was configurable. These jumbo builds significantly reduced the time to do full rebuilds of Chromium on machines with few processors. For incremental builds and massively parallel builds the benefits of jumbo builds were lower.
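To make the idea concrete, here is a minimal sketch of how such generated files could be produced. It is not Chromium's jumbo implementation, which applied extra constraints that I don't model here; the function name `make_unity_sources` and the `unity_N.cc` file names are invented for illustration, and `merge_limit` merely plays the role of the configurable per-file limit described above.

```python
def make_unity_sources(source_files, merge_limit=50):
    """Group source files into chunks and return the text of each unity file.

    Each returned string #includes at most `merge_limit` source files.
    """
    if merge_limit < 1:
        raise ValueError("merge_limit must be at least 1")
    unity_files = []
    for start in range(0, len(source_files), merge_limit):
        chunk = source_files[start:start + merge_limit]
        unity_files.append("".join(f'#include "{name}"\n' for name in chunk))
    return unity_files


sources = [
    "navigator_permissions.cc",
    "permissions.cc",
    "permission_status.cc",
    "permission_utils.cc",
    "worker_navigator_permissions.cc",
]

# With a limit of three, five sources become two generated files.
for index, text in enumerate(make_unity_sources(sources, merge_limit=3)):
    print(f"// hypothetical unity_{index}.cc")
    print(text)
```

A small limit keeps each generated translation unit cheap to rebuild, while a large one saves more redundant header processing.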

I decided to do three different jumbo builds with jumbo_file_merge_limit set to three different values. I would have done more small numbers but 2-4 failed due to command-line limits that I didn’t feel like addressing.

The graph below shows four points which are, left to right, merge amounts of 50, 15, 5, and the default build. The graph shows how the total number of hours of compile time goes down as the number of translation units compiled is reduced.

The downwards curve of the graph suggests that if we can reduce the number of translation units to 10,000 then the compile times will hit zero but I would advise readers not to trust that extrapolation.

One of the common complaints about jumbo/unity builds is that, by glomming many files together, they make individual compiles take longer. Let’s examine that:

python ..\count_costs.py R710480-default.csv

30137 files took 21.453 hrs to build. 11.856 M lines, 3611.6 M dependent lines

Averages: 393 lines, 2.56 seconds, 119.8 K dependent lines per file compiled

So, our default compile takes an average of 2.56 seconds per source file. What of our jumbo build with up to five C++ files per compilation:

python ..\count_costs.py R710480-jumbo05.csv

15880 files took 10.744 hrs to build. 5.925 M lines, 1905.0 M dependent lines

Averages: 373 lines, 2.44 seconds, 120.0 K dependent lines per file compiled

Uh. Wait a minute. We’ve almost cut the number of files compiled in half, and the average compile time has… dropped?

No, this isn’t an error. This happens because jumbo was applied mostly to expensive-to-compile files. Since most of their cost is header files the combining of them barely increased their compile cost. Since there are now fewer expensive files the average cost drops. It’s a miracle! It turns out you have to go to the 84th percentile before jumbo-5 files take longer to compile, and even the most expensive file only takes 42% longer to compile. There is such a thing as a free lunch. In addition jumbo linking may be faster due to reduced redundant debug information.
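A toy model shows how the average can drop even though every merged file is slower than its individual parts. All of the numbers below are made up for illustration; they are not measurements from the Chromium build.

```python
def average(costs):
    """Mean compile time, in seconds, of a list of compile steps."""
    return sum(costs) / len(costs)


HEADER_COST = 4.0   # seconds spent on shared headers (made-up number)
OWN_COST = 0.5      # seconds spent on each file's own code (made-up number)

cheap = [1.0] * 6                            # files that jumbo leaves alone
expensive = [HEADER_COST + OWN_COST] * 4     # header-heavy files, compiled one by one
merged = [HEADER_COST + 2 * OWN_COST] * 2    # the same four files, two per translation unit

default_build = cheap + expensive
jumbo_build = cheap + merged

print(f"default: {len(default_build)} compiles, {sum(default_build):.1f} s total, "
      f"{average(default_build):.2f} s average")
print(f"jumbo:   {len(jumbo_build)} compiles, {sum(jumbo_build):.1f} s total, "
      f"{average(jumbo_build):.2f} s average")
```

In this model the slowest compile gets slower (5.0 seconds instead of 4.5) while both the total and the average go down, because the header cost is paid twice instead of four times.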

This all looks great, but, there are problems.

The main one is that when you #include a bunch of source files together then the compiler treats them as one translation unit, with one anonymous namespace, but the programmers writing the code see each source-file as independent. This caused obscure compiler errors in Chromium as identically named “local” functions and variables conflicted, but only in the jumbo configuration. The jumbo build effectively meant we were programming in an odd dialect of C++ with surprising rules around global namespaces.

Jumbo builds (if taken to excess) can also make incremental builds slower – because the compile-times for a large batch of source files can be non-trivial – and more tweaks are needed to work around this. Even though the average is better there are a bunch of 99th percentile files which take longer to compile, especially if jumbo_file_merge_limit is set too high.

Jumbo builds also create additional coupling at link time as “unused source files” get linked in and then require all of their dependencies as well. Without care this can lead to shipping binaries that are bloated with test code, or surprising link errors.

Additionally, Google’s massively parallel goma build never benefited from jumbo. So, jumbo builds were deemed too much of a hack and were turned off. I was only able to use it for this post by syncing to the commit just before it was disabled – and I had to fix two name conflicts and some other glitches in anonymous namespaces to get Chromium to compile with it.

Reductio ad absurdum

The logical extension of source files with little code and lots of header files would be source files with zero code and lots of header files. But surely such files would never exist. Right?

Well…

While working on this I noticed that compiling of source files generated by mojo (Chromium’s IPC system) took quite a while. While poking at these I found that 680 of the generated C++ files (21% of the total) contained no code. Due to the size of the header files these no-code C++ files were collectively taking about twenty minutes of CPU time to build! I landed a change that detected this situation and removed the #includes when no code was detected. This was a simple change that reduces Chromium’s build time significantly in absolute terms (four to five minutes elapsed time on a four-core laptop) but as a percentage (~1.6%) barely moves the needle.
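For readers who want to look for similar files in their own projects, here is a rough sketch of a "does this file contain any code?" check. It is not the change that landed in Chromium (I am not reproducing that here); `has_code` is a hypothetical helper, and its comment stripping is deliberately naive: it does not understand string literals, and it treats empty namespace blocks as code.

```python
import re


def has_code(source_text):
    """Return True if the text contains anything besides comments,
    blank lines and preprocessor directives such as #include."""
    # Strip /* ... */ block comments first, then // line comments.
    text = re.sub(r"/\*.*?\*/", "", source_text, flags=re.DOTALL)
    text = re.sub(r"//[^\n]*", "", text)
    for line in text.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith("#"):
            return True
    return False


# Hypothetical file contents, standing in for generated .cc files.
only_includes = '// Generated file\n#include "a.h"\n/* nothing\n here */\n'
with_function = '#include "a.h"\nint f() { return 1; }\n'

print(has_code(only_includes), has_code(with_function))
```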

Now that I have the scripts needed to create videos to show compile-time improvements I figured I might as well animate this one as well – you can see some of the source-files racing towards the origin (tested on a March 2020 repo):

Why so long?

My initial Twitter guess was that build times grow roughly in proportion to the square of the number of translation units. If we assume a large project with N classes, each with a separate .cc file and .h file then the number of compile steps is N. If 10% of the header files are used (often indirectly) by each .cc file then our compile cost is N * 0.10 * N, which is O(N^2). QED.
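The same back-of-the-envelope model as a few lines of Python, assuming (as above) that every .cc file pulls in a fixed 10% of all headers. The function name `header_parses` is mine, and real projects will not follow this exactly:

```python
def header_parses(num_classes, fraction_used=0.10):
    """Number of header-file parses in a full build of the toy project.

    Each of the N .cc files pulls in `fraction_used` of the N headers.
    """
    return num_classes * fraction_used * num_classes


# Doubling the number of classes quadruples the header work.
for n in (1_000, 2_000, 4_000):
    print(f"N = {n:>5}: {header_parses(n):>12,.0f} header parses")
```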

Other work in this area

I am far from the first person to try their hand at investigating Chromium’s build times. This is hardly surprising because Chromium’s build times have always been daunting, and different visualizations often reveal different opportunities.

The color coding scheme (and the basis of my graphing code) that I used in my plots comes from this excellent 2012 post by thakis.

Much more recently an alternate denominator – tokens instead of lines of code – was proposed in this January 2020 document. I chose to stick to lines of code because everyone knows how to measure them and the correlations in Chromium are similar.

For understanding why individual source files take a long time to compile we now have the excellent -ftime-trace option for clang which emits flame charts showing where time went. This flag can be set in Chromium’s build by setting compiler_timing = true in the gn args. When this is done a .json file will be created for each file compiled, and these can be loaded into chrome://tracing, or programmatically analyzed. In particular the author of -ftime-trace has created ClangBuildAnalyzer which can do bulk analysis of the results.
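As a taste of what "programmatically analyzed" can mean, here is a minimal sketch that sums event durations by name. It assumes the trace follows the Chrome trace-event layout (a top-level "traceEvents" list whose complete events have "ph" set to "X" and a "dur" in microseconds); the event names in the hand-written sample are my assumption about what clang emits, so check them against a real trace before relying on them.

```python
import json
from collections import defaultdict


def seconds_by_event(trace_text):
    """Sum the duration of complete ("X") events in one trace, by event name."""
    totals = defaultdict(float)
    for event in json.loads(trace_text).get("traceEvents", []):
        if event.get("ph") == "X" and "dur" in event:
            totals[event["name"]] += event["dur"] / 1e6   # microseconds -> seconds
    return dict(totals)


# A tiny hand-written trace standing in for a real -ftime-trace output file.
sample = json.dumps({"traceEvents": [
    {"ph": "X", "name": "Source", "ts": 0, "dur": 1500000, "args": {"detail": "a.h"}},
    {"ph": "X", "name": "Source", "ts": 1500000, "dur": 500000, "args": {"detail": "b.h"}},
    {"ph": "X", "name": "Total Frontend", "ts": 0, "dur": 2500000},
    {"ph": "M", "name": "process_name", "args": {"name": "clang"}},
]})

for name, seconds in sorted(seconds_by_event(sample).items()):
    print(f"{name}: {seconds:.2f} s")
```

Note that nested events overlap in time (a header that includes another header is charged for both), so sums like these are best used for ranking rather than as exact costs.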

This Chromium script looks for expensive header files in a Chromium repo. It could potentially be combined with -ftime-trace for more precise measurements.

ninjatracing converts the last ninja build’s build-step timing into a .json file suitable for loading into chrome://tracing to visualize the parallelism of the build.

post_build_ninja_summary.py is designed to give a twenty line summary of where time went in a ninja build. If the NINJA_SUMMARIZE_BUILD environment variable is set to 1 then autoninja will automatically run the script after each build, as well as displaying other build-performance diagnostics. This script was enhanced recently to allow summarizing by arbitrary output patterns.

There is an ongoing project to improve Chromium build times by making more use of mojom forward declarations instead of full definitions wherever possible. This is tracked by crbug.com/1001360.

There is an ACM paper (temporarily freely accessible) discussing unity/jumbo builds in WebKit in great detail.

Reproducing my work

The scripts and data that I created for this blog post are all on github. They simply leverage ninja and gn’s results to create .csv files, and allow easy queries of the .csv files. The web page source is available, with the live page itself here. Click on any point on a graph to save it as a .png, and hover over it to see details on individual source files.

But it’s not science unless other people can reproduce the results. In order to follow along you need to get a Chromium repo (not a trivial task, but possible, just follow these instructions). Then, you need to run one or both of the supplied batch files (consider running them manually since some steps may be error prone, take 5+ hours, and consume dozens of GB of disk space):

- test_old_build.bat – this batch file checks out an old version of Chromium’s source, patches it so that jumbo works, and then builds Chromium with five different settings. Expect this to take about 20 hours on a four-core machine with lots of memory and a fast drive.

- test_new_build.bat – this batch file assumes that you are synced to a recent version of Chromium’s source, it patches in a change so that system headers are tracked and then builds Chromium, and then does incremental builds with and without the mojo empty-file fix. Expect this to take about 13 hours on a four-core machine with lots of memory and a fast drive.

All of my test builds use the “-j 4” option to ninja, for four-way build parallelism. My laptop has four cores and eight logical processors, and ninja would default to ten-way parallelism, but I wanted each compile process to have a core to itself, to minimize interference. You should adjust this setting based on your particular machine and scientific interests. Using all logical processors with “-j 10” instead of “-j 4” makes my builds run about 1.28 times as fast.

If you don’t want to spend hours getting a Chromium repo and building multiple variants you can still do some Chromium build-time analysis. Just clone the repo and you can run count_costs.py on various .csv files with varying filters to see which files are the worst. If you find anything interesting, let me know.

And, if you want to fix any of the issues, Chromium is open source.

Issues found while writing this

- Empty files generated by mojo – crbug.com/1054626, fixed in this change

- Precompiled files are too big, causing wasted time creating them, wasted time loading them, and 5+ GB of wasted disk space – crbug.com/1061326, fixed in this change

- Precompiled files generated redundantly – crbug.com/1060840. This was reported a long time ago. Since then the number of redundant builds has increased, but the change to shrink precompile_core.h has made this redundant building less critical.

- Clang loads precompiled files slowly – this has been known for a while and is discussed on crbug.com/672115 and there is ongoing work on a patch to improve this.

- Windows Photos can’t correctly display images with alpha. I tweeted about that here and included a link to the bad photo here.

Conclusions

- Precompiled header files have tricky tradeoffs that are difficult to assess.

- Chromium’s average source-file size is smaller (393 lines) than I expected.

- If you are creating a large project and build times are important to you then the most important thing you can do is to prohibit small source files.

Reddit discussion is here and (mostly) here.

Twitter discussions are here, here, here, and various other places.

Consider looking at ClangBuildAnalyzer as well (https://github.com/aras-p/ClangBuildAnalyzer). It will aggregate the .json files from -ftime-trace to report other statistics. For example, I discovered that almost all of my unit test compile time is spent on std::tuple compilations.

Ah – excellent. I added a link to that and I’ll have to try it.

This blog post really has made too many to-do items for me…

On Minecraft, we created a unity build to experiment with build times (though ours are much lower than Chromium’s anyway). In addition to the gotchas you mention above, we ran into issues with #defines being on and off in unexpected places, causing TUs to behave very strangely.

In my experience, if you offer both a unity build and non-unity build, then your unity build is going to be constantly broken, and in unexpected ways, as devs who use the ‘normal’ build accidentally break the unity build. For that reason, our shipping builds never were built with the unity flag enabled (except for one platform that had some special limitations), and since we didn’t ship that way, and saw differences in behavior constantly between unity and non-unity builds, the unity builds fell out of favor pretty quickly.

I’m sure a happy medium could be found, but I suspect it would take some non-trivial investment in the build pipeline, along with an ongoing maintenance tax keeping the two flavors of builds in ‘sync’

Unreal Engine supports toggling unity build whenever you want for individual modules or the entire engine, and it works very well. Maybe worth looking at how they’ve done it?

Mozilla avoided breaking Unified builds by making all the automation/integration builds that happen on code checkin be unified builds, and all our test coverage and manual testing, and release builds are and have been for a very long time unified. We also have a habit of avoiding “generic” defines like “DEFAULT” or “PREFIX”. In some cases we add some #undefs at the start of a file. And we only combine files in the same source directory.

Some imported libraries (zlib for example) have unified turned off because they don’t have include-guards.

Unity builds have one hidden gotcha – they can lead to pretty funny ODR violations which are detected only at runtime…Oops the wrong function got called, sorry, don’t give them the same names even in different files… The real solution is switching the language for one designed to work on modern machines and optimized for programmer productivity … And it is not rust, sorry… Unfortunately very few language designers and language developers care for these things…

Mozilla uses unified builds exclusively now (based on directory); for a long time we maintained both, but for most of that time unified builds were the standard.

Occasionally we’d break something. Typically someone using unified that didn’t realize some other file in the directory had already included foo.h and so didn’t include it – which is fine, until the directory structure changes/new files added/etc, and the unify list changes and suddenly it’s not in a unit that already included foo.h. Note that this can and does happen regardless of if you support both. Similar things can happen for defines, as you note (and conflicts). Generally these are easy to resolve, and happen when someone lands a patch that adds/removes/moves files.

To resolve conflicts, we typically have a list of files in each moz.build file that shouldn’t be unified; something like:

webrtc_non_unified_sources = [

'trunk/webrtc/common_audio/vad/vad_core.c', # Because of name clash in the kInitCheck variable

'trunk/webrtc/common_audio/vad/webrtc_vad.c', # Because of name clash in the kInitCheck variable

'trunk/webrtc/modules/audio_coding/acm2/codec_manager.cc', # Because of duplicate IsCodecRED/etc

]

Note that most directories/moz.build files don’t have any.

I’m trying a -j 4 build to compare; I imagine our source trees are roughly comparable in size and complexity. Normally a build on my desktop here (14×2 core intel i9 7940 IIRC) takes around 15 minutes; and a fair bit of that is gated by single-threaded rust library link optimization (several minutes of it). Touching a typical c++ file and rebuilding (when you know there are no js/buildconfig/test changes) takes perhaps 15-30 seconds, most of that link time. We have a lot of Rust code now. In theory, 4 cores should be ~1/7th as fast (modulo hyperthread overhead), so you’d think circa maybe 1.25-1.5 hours (since the single-threaded rust stuff and linking aren’t affected by cores). Also note it’s not a laptop and has higher clockrates and better cooling.

Ok, with -j 4 a build took 44:18, so faster than I expected (assuming the -j 4 worked 100% correctly – it was WAY less than 100% cpu every time I looked). OS reported avg CPU utilization was 388%, which matches -j 4 pretty well.

Great post, thanks. I remember reading somewhere that Unity builds were introduced in Chromium to ease the pain of the outside external contributors without any access to goma. Guess there were too many conflicts.

I wonder what happened with that

https://lists.llvm.org/pipermail/cfe-dev/2018-April/057579.html

Great post!

A few more resources on build performance: https://github.com/MattPD/cpplinks/blob/master/building.md#build-performance

A very interesting analysis, and thanks for making the data available.

Is a list of the top-level includes for each source file available? If so, a regression analysis can be used to estimate the contribution of each header to compile time.

One I did earlier: http://shape-of-code.coding-guidelines.com/2019/01/29/modeling-visual-studio-c-compile-times/

That would be an interesting project. The -ftime-trace data could be used to make that more accurate and more detailed. I hope to spend more time on that sort of work. Ultimately I want to focus on investigations that are most likely to lead to improvements, but that is difficult to predict.

Thanks for the post and the scripts! Much appreciated.

I successfully hacked your scripts to work on our open source project (and to run on macOS). I am just starting to dig into the data a bit and have already addressed a small issue with one file in our code.

My first thought – because I know this is an issue with our project – was an analysis of headers & includes as well. It’s a bit of a mess – many, many includes – and I’d like to target the ones that cost the most to compile and/or are processed most frequently.

Is there a way to do this with the ninja data or would we need to get it from the compiler like you mention? (I’m trying to use Apple’s clang and avoid installing new compilers…)

The ninja data just tells you what files were included, not how long they (and their descendants) took to process. For that you should look at -ftime-trace and ClangBuildAnalyzer (the aggregator for -ftime-trace).

Even that has limitations because if Foo.h includes Bar.h then it will be “charged” for that cost, unless Bar.h was previously included independently. Which is to say that assigning meaning to the numbers can be challenging. But, I am certain it will help.

I’m glad you found the scripts useful. I’d be curious as to what the issue you found was, and if you create any visualizations (or animations!) please share.

Thanks Bruce! That makes sense.

I have not created any visualizations yet – just skimming the CSV.

I sorted the CSV by time and looked at the top few.

One of them (#14) was taking 7.3 seconds and had 1264 deps.

I took a look at the file and removed a bunch of unnecessary includes. After re-compiling, it dropped to #90, taking 3.2 seconds with 489 deps. A quick win for a few minutes work and I haven’t even dug into the data yet.

I think, because of the nature of the code base, there are probably a handful of headers that are killing us – and one cpp file that dominates that we can’t do much about because it’s 3rd party (see max below).

count_costs (I have 8 cores):

442 files took 0.335 hrs to build. 0.295 M lines, 64.9 M dependent lines

Averages: 668 lines, 2.73 seconds, 146.7 K dependent lines per file compiled

min: 0.0, 50%ile: 2.1, 90%ile: 4.3, 99%ile: 12.4, max: 131.2 (seconds)

Thanks for the detailed post, and the view into tools to help manage truly large projects.

I’m the one on our team who, when build times creep up, sifts through our code base removing redundant #includes, converting to forward declarations, etc.

Selfishly, the next time someone complains to me that our builds are too slow, perhaps I will point them to this post to show them what long build times really are!

Oh, and “Hi, Randell!” Great to see your name pop up here.

If you just clean up after people they’ll never start keeping the place tidy themselves.

I don’t just clean up. Each round is a teaching opportunity. Some of our developers are fairly new to C++.

The primary rule is, “Always think twice before #including a header in another header.”

Next stop: C++ modules 🙂

Would be interesting to see numbers.

Regarding clang PCH performance, there seems to be another patch on top of the already mentioned one: https://llunak.blogspot.com/2019/11/clang-precompiled-headers-and-improving.html

All the projects I’m involved with exclusively use Unity builds, which eliminates any problems with maintaining two configurations and also means we build the same way that we ship.

To get around issues with iteration time with large unity files, we compile locally modified files separately. We use FASTBuild (which I am the author of) to manage the unity creation (automatically based on files on disk) and also to automatically manage the exclusion of locally modified files (writable when checked out from source control).

All this means we can have fairly large Unity files (30 seconds+ each to compile) while still having great iteration times.

The setup we have now is literally over 10x faster than before, and I had similar experiences (with all the same stuff in place) at my previous employers.

It seems for such large projects there is no way around a build cluster, especially if you are pulling from upstream regularly.

WebKit also uses unified builds when using CMake or Xcode; without them WebCore takes forever to compile. (With it enabled, it just takes a really long time 😉 ) I think there’s a general ban on “using namespace” at the global level to prevent issues when running the build. There’s a relevant paper, too: https://dl.acm.org/doi/10.1145/3302516.3307347 (some of the authors work directly on WebKit).