Stuff The Internet Says On Scalability For September 14th, 2018

Hey, it's HighScalability time:

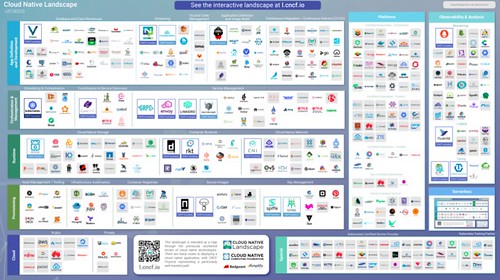

The Cloud Native Interactive Landscape is fecund. You are viewing 581 cards with a total of 1,237,157 stars, market cap of $6.86T and funding of $20.1B. (changelog)

Do you like this sort of Stuff? Please lend me your support on Patreon. It would mean a great deal to me. And if you know anyone looking for a simple book that uses lots of pictures and lots of examples to explain the cloud, then please recommend my new book: Explain the Cloud Like I'm 10. They'll love you even more.

- 72: signals sensed from a distant galaxy using AI; 12M: reddit posts per month; 10 trillion: per day Google generated test inputs with 100s of servers for several months using OSS-Fuzz; 200%: growth in Cloud Native technologies used in production; $13 trillion: potential economic impact of AI by 2030; 1.8 trillion: plastic pieces eaten by giant garbage pac-man; 100: min people needed to restart humanity;

- Quotable Quotes:

- Joel Hruska: Farmers in California have lost the fight to be allowed to repair their own tractors and equipment thanks to the capitulation of their own lobbying group. This issue has been gathering steam for the last few years, after aggressive moves by John Deere to lock down the equipment.

- @RachFrieee: 3.6B events processed to date 300k+ users globally 50% of the Fortune 100 use @pagerduty 10,500+ customers of every size 300+ integrations 🔒 Enterprise grade security #PDSummit18 @jenntejada

- @editingemily: "In peacetime, a ticketing system is great... American Eagle loses $200K per minute of downtime. You gonna make a ticket? Burn a cool million?" 😂🤣😂 @jenntejada #PDSummit18

- NSF: When the HL-LHC reaches full capability in 2026, it is expected to produce more than 1 billion particle collisions every second, marking a 10-fold increase that will require a similar 10-fold increase in data processing and storage, including tools to collect, analyze, and record the most relevant events. Uniting multidisciplinary teams of researchers and educators from 17 universities, IRIS-HEP will receive $5 million a year for five years from the NSF with a focus on producing innovative software and training the next generation of users.

- @aallan: “Moore’s Law is really dead, but the lesser known Dennard Scaling Law is also dead… we’re almost at the end of the line, last year performance only improved 3 percent,” says David Patterson. #TheAIConf

- Gary Bronner~ DRAM scaling is starting to run out of steam. The industry continues to scale from the 1x, to the 1y, to the 1z generation, but the benefit from each generation is getting smaller and smaller. Because of that big servers and other memory systems need to have another kind of memory in the hierarchy. The new memory hierarchy is SRAM, DRAM, SCM (storage class memory), SSD, HDD. SCM slots between DRAM and flash in terms of latency, cost, and density. It's a way of adding more memory when DRAM alone can't give you all the performance you want.

- @sethearley: 7 areas of opportunity for #AI in teaching. 1. Feedback & Scoring, 2. College readiness, 3. Empowering students with challenges, 4. Behavior management, 5. Curriculum development, 6. Higher order learning, 7. Professional development. #TheAIConf

- @The_McJones: “How do we work out who wins? Well we don’t go to the bar and talk it out, instead we’ve decided to all spend billions of dollars and let the market figure it out” - David Patterson #theAIConf

- DSHR: As I understand it, Amazon's S3 is redundant across three data centers, whereas Backblaze's B2 is not. Thus, despite both quoting 11 nines of durability against hardware failures, S3 is durable against failures that B2 is not, and is thus better.

- @dr_c0d3: 2000: Write 100s of lines of XML to "declaratively" configure your servlets and EJBs 2018: Write 100s of lines of YAML to "declaratively" configure your microservices At least XML had schemas...

- Programming Rants: This time PHP7 became the best performing programming language implementation, also the least memory consumption (I'm amazed with what they did in version 7).

- @BenedictEvans: With each cycle in tech, companies find ways to build a moat and make a monopoly. Then people look at the moat and think it's invulnerable. They're generally right. IBM still dominates mainframes and Microsoft still dominates PC operating systems and productivity software. But...

- @The_McJones: “So we strapped a bunch of FitBits onto cows” - Peter Norvig #TheAIConf

- @noahsussman: Complex systems are intrinsically hazardous systems. Complex systems are heavily and successfully defended against failure. Catastrophe requires multiple failures – single point failures are not enough. Complex systems contain changing mixtures of failures latent within them. Complex systems run in degraded mode. Catastrophe is always just around the corner. Post-accident attribution to a ‘root cause’ is fundamentally wrong.

- @copyconstruct: Putting the "micro" back in microservice. https://bit.ly/2NZUX0g - argues that process-level isolation is too slow for FaaS - FaaS running on shared processes, isolated primarily with language-based compile-time guarantees and fine-grained preemption have better perf profiles - "defence in depth" using tasks written in Rust (guarantees computations executing within a shared worker don't segfault) and seccomp based whitelisting of syscalls. - use of a "pseudo stack" on top the real stack frame to allow signal handlers to panic

- @aallan: “This is an arm that can see…” says @josephsirosh, “all it takes is cheap components, like a #RaspberryPi, and an #Arduino board. But we all know the secret is ML in the cloud.” #TheAIConf

- @mcclure111: C is a cool programming language where if you want to return a string from a function you have to set up an entire physical-universe human social system for adjudicating who is responsible for freeing it "In order to create a C string, you must first create civilization"

- Microsoft: Why didn’t VSTS services fail over to another region? We never want to lose any customer data. A key part of our data protection strategy is to store data in two regions using Azure SQL DB Point-in-time Restore (PITR) backups and Azure Geo-redundant Storage (GRS). This enables us to replicate data within the same geography while respecting data sovereignty. Only Azure Storage can decide to fail over GRS storage accounts. If Azure Storage had failed over during this outage and there was data loss, we would still have waited on recovery to avoid data loss.

- Elon Musk: The electric airplane isn't necessary right now. Electric cars are important. Solar energy is important. Stationary storage of energy is important. These things are much more important than creating electric supersonic [airplanes]. It's important that we accelerate the transition to sustainable energy.

- @rakyll: I do. The current state of error handling in Go is a wild experiment. It only encourages to bubble up errors rather than handling them. Error values don't have any diagnostics bits. We have production systems written in this language now.

- Jack Scott: I recently developed a simple app and used WakaTime to measure the time I spent on my coding. Here are the results: React Redux front-end repository — 32 Hours. Express + Mongoose back-end repository — 4 Hours.

- swimorsinka: Hmm, I use Redshift every day and I've also used BigQuery. You can't have a valid benchmark without adding sort keys and dist keys to Redshift. It really isn't meant to work without them. I understand it's more work that way, which is why BigQuery is so nice. But if you're trying to do lots of queries, BigQuery is also more expensive.

- enitihas: We have [migrated back] from Google cloud functions because they are a joke (see cold start graph on OP link). Even during development it was horribly painful to have 10+ second call times on many many requests while testing (and the same in production). Even requests that are only a few minutes or seconds away from each other would cold start randomly.

- Horace Dediu: The important call to make is that Apple is making a bet that sustainability is a growth business. Fundamentally, Apple is betting on having customers not selling them products. The purpose of Apple as a firm is to create and preserve customers and to create and preserve products. This is fundamental and not fully recognized.

- Zuckerberg: One of the important lessons I’ve learned is that when you build services that connect billions of people across countries and cultures, you’re going to see all of the good humanity is capable of, and you’re also going to see people try to abuse those services in every way possible.

- The Woman Who Smashed Codes: Codebreaking is about noticing and manipulating patterns. Humans do this without thinking. We’re wired to see patterns. Codebreakers train themselves to see more deeply.

- Rabble: Do you see the irony in this? Apple killed Odeo for the iPod, and then Twitter exists and is successful because of the iPhone.

- @garybernhardt: mixins are just weird inheritance and promises are just weird callbacks

- @JoeEmison: This is the benefit of serverless done correctly; you mitigate the risk of access to tech talent by limiting your needs to talent needed for UX.

- @bigmediumjosh: So you know: 50% of mobile data is used to download ads.—@yoavweiss at #smashingconf

- Corey Quinn: Then we do an analysis and we learn that the developer environments are something on the order of three percent of their bill. Production is what has scaled up. That’s where the low-hanging fruit is.

- Jakob: Always Measure One Level Deeper [John Ousterhout] – to understand the observed system behavior at one level, you have to measure the level below it. This is the idea expressed in the title of the article, and it is really the most powerful insight in the article. Part of this is to also look at more than simple averages – the data has to be examined and understood. Are there outliers, scattered values all over the place, or a nice smooth distribution?
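To make the "more than simple averages" point concrete, here is a minimal Python sketch. The sample latencies are invented for illustration, and `summarize` is a hypothetical helper, not anything from the article. It reports the mean next to the median, the 99th percentile, and the maximum.

```python
import statistics

def summarize(latencies_ms):
    """Return the mean alongside median, p99, and max for a list of samples."""
    ordered = sorted(latencies_ms)
    # quantiles(n=100) returns 99 cut points; index 98 is the 99th percentile.
    cuts = statistics.quantiles(ordered, n=100, method="inclusive")
    return {
        "mean": statistics.fmean(ordered),
        "median": statistics.median(ordered),
        "p99": cuts[98],
        "max": ordered[-1],
    }

# Hypothetical samples: 98 fast requests and 2 very slow ones.
samples = [10.0] * 98 + [2000.0] * 2
summary = summarize(samples)
```

With these made-up samples the mean lands near 50 ms, a latency that no request actually experienced. The median and the p99 together show the two populations the average hides.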

- Corey Quinn: Last year when there was a four-hour outage to the standard (US East) region of S3, we saw a lot of knee-jerk reactions where everyone was moving all their things over to multi-region buckets, doing replications, setting up multi-homed things. You’re going to double or triple your infrastructure costs by doing that.

- @colmmacc: This is how we build the most critical control planes at AWS. For example if you use Route 53 health checks, or rely on NLB, or NAT GW, the underlying health statuses are *ALWAYS* being pushed around as a bitset. ALWAYS ALWAYS. A few statuses can change (which is normal), and the system reacts, or they can ALL change, and it will react just the same (which is abnormal). We could have lots of targets suddenly disappear .... break ...
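A rough Python sketch of the "always push the full bitset" idea. This is an assumption-laden illustration, not AWS's implementation: the function names and the 20-target fleet are made up. The point is that the message has the same size, and takes the same work to produce, whether zero, one, or all statuses changed.

```python
def encode_health(statuses):
    """Pack a list of booleans (True = healthy) into a fixed-size bitset."""
    bits = bytearray((len(statuses) + 7) // 8)
    for i, healthy in enumerate(statuses):
        if healthy:
            bits[i // 8] |= 1 << (i % 8)
    return bytes(bits)

def decode_health(bitset, count):
    """Unpack the first `count` bits back into a list of booleans."""
    return [bool(bitset[i // 8] & (1 << (i % 8))) for i in range(count)]

# Hypothetical fleet of 20 targets in three very different situations.
all_up = encode_health([True] * 20)
one_down = encode_health([True] * 19 + [False])
all_down = encode_health([False] * 20)
```

All three payloads are three bytes long, so a mass failure costs the receiver no more than a quiet day.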

- The Woman Who Smashed Codes: A then-secret document said of Elizebeth, “She and her husband are among the founders of American military cryptanalysis”—cryptanalysis is another word for codebreaking—and a federal prosecutor told the FBI that “Mrs. Friedman and her husband . . . are recognized as the leading authorities in the country.” Yet in the canonical books about twentieth-century codebreaking, Elizebeth is treated as the dutiful, slightly colorful wife of a great man, a digression from the main narrative, if not a footnote. Her victories are all but forgotten.

- Karen Piper: By the time my mom arrived on the scene, calculators were already “miniature,” but computers were enormous. She was at the forefront of the shift to computers that was a room-sized IBM mainframe set up in the basement. She told stories about “punch cards” and how the IBM had to constantly be fed with them. That was “programming” then. Ironically, as computers became central to the process of building a missile, and then guiding missiles, women became central, too. The men did not know how to use them. My mom once said to me, “I was shocked that these guys did not know how to do basic things like plotting. I had to do everything for them. Except for Mr. Bukowski, of course. He knew it was important to learn.” Nevertheless, the men still got the big salaries.

- Norman P. Jouppi: For at least the past decade, computer architecture researchers have been publishing innovations based on simulations using limited benchmarks claiming improvements for general-purpose processors of 10% or less, while we are now reporting gains for a domain-specific architecture deployed in real hardware running genuine production applications of more than a factor of 10. Order-of-magnitude differences between commercial products are rare in computer architecture, which could even lead to the TPU becoming an archetype for future work in the field. We expect that many will build successors that will raise the bar even higher.

- John G. Messerly: They were amused by my optimism, but they didn’t really buy it. They were not interested in how to avoid a calamity; they’re convinced we are too far gone. For all their wealth and power, they don’t believe they can affect the future. They are simply accepting the darkest of all scenarios and then bringing whatever money and technology they can employ to insulate themselves — especially if they can’t get a seat on the rocket to Mars.

- Morgan Stanley: Today, public clouds account for just 20% of all computing workloads, but that percentage could grow to 48% by the end of 2020, according to Morgan Stanley. In a report published on Tuesday, analyst Katy L. Huberty forecast that global capital expenditure spending among 14 major cloud companies will increase 35% in 2018, up from 16% growth in 2017. Morgan Stanley estimates that Google, Amazon, Facebook and Microsoft alone will account for 73% of that growth in 2018.

- Broad Band: The Mountbatten archive was the perfect test case for a hypertext project: a vast, interrelated collection of documents spanning many different media, subject to as many readings as there could be perspectives on the last century of British history. Wendy put together a team, and by Christmas 1989, they had a running demo for a system called Microcosm. It was a remarkable design: just as the World Wide Web would a few years later, Microcosm demonstrated a new, intuitive way of navigating the massive amounts of multimedia information computer memory made accessible. Using multimedia navigation and intelligent links, it made information dynamic, alive, and adapted to the user. In fact, it wasn’t like the Web at all. It was better.

- The Memory Guy: To examine the cost impact of fab size, Scotten Jones, President of IC Knowledge LLC has modeled wafer cost versus fab capacity all the way out to 1,000,000 wafer per month. The chart used for this post’s graphic illustrates wafer cost versus fab capacity and includes the percentage increase in cost versus the 1,000,000 wafers per month fab for smaller fabs. Click on the chart if you would like to see a larger version. As can be seen from the figure wafer cost for an 8,000 wafer per month fab is a whopping 50% higher than for 1,000,000 wafers per month fab, 70,000 wafers per month is 5% higher and even at 250,000 wafers per month the wafer cost is 1% higher. Since DRAM is built using many different tools than those used for NAND flash, the optimum number of starts will differ too. For DRAM 60,000 starts proves to be an efficient volume. From the perspective of the chart it’s pretty clear why a fab with 100,000 wafer starts might be a good idea – it’s going to be significantly more cost-effective than a 60,000-start fab.

- evanjones: As expected for small N, linear search performs slightly better than binary search (29.6 ns compared to 35.7 ns, a 27% improvement). Surprisingly, the std::map implementation is faster than binary search on an array. This suggests to me that I either have a bug in my code, or there is something about my benchmark that causes Clang's optimizer to do something strange. I don't understand how the pointer chasing that happens with a binary tree could possibly be better than a plain binary search over an array. As the data sets get much larger, binary search is better than the map. Possibly this just means that modern CPUs are really good at pointer chasing, if the data is all in the L1 cache.
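If you want to poke at the linear-versus-binary question yourself, a small harness is enough to get started. The sketch below is in Python rather than the C++ of the original post, so interpreter overhead will dominate and the absolute numbers will look nothing like the nanosecond figures quoted above. The function names and the `compare` helper are assumptions for illustration.

```python
import bisect
import timeit

def linear_search(sorted_keys, target):
    """Scan left to right; return the index of target, or -1 if absent."""
    for i, key in enumerate(sorted_keys):
        if key == target:
            return i
        if key > target:
            break  # keys are sorted, so target cannot appear later
    return -1

def binary_search(sorted_keys, target):
    """Use bisect to find target; return its index, or -1 if absent."""
    i = bisect.bisect_left(sorted_keys, target)
    if i < len(sorted_keys) and sorted_keys[i] == target:
        return i
    return -1

def compare(n, repeats=10_000):
    """Return (linear, binary) seconds per lookup for an array of n keys."""
    keys = list(range(0, 2 * n, 2))
    target = keys[n // 2]
    lin = timeit.timeit(lambda: linear_search(keys, target), number=repeats)
    bin_ = timeit.timeit(lambda: binary_search(keys, target), number=repeats)
    return lin / repeats, bin_ / repeats
```

Calling `compare(8)` and then `compare(100_000)` should show where the crossover sits on your machine. Treat the result as a starting point for measuring one level deeper, not as a verdict.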

- Steve Blank: So, what does [The Death of Moore’s Law] mean for consumers? First, high performance applications that needed very fast computing locally on your device will continue their move to the cloud (where data centers are measured in football field sizes) further enabled by new 5G networks. Second, while computing devices we buy will not be much faster on today’s off-the-shelf software, new features– facial recognition, augmented reality, autonomous navigation, and apps we haven’t even thought about –are going to come from new software using new technology like new displays and sensors. The world of computing is moving into new and uncharted territory. For desktop and mobile devices, the need for a “must have” upgrade won’t be for speed, but because there’s a new capability or app. For chip manufacturers, for the first time in half a century, all rules are off. There will be a new set of winners and losers in this transition. It will be exciting to watch and see what emerges from the fog.

- Paul Ormerod: In 1880, if you wanted to hear a particular singer, you had to go to a live performance. Perhaps a thousand people could enjoy the joint consumption of the product. In 1980, tens of millions could watch on television. Rosen, writing well before the internet, argued that advances in communications technology such as radio and television increased enormously the potential size of markets involving joint consumption. As he put it so succinctly, “the possibility for talented persons to command both very large markets and very large incomes is apparent”. For example, the football played in England’s Premier League is in general better than that in the Scottish Premiership. But the English league rakes in well over £1bn a year in television rights, and Scotland less than £20m. It is the combination of the joint consumption nature of these services and advances in communications which mean that a relatively small number of sellers can in principle service the entire market. The more talented they are, the fewer still are needed. And that’s how they are able to earn so much.

- Zat Rana: One of Shannon’s go-to tricks was to restructure and contrast the problem in as many different ways as possible. This could mean exaggerating it, minimizing it, changing the words of how it is stated, reframing the angle from where it is looked at, and inverting it.

- Cory Doctorow: Here’s the most utopian thing I believe about technology: the more you learn about how to control the technology in your life, the better it will serve you and the harder it will be for others to turn it against you. The most democratic future is one in which every person has ready access to the training and tools they need to reconfigure all the technology in their lives to suit their needs. Even if you personally never avail yourself of these tools and trainings, you will still benefit from this system, because you will be able to choose among millions of other people who do take the time to gain the expertise necessary to customize your world to suit you.

- voycey: Speed is only one factor of a data warehouse that should be considered, as someone who has moved through 3 of these in the past year we have settled on a mix of Spark, Presto and BigQuery depending on the workload. - Presto is not good at longer queries, if a node dies the query fails and it needs to be restarted. It is however orders of magnitude faster than any of the other solutions when it comes to Geospatial functions, the team behind it are simply wizards. - BigQuery is also super fast and a fantastic tool for adhoc analysis of huge amounts of data, they have just started to implement GIS functionality in this so we are watching it closely. Some of our analysts have been stung on pricing where partitions weren't possible meaning we were charged for scanning 10TB+ of data for a relatively simple query - it has a learning curve for sure! - Redshift was our original data warehouse, it was great for prescribed data in an ETL pipeline, however scaling a cluster takes hours and data skew meant that the entire cluster would fill up during queries if sort keys and distribution keys weren't precisely calibrated - quite difficult when you have changing dimensions of data. - Spark / EMR / Tez has been our standout workhorse for many things now, it is much slower than any of the above but there are many tools that work with Spark and the ecosystem is growing rapidly, we had to perform a cross join of 16B records to 140M ranges and every single one of the above solutions either crapped out on us or became prohibitively expensive to run this at scale and get meaningful output. Spark took longer (1h 25m) but the progress was steady and quantifiable. Presto often died mid query for a number of reasons (including that we wanted to run this on pre-emptible instances on GCP and it doesn't support fault tolerance).
File formats are a HUGE differentiator when it comes to these systems as well - we chose ORC as our file format due to the availability of bloom filters and predicate pushdown in Presto, this means we can load a 10TB dataset in a couple of minutes and query the files directly without having to specifically load them into a store. Our preference is ORC > Parquet > AVRO > CSV in order.

- François Chollet with a thoughtful meditation in the form of Notes to myself on software engineering. On the development process: Code isn’t just meant to be executed. Code is also a means of communication across a team, a way to describe to others the solution to a problem. On API design: Your API has users, thus it has a user experience. In every decision you make, always keep the user in mind. On Software careers: Productivity boils down to high-velocity decision-making and a bias for action. This requires a) good intuition, which comes from experience, so as to make generally correct decisions given partial information, b) a keen awareness of when to move more carefully and wait for more information, because the cost of an incorrect decision would be greater than cost of the delay. The optimal velocity/quality decision-making tradeoff can vary greatly in different environments.

- Amitabha with Notes from #TheAIConf in San Francisco, CA, Sept 2018: Focus on Hardware: There seemed to be an important focus on HW, a lot of focus on edge devices to run inference workloads...China is a huge market for AI: Driven by huge mobile consumption (3x), large number of mobile payments (50x), large number of bicycle rides (300x), ubiquity of cameras in traffic intersections and streets, China is the most important market for AI (All numbers are relative to the USA). It is expected that 48% of AI money goes to China, while 36% to USA, and China will be a 7 trillion dollar market by 2025...Incumbent companies have huge advantage because of being large data warehouses. Only 7 companies in the world can really afford to do large-scale AI...On the technology point of view, a lot of talks focussed on Reinforcement Learning, Recurrent Neural Networks (RNN), Long Short-Term Memory (LSTM), Auto-encoders, Time-Series Analysis and Forecasting, as well as applications of ML/DL in the fields of robotics, self-driving cars, sports (baseball), storage systems, finance (investments and trading), chatbots, space, earth science, to name a few

- Serverless: Cold Start War: Here are some lessons learned from all the experiments above: Be prepared for 1-3 seconds cold starts even for the smallest Functions; Different languages and runtimes have roughly comparable cold start time within the same platform; Minimize the amount of dependencies, only bring what's needed; AWS keeps cold starts below 1 second most of the time, which is pretty amazing; All cloud providers are aware of the problem and are actively optimizing the cold start experience; It's likely that in middle term these optimizations will make cold starts a no-issue for the vast majority of applications

- Nvidia is betting a combination of the cloud and 5G will help it get some of that lucrative subscription gaming revenue. Nvidia promises to shift graphics grunt work to the cloud, for a price. Nvidia VP of sales, Paul Bommarito: "you don't need a big expensive high-end workstation." How? Nvidia's GeForce Now cloud gaming service that does most of the graphics grunt work for you and so removes the need for monster processing powers on your machine, and 5G super-fast wireless technology that provides the bandwidth and low latency. AT&T's Fuetsch: E-sports is the future. Dave 126: Small thought: the players need equally low latency to each other. However the spectators could watch a more visually impressive display - realtime ray-traced game footage at the expense of a few more milliseconds. Wild Bill: I’m in the beta for this. I’ve got to say it works extremely well. I actually play Overwatch on it (a super twitchy fast shooter) and it plays great for me (though I’m not very good at the game, so maybe more hardcore players might notice a difference). This is on a 76mb down / 38mb up connection

- Here are some Highlights from Git 2.19.

- Top earners? In the United States, Germany, India, and the United Kingdom DevOps specialists are the number one highest earning developer. DevOps specialists are earning a median salary close to $100,000 in the United States. Top tech? The specific technologies that developers use also impact salary. This year, the technologies most associated with higher salary include Go, Scala, Redis, and React.

- Ad serving latency decreased drastically: response times dropped from 90ms to under 10ms. GopherCon 2018 - From Prototype to Production: Lessons from Building and Scaling Reddit's Ad Serving Platform with Go. Odd to create your own ad serving platform these days, but reddit with 330M+ MAU chose to do so. They use: Apache Thrift for all RPCs; RocksDB for data storage; Go as the main backend language. The process goes like: Reddit.com makes a call to a service called ad selector; Enrichment service is responsible for getting more data and information about the request, user, and other information it needs to find and select the most relevant ad; ad selector selects the ad; The client sends an event HTTP request to the event tracker service; This event notification also gets shelled out to Kafka and Spark. Go was good: Increased developer velocity; Great performance out of the box; Easy to focus on business logic; Ad serving latency decreased drastically. Lessons: Use a framework/toolkit; Go makes rapid iteration easy and safe; Distributed tracing with Thrift and Go is hard; Use deadlines within and across services; Use load testing and benchmarking.
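"Use deadlines within and across services" is worth a small illustration. The sketch below is Python, not the Go and Thrift of the talk (in Go this job usually falls to `context.WithTimeout`), and every name in it (`Deadline`, `enrich`, `select_ad`, the ad id) is hypothetical. The idea is to fix one absolute deadline at the edge and have every hop check the remaining budget instead of starting a fresh timeout.

```python
import time

class DeadlineExceeded(Exception):
    """Raised when the request's time budget has run out."""

class Deadline:
    def __init__(self, timeout_s, clock=time.monotonic):
        self._clock = clock
        self._expires_at = clock() + timeout_s  # one absolute expiry per request

    def remaining(self):
        return self._expires_at - self._clock()

    def check(self):
        if self.remaining() <= 0:
            raise DeadlineExceeded("request budget exhausted")

def enrich(request, deadline):
    """Stand-in for the enrichment service; refuses to start late work."""
    deadline.check()
    return {**request, "enriched": True}

def select_ad(request, deadline):
    """Stand-in for the ad selector; passes the same deadline downstream."""
    enriched = enrich(request, deadline)
    deadline.check()
    return {"ad_id": "hypothetical-ad", "for": enriched["user"]}
```

A caller would create `Deadline(0.010)` for a 10 ms budget and hand it to `select_ad`. Across a real RPC boundary the remaining budget would have to travel in the request metadata, which this sketch does not show.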

- The next data explosion ground-zero? In-Chip Monitoring: Understanding dynamic conditions (voltage supply and junction temperature) as well as understanding how the chip has been made (process) has become a critical requirement for advanced node semiconductor design. So, we should not only get used to in-chip monitors and sensors but also understand the problems they solve and what the key attributes are for good in-chip monitors.

- Lots of interesting videos from Nerd Nite SF.

- How Discord Handles Two and Half Million Concurrent Voice Users using WebRTC: Discord Gateway, Discord Guilds and Discord Voice are all horizontally scalable. Discord Gateway and Discord Guilds are running at Google Cloud Platform. We are running more than 850 voice servers in 13 regions (hosted in more than 30 data centers) all over the world. This provisioning includes lots of redundancy to handle data center failures and DDoS attacks. We use a handful of providers and use physical servers in their data centers. We just recently added a South Africa region. Thanks to all our engineering efforts on both the client and server architecture, we are able to serve more than 2.6 million concurrent voice users with egress traffic of more than 220 Gbps (bits-per-second) and 120 Mpps (packets-per-second).
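A quick back-of-the-envelope on those Discord numbers. The inputs are the "more than" figures quoted above, so the ratios are rough, and dividing aggregate egress by concurrent users is a simplifying assumption (a user in a busy channel receives several streams at once).

```python
# Figures quoted in the Discord post, treated as approximate.
concurrent_users = 2.6e6
egress_bps = 220e9       # bits per second
packets_per_s = 120e6    # packets per second

per_user_kbps = egress_bps / concurrent_users / 1e3
per_user_pps = packets_per_s / concurrent_users
avg_packet_bytes = egress_bps / packets_per_s / 8

print(f"{per_user_kbps:.1f} kbps, {per_user_pps:.1f} pps per user, "
      f"{avg_packet_bytes:.0f} bytes per packet")
```

That works out to roughly 85 kbps and 46 packets per second per user, with an average packet of about 229 bytes.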

- Farhan Ali Khan has taken on a project, implementing a few famous papers related to distributed systems in Golang. The current article is Chord: Building a DHT (Distributed Hash Table) in Golang. You get an explanation with code examples. Nicely done.
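For a feel of what a Chord-style ring does, here is a deliberately simplified Python sketch. It is not the article's Go code. It hashes node names and keys onto one identifier ring and assigns each key to its successor node, but it cheats by keeping a global sorted list of nodes instead of the per-node finger tables that give Chord its O(log N) lookups. The ring size `M` and all names are assumptions.

```python
import bisect
import hashlib

M = 32  # identifier bits; the ring has 2**M positions (assumed for the demo)

def chord_id(name):
    """Hash a node name or key onto the identifier ring using SHA-1."""
    digest = hashlib.sha1(name.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (2 ** M)

class Ring:
    def __init__(self, node_names):
        self._by_id = {chord_id(n): n for n in node_names}
        self._ids = sorted(self._by_id)

    def successor(self, key):
        """Return the node owning key: first node id >= key id, wrapping."""
        kid = chord_id(key)
        i = bisect.bisect_left(self._ids, kid)
        return self._by_id[self._ids[i % len(self._ids)]]

nodes = ["node-a", "node-b", "node-c"]
ring = Ring(nodes)
owner = ring.successor("some-key")
```

The useful property is that removing a node only moves the keys that node owned; every other key keeps its owner.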

- Go get 'em, videos from GopherCon 2018 are now available. Titles include: Micro optimizing Go Code; gRPC State Machines and Testing; Rethinking Classical Concurrency Patterns.

- Videos from Serverlessconf San Francisco 2018 are now available. Better Application Architecture with Serverless by Joe Emison who considers code a liability and serverless as a really sharp pair of scissors for cutting away layers of code. Put your core business logic in serverless and use other services to handle undifferentiated functionality; AppSync and GraphQL is a great unifying API for slapping services together.

- Remember those cartoons where Lucy pulls the football away before Charlie Brown can kick it? Add the magic of Google Photos to your app. PathTo3Commas: Or raise the API usage costs from under $100 a month on average to over ~$15,000 a month like they just did/are doing with the Google Maps/Places/Geocode API's. Not kidding. They're shutting thousands of websites, businesses down because of this. They gave everyone two months notice to effectively shutting them down.

- The total migration: 10x the number of queries, 1/10th the query runtime. Managing your Amazon Redshift performance: How Plaid uses Periscope Data: This led us to our first critical discovery: 95% of the slow queries came from 5% of the tables...Once we understood the problem, the technical solution was fairly straight-forward: pre-compute the common elements of the queries by creating rollup tables, materialized views, and pre-filtered & pre-segmented tables, and change user queries to run against these derivative tables. We already used Airflow for a good amount of data ETL management, so it was an easy choice to start materializing views and rollups. The associated DAGs are simple to implement; just have Airflow run the SQL query every 15 minutes or so, and then swap out the old table for the new one.
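The "run the SQL, then swap out the old table for the new one" step can be sketched in a few lines. This uses Python's built-in `sqlite3` as a stand-in for Redshift, so the DDL details are SQLite's, and the table names (`transactions`, `daily_totals`) are invented. Scheduling it every 15 minutes, which Plaid does with Airflow, is not shown.

```python
import sqlite3

def refresh_rollup(conn):
    """Rebuild the rollup off to the side, then swap it in atomically."""
    conn.execute("DROP TABLE IF EXISTS daily_totals_new")
    conn.execute(
        "CREATE TABLE daily_totals_new AS "
        "SELECT day, COUNT(*) AS n, SUM(amount) AS total "
        "FROM transactions GROUP BY day"
    )
    # Swap inside one explicit transaction so readers never see a gap.
    conn.execute("BEGIN")
    try:
        conn.execute("DROP TABLE IF EXISTS daily_totals")
        conn.execute("ALTER TABLE daily_totals_new RENAME TO daily_totals")
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise

# isolation_level=None puts sqlite3 in autocommit mode, so BEGIN/COMMIT are ours.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE transactions (day TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?)",
    [("2018-09-13", 5.0), ("2018-09-13", 7.0), ("2018-09-14", 1.0)],
)
refresh_rollup(conn)
rows = conn.execute("SELECT day, n, total FROM daily_totals ORDER BY day").fetchall()
```

User queries then hit `daily_totals` instead of scanning the raw table, which is the whole trick.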

- facebookincubator/LogDevice: a scalable and fault tolerant distributed log system. While a file-system stores and serves data organized as files, a log system stores and delivers data organized as logs. The log can be viewed as a record-oriented, append-only, and trimmable file. How is it different than Kafka? sh00es: LogDevice is a lower level building block compared to all of Kafka infrastructure. You could build something like Kafka Streams or KSQL on top of it, but nothing like that currently exists. LogDevice's API is similar to a subset of Kafka's Producer and Consumer APIs. This lack of infrastructure around it is the major drawback.
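"Record-oriented, append-only, and trimmable" is easy to picture with a toy. The Python class below is an in-memory illustration only. It is not LogDevice's API, and the method names and sequence numbering are assumptions.

```python
class Log:
    """Toy log: whole records, appended at the tail, trimmed from the head."""

    def __init__(self):
        self._records = []  # retained records, oldest first
        self._first = 1     # sequence number of the oldest retained record
        self._next = 1      # sequence number the next append will receive

    def append(self, payload):
        """Add a record at the tail and return its sequence number."""
        seq = self._next
        self._records.append(payload)
        self._next += 1
        return seq

    def read(self, from_seq):
        """Yield (seq, payload) pairs starting at from_seq, skipping trimmed ones."""
        start = max(from_seq, self._first)
        for seq in range(start, self._next):
            yield seq, self._records[seq - self._first]

    def trim(self, up_to_seq):
        """Drop every record with a sequence number <= up_to_seq."""
        up_to = min(up_to_seq, self._next - 1)
        if up_to >= self._first:
            del self._records[: up_to - self._first + 1]
            self._first = up_to + 1
```

There is no update-in-place and no delete from the middle. Those two omissions are what make a log simple to replicate and reason about.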

- yahoo/Oak (article): a concurrent Key-Value Map that may keep all keys and values off-heap. This enables working with bigger heap sizes than JVM's managed heap. OakMap implements an API similar to the industry standard Java8 ConcurrentNavigableMap API. It provides strong (atomic) semantics for read, write, and read-modify-write, as well as (non-atomic) range query (scan) operations, both forward and backward.

- Nozbe/WatermelonDB: a new way of dealing with user data in React Native and React web apps. It's optimized for building complex applications in React / React Native, and the number one goal is real-world performance. In simple words, your app must launch fast. Why not use SQLite directly? radex: SQLite gives you just the "data fetching" part. But if you're building a React/RN app, you probably also want everything to be automatically observable. For example, if you have a todo app, and you mark a task as done, you'd want the task component to re-render, the list to re-render (put the task at the bottom), and update the right counters (the counter of all tasks, and a counter of all tasks in a project), etc. I hope you're getting the point. Watermelon is an observable abstraction on top of SQLite (or an arbitrary database), so that you can connect data to components and have them update automatically
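The todo example in that quote maps onto a tiny observer pattern. The Python sketch below is purely illustrative. WatermelonDB is a JavaScript library and this is not its API, and a plain dict stands in for the database. Subscribers play the role of components and get a fresh snapshot (ordering plus counter) whenever the data changes.

```python
class ObservableTasks:
    """Toy observable store: every write pushes a new snapshot to subscribers."""

    def __init__(self):
        self._tasks = {}        # task name -> done flag
        self._subscribers = []

    def snapshot(self):
        """Open tasks first, done tasks at the bottom, plus an open counter."""
        open_tasks = sorted(n for n, done in self._tasks.items() if not done)
        done_tasks = sorted(n for n, done in self._tasks.items() if done)
        return {"order": open_tasks + done_tasks, "open_count": len(open_tasks)}

    def subscribe(self, callback):
        self._subscribers.append(callback)
        callback(self.snapshot())  # deliver the current state immediately

    def add(self, name):
        self._tasks[name] = False
        self._notify()

    def mark_done(self, name):
        self._tasks[name] = True
        self._notify()

    def _notify(self):
        for callback in self._subscribers:
            callback(self.snapshot())
```

Marking a task done is a single write, and the list order and the counter both update without the caller wiring anything by hand.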

- sslab-gatech/qsym (article): A Practical Concolic Execution Engine Tailored for Hybrid Fuzzing. "Here’s a simplified representation of a bug that QSYM found in ffmpeg. It’s nearly impossible for a fuzzer to get past all the constraints (lines 2-9), but QSYM was able to do so by modifying seven bytes of the input. This meant that the AFL fuzzer was able to pass the branch with the new test case and eventually reach the bug."

- cjbarber/ToolsOfTheTrade: Tools of The Trade, from Hacker News.

- auchenberg/volkswagen: Volkswagen detects when your tests are being run in a CI server, and makes them pass.

- Finding and fixing software bugs automatically with SapFix and Sapienz: SapFix can automatically generate fixes for specific bugs, and then propose them to engineers for approval and deployment to production. SapFix has been used to accelerate the process of shipping robust, stable code updates to millions of devices using the Facebook Android app — the first such use of AI-powered testing and debugging tools in production at this scale. We intend to share SapFix with the engineering community, as it is the next step in the evolution of automating debugging, with the potential to boost the production and stability of new code for a wide range of companies and research organizations.

- An Empirical Study of Experienced Programmers’ Acquisition of New Programming Languages: Our results show that users spend 42% of online time viewing example code and that programmers appreciate the Rust Enhanced package’s in-line compiler errors, choosing to refresh every 30.6 seconds after first discovering this feature. We did not find any significant correlations between the resources used and the total task time or the learning outcomes. We discuss these results in light of design implications for language developers seeking to create resources to encourage usage and adoption by experienced programmers.

- github.com/Microsoft/FASTER/wiki/Performance-of-FASTER-in-C#: ConcurrentDictionary gets around 500K ops/sec for this workload. The 10-50X performance boost with FASTER is attributed to the avoidance of fine grained object allocations and the latch-free paged design of the index and record log.

- SlimDB: A Space-Efficient Key-Value Storage Engine For Semi-Sorted Data: SlimDB is a paper worth reading from VLDB 2018. The highlights from the paper are that it shows: how to use less memory for filters and indexes with an LSM; how to reduce the CPU penalty for queries with tiered compaction; and the benefit of more diversity in LSM tree shapes. Cache amplification has become more important as database:RAM ratios increase. With SSD it is possible to attach many TB of usable data to a server for OLTP. By usable I mean that the SSD has enough IOPs to access the data. But it isn't possible to grow the amount of RAM per server at that rate. Many of the early RocksDB workloads used database:RAM ratios that were about 10:1 and everything but the max level (Lmax) of the LSM tree was in memory. As the ratio grows that won't be possible unless filters and block indexes use less memory. SlimDB does that via three-level block indexes and multi-level cuckoo-filters.

- Idempotence Is Not a Medical Condition: In a world full of retried messages, idempotence is an essential property for reliable systems. Idempotence is a mathematical term meaning that performing an operation multiple times will have the same effect as performing it exactly one time. The challenges occur when messages are related to each other and may have ordering constraints. How are messages associated? What can go wrong? How can an application developer build a correctly functioning app without losing his or her mojo?
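
To make the definition of idempotence concrete, here is a minimal sketch of one common way to get it under retries: the receiver remembers the IDs of messages it has already applied. It is not taken from the linked article. The `Message` and `Ledger` classes and the `message_id` field are hypothetical names invented for illustration, and the sketch assumes the sender attaches a unique ID to every message.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Message:
    message_id: str  # hypothetical: unique ID assigned by the sender
    account: str
    amount: int


@dataclass
class Ledger:
    """Hypothetical receiver that applies each deposit at most once."""
    balances: dict = field(default_factory=dict)
    seen_ids: set = field(default_factory=set)

    def apply(self, msg: Message) -> bool:
        """Apply a deposit; return False if the message was a duplicate."""
        if msg.message_id in self.seen_ids:
            return False  # redelivered message: leave state untouched
        self.balances[msg.account] = self.balances.get(msg.account, 0) + msg.amount
        self.seen_ids.add(msg.message_id)
        return True


ledger = Ledger()
deposit = Message("m-1", "alice", 50)
first = ledger.apply(deposit)  # first delivery changes the balance
retry = ledger.apply(deposit)  # simulated retry has no further effect
```

Applying the same deposit twice leaves the balance as if it had been applied once. In a real system the balance update and the recording of the ID would need to be committed atomically, and this sketch does nothing about the ordering constraints between related messages that the summary raises.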